Introducing Dolly 2.0: Unlocking the Full Potential of Open-Source Language Models

Introduction

Databricks has unveiled a game-changer in the world of artificial intelligence: Dolly 2.0, which Databricks describes as the first open-source, instruction-following large language model (LLM) fine-tuned on a human-generated instruction dataset that is licensed for commercial use. But what makes Dolly 2.0 so revolutionary, and how can organizations leverage its capabilities to drive innovation? This guide covers the model’s technical details, strengths, and applications, and explores how an LLM API can help overcome its limitations.

What is Dolly 2.0?

Dolly 2.0 is the latest breakthrough in large language models (LLMs) developed by Databricks. Building on the success of their earlier Dolly 1.0 model, Dolly 2.0 is the first open-source, instruction-following LLM available for commercial use.

Technical Details of Dolly 2.0

Dolly 2.0 is a 12-billion parameter model based on EleutherAI’s Pythia model family and fine-tuned on a new dataset called databricks-dolly-15k. Unlike earlier instruction datasets, databricks-dolly-15k is licensed for commercial use under a Creative Commons license (CC BY-SA 3.0).

The dataset contains 15,000 high-quality prompt-response pairs written specifically for instruction tuning of large language models. It was crowdsourced from over 5,000 Databricks employees during March and April 2023, who were incentivized through a contest to write prompts and responses covering tasks like open-ended Q&A, closed Q&A (answering from a supplied reference passage), information extraction and summarization, brainstorming, classification, and creative writing.

By gamifying the effort, Databricks collected the dataset quickly and exceeded its initial target of 10,000 pairs.
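To make the dataset's shape concrete, here is a minimal sketch that tallies records by task category. It assumes each record carries four fields (instruction, context, response, category) and that category labels look like `open_qa` or `closed_qa`; the sample records are invented for illustration, and `count_by_category` and `load_dolly_15k` are hypothetical helper names.

```python
from collections import Counter

# Hypothetical records that mimic the dataset's four fields. The text is
# invented for illustration and is not copied from databricks-dolly-15k.
SAMPLE_RECORDS = [
    {
        "instruction": "Suggest three names for a neighborhood bakery.",
        "context": "",
        "response": "Morning Crumb, Flour and Stone, The Daily Loaf.",
        "category": "brainstorming",
    },
    {
        "instruction": "According to the passage, when did the bridge open?",
        "context": "The bridge opened to traffic in 1932 after six years of work.",
        "response": "The bridge opened in 1932.",
        "category": "closed_qa",
    },
    {
        "instruction": "Why is the sky blue?",
        "context": "",
        "response": "Air molecules scatter short blue wavelengths more than red ones.",
        "category": "open_qa",
    },
    {
        "instruction": "Why do leaves change color in autumn?",
        "context": "",
        "response": "Chlorophyll breaks down and reveals other pigments.",
        "category": "open_qa",
    },
]


def count_by_category(records):
    """Tally how many records fall into each task category."""
    return Counter(record["category"] for record in records)


def load_dolly_15k():
    """Download the real dataset (assumes the `datasets` package and network access)."""
    from datasets import load_dataset  # imported lazily so the sketch runs without it

    return load_dataset("databricks/databricks-dolly-15k", split="train")


if __name__ == "__main__":
    for category, count in count_by_category(SAMPLE_RECORDS).most_common():
        print(f"{category}: {count}")
```

Passing the result of `load_dolly_15k()` to `count_by_category` should give the same breakdown for the full dataset, provided the field names match the assumption above.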

Why did Databricks make Dolly 2.0 commercially viable?

Databricks’ journey to create a commercially viable instructional LLM was driven by customer demand. When Dolly 1.0 was released, the top question was whether it could be used commercially. It could not: Dolly 1.0 was trained on the Stanford Alpaca dataset, which was generated with OpenAI’s API, and the terms of service attached to that output prohibit using it to build competing models. To solve this, Databricks crowdsourced the new databricks-dolly-15k dataset, with over 5,000 employees writing original, high-quality prompt-response pairs.

The result is Dolly 2.0 — a powerful, open-source LLM that any organization can use, modify, and build upon to create domain-specific AI assistants and applications. Databricks believes this approach of open, community-driven AI development is critical to ensuring AI benefits everyone, not just a few large tech companies.

The Strengths of Dolly 2.0

Customizable Fine-Tuning Capabilities

Unlike managed large language models (LLMs) like ChatGPT, Dolly 2.0 provides users with full control over the fine-tuning process. Rather than being constrained by per-token or per-record charges imposed by managed service providers, users can fine-tune the pre-trained open-source Dolly 2.0 models to their specific needs without incurring additional fees. Crucially, Dolly 2.0 users also have complete access to evaluation metrics and a clear understanding of the model’s behavior, empowering data scientists to feel more comfortable and confident when working with the technology.

Scalable and Adaptable Infrastructure

Dolly 2.0 offers users the freedom to deploy the models on their preferred cloud or on-premise infrastructure, providing the flexibility to choose the deployment environment that best suits their needs. When the need arises for improved latency or increased throughput, users can effortlessly scale up or scale out their infrastructure on demand by provisioning additional cloud resources. This ability to dynamically scale is particularly valuable for organizations with variable workloads. This level of infrastructure flexibility is not typically available with managed service LLMs, where users are limited to the provider’s own scaling capabilities.

Secure and Confidential Data Handling

For industries with strict data privacy and confidentiality requirements, such as finance and healthcare, Dolly 2.0 presents a more secure alternative to externally hosted managed service LLMs. When fine-tuning the Dolly 2.0 models, users can do so without exposing any of their confidential data to third-party providers. Additionally, the inference can be performed entirely within the user’s own secure servers, ensuring that sensitive information never leaves their controlled environment. This stands in contrast to managed services like ChatGPT, where users must trust the service provider to maintain the necessary data security posture and comply with relevant regulations.

Unrestricted Commercial Utilization

Dolly 2.0’s permissive licensing grants users the freedom to use the models for commercial purposes: the training code in the databrickslabs/dolly repository is released under Apache 2.0, and the model card on Hugging Face lists an MIT license for the weights. This open licensing enables organizations to sell products or deploy services that leverage the Dolly 2.0 models without paying royalties or navigating complex licensing agreements. This flexibility is not always present with other open-source large language models, which may come with more restrictive usage terms or require licensing fees for certain commercial applications.

Dolly 2.0 Commercial Applications

Customizable AI Assistants

With Dolly 2.0 being an open-source, commercially-viable instruction-following language model, organizations can leverage it to build tailored AI assistants for their specific needs. Rather than being limited to generic chatbots or assistants, companies can fine-tune and customize Dolly 2.0 to provide domain-specific support for their employees and customers.

For example, a financial services firm could take Dolly 2.0 and further train it on their internal policies, product information, and customer service data. This would allow them to deploy a highly personalized AI assistant that can handle a wide range of customer inquiries, from account management to investment advice, all while maintaining compliance with company standards.
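As a rough illustration of the data preparation this kind of fine-tuning involves, the sketch below turns internal question-and-answer pairs into instruction-style training text. The template wording and the `### Instruction:` / `### Response:` / `### End` markers are modeled on the layout used in the Dolly training code, but treat the exact strings as an assumption; `build_training_text` and `to_jsonl` are hypothetical helpers, and the sample pair is invented.

```python
import json

# Assumed template text, modeled on the Dolly training code's prompt layout.
INTRO = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def build_training_text(instruction, response, context=""):
    """Format one example as a single training string."""
    parts = [INTRO, f"### Instruction:\n{instruction}"]
    if context:
        # Optional reference text, for example a policy excerpt
        parts.append(f"Input:\n{context}")
    parts.append(f"### Response:\n{response}")
    parts.append("### End")
    return "\n\n".join(parts)


def to_jsonl(pairs):
    """Serialize (instruction, response) pairs as one JSON object per line."""
    lines = [json.dumps({"text": build_training_text(q, a)}) for q, a in pairs]
    return "\n".join(lines)


if __name__ == "__main__":
    # Invented example pair, standing in for internal customer-service data
    pairs = [("How do I reset my online banking password?",
              "Use the 'Forgot password' link on the sign-in page.")]
    print(to_jsonl(pairs))
```

The resulting JSONL file can feed whichever training script you use; the `text` key is only a placeholder for the field name that script expects.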

Content Creation and Ideation

Dolly 2.0’s broad instruction-following capabilities make it well-suited for content creation and ideation tasks. Businesses in fields like marketing, advertising, and media could use Dolly 2.0 to generate initial drafts of articles, social media posts, creative briefs, and more. The model’s ability to summarize information and brainstorm new ideas could significantly accelerate the content production process.

A marketing agency, for instance, could leverage Dolly 2.0 to rapidly prototype campaign concepts, write sample social media copy, and even produce initial creative assets like taglines and slogans. Humans could then refine and polish the model’s outputs to meet their specific brand and messaging requirements.

Automated Data Analysis

Organizations with large datasets, such as market research firms or business intelligence teams, could employ Dolly 2.0 to automate certain data analysis and reporting tasks. The model’s competency in extracting key information from text, answering targeted questions, and summarizing insights could help generate initial analytical findings that humans can then validate and expand upon.

This could reduce the time and effort required to transform raw data into actionable intelligence, allowing analysts to focus more on high-level interpretation and strategic recommendations rather than low-level data processing.

The open-source and commercially-friendly nature of Dolly 2.0 opens up a wide range of potential use cases across industries, empowering organizations to create customized AI solutions that meet their unique needs and priorities. As Databricks has emphasized, this approach aims to ensure that the benefits of advanced language models are accessible to a broader community, not just a few large technology companies.

How to get started with Dolly 2.0?

If you want to get started with using Dolly 2.0 without training the model, instructions are as follows:

1. The pre-trained Dolly 2.0 model is available on Hugging Face as databricks/dolly-v2-12b.

2. To use the model with the Transformers library on a machine with A100 GPUs:

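    import torch
    from transformers import pipeline

    # bfloat16 halves memory use versus float32; device_map="auto" places the weights on the available GPUs
    instruct_pipeline = pipeline(model="databricks/dolly-v2-12b", torch_dtype=torch.bfloat16, trust_remote_code=True, device_map="auto")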

You can then use the instruct_pipeline to generate responses to instructions.

3. For other GPU instances:

(1) A10 GPUs:

- The 6.9B and 2.8B parameter models should work as-is.

- For the 12B parameter model, you need to load and run the model using 8-bit weights, which may impact the results slightly.

(2) V100 GPUs:

- Set torch_dtype=torch.float16 in the pipeline() command instead of torch.bfloat16.

- The 12B parameter model may not function well in 8-bit on V100s.
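The GPU-specific guidance above can be sketched as a small helper that picks the pipeline arguments and then runs one instruction. This is a sketch under assumptions, not the official setup: `pipeline_kwargs`, `build_pipeline`, and `answer` are hypothetical names, passing `model_kwargs={"load_in_8bit": True}` assumes the bitsandbytes package is installed and that your Transformers version still accepts that flag, and the `[0]["generated_text"]` result shape follows the usage shown on the model card.

```python
def pipeline_kwargs(gpu, model="databricks/dolly-v2-12b"):
    """Pick pipeline() arguments for a GPU type, following the guidance above."""
    gpu = gpu.strip().upper()
    if gpu not in {"A100", "A10", "V100"}:
        raise ValueError(f"no guidance for GPU type: {gpu}")
    kwargs = {
        "model": model,
        # Stored as a name so this function works without torch installed
        "torch_dtype": "float16" if gpu == "V100" else "bfloat16",
        "trust_remote_code": True,
        "device_map": "auto",
    }
    if gpu == "A10" and model.endswith("12b"):
        # Assumption: 8-bit loading is requested through model_kwargs
        kwargs["model_kwargs"] = {"load_in_8bit": True}
    return kwargs


def build_pipeline(gpu, model="databricks/dolly-v2-12b"):
    """Create the pipeline; downloads the weights, so call it only on a GPU machine."""
    import torch
    from transformers import pipeline

    kwargs = pipeline_kwargs(gpu, model)
    kwargs["torch_dtype"] = getattr(torch, kwargs["torch_dtype"])
    return pipeline(**kwargs)


def answer(instruct_pipeline, instruction):
    """Run one instruction and return the generated text."""
    result = instruct_pipeline(instruction)
    return result[0]["generated_text"]


if __name__ == "__main__":
    print(pipeline_kwargs("V100"))
    # Stub that mimics the assumed result shape, so the sketch runs without a GPU
    stub = lambda prompt: [{"generated_text": f"(stub reply to: {prompt})"}]
    print(answer(stub, "Summarize the benefits of open-source LLMs."))
```

On real hardware, `answer(build_pipeline("A100"), "...")` would replace the stub call.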

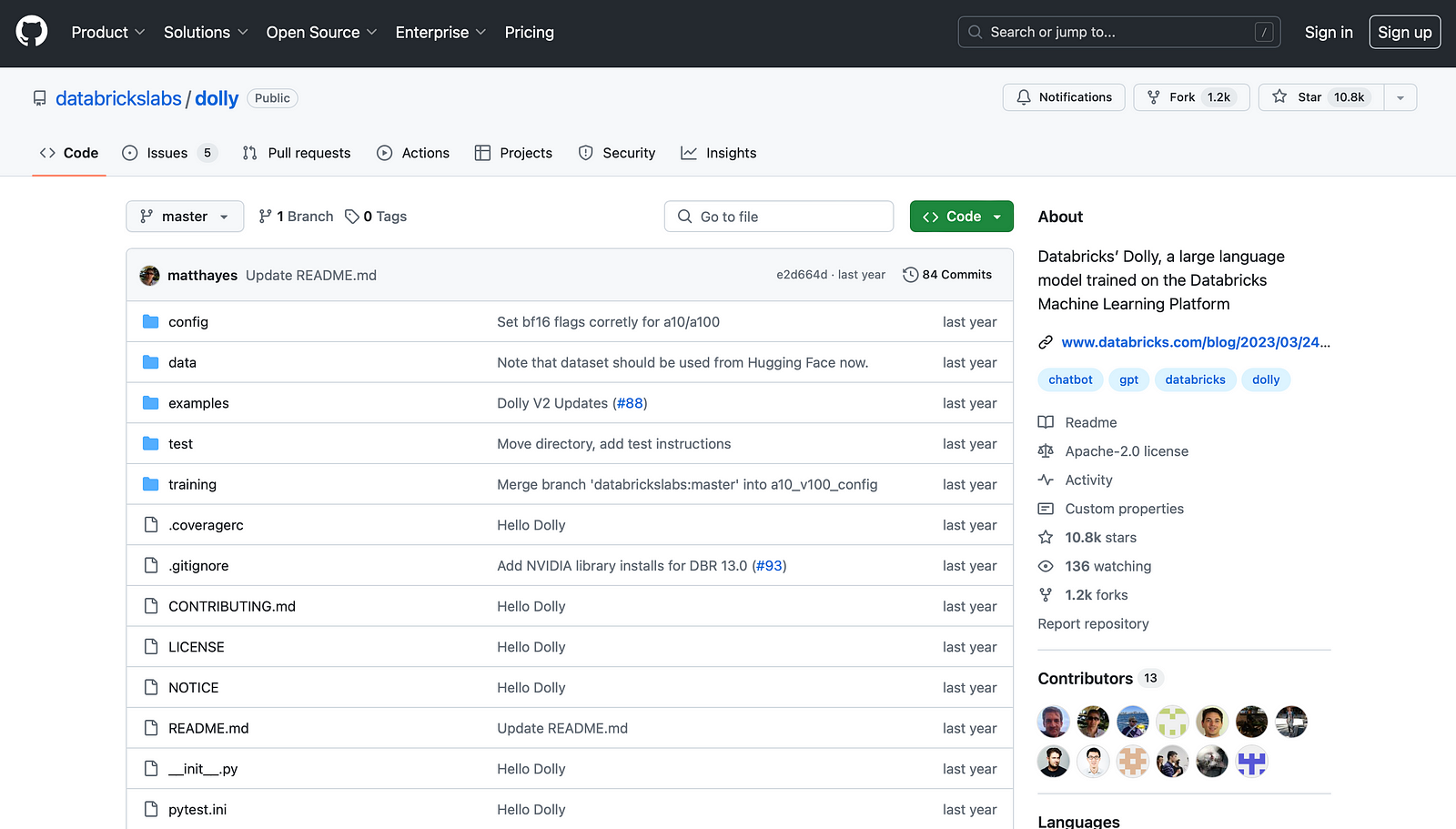

The key points are that the pre-trained Dolly 2.0 model is available on Hugging Face and that you can load it with the Transformers library to generate responses. The specific configuration may need to be adjusted depending on the GPU hardware you have available. For more information, see the databrickslabs/dolly repository on GitHub.
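A back-of-the-envelope calculation helps explain why the configuration depends on the GPU. The sketch below estimates the memory needed just to hold the weights, assuming 2 bytes per parameter for bfloat16 or float16 and 1 byte for 8-bit weights; `weight_memory_gb` is a hypothetical helper, and the estimate ignores activations and other runtime overhead, so real usage is higher.

```python
# Bytes needed to store one parameter at each precision
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}


def weight_memory_gb(params_billion, dtype):
    """Approximate weight memory in GB (1 GB = 1e9 bytes), weights only."""
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * BYTES_PER_PARAM[dtype]


if __name__ == "__main__":
    for size in (12, 6.9, 2.8):
        for dtype in ("bfloat16", "int8"):
            print(f"{size}B params in {dtype}: ~{weight_memory_gb(size, dtype):.1f} GB")
```

Under these assumptions the 12B model's 16-bit weights alone take about as much memory as a 24 GB A10 has in total, which is consistent with the advice to load it in 8-bit on that card.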

Limitations and Shortcomings of Dolly 2.0

While Dolly 2.0 represents a significant advancement in open-source, commercially-viable instruction-following language models, it is not without its limitations.

Language Limitations

One key shortcoming is the model’s lack of extensive training in languages beyond English. Neither Dolly 2.0 nor its underlying Pythia backbone has been extensively trained on non-English datasets. Applications that require multilingual capabilities would therefore likely need substantial fine-tuning to capture the nuances of other languages, which may not be viable given how many languages and linguistic features would have to be covered.

Contextual Constraints

Another limitation is Dolly 2.0’s relatively narrow token window of 2,048 tokens. This is significantly smaller than the context sizes supported by many managed language models, which can go up to 32,000 tokens or more. For use cases involving large inputs, such as long-form document summarization, Dolly 2.0 may require chunking strategies and could potentially produce subpar results due to the limited context it can process at once.
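One common chunking strategy is a sliding window with overlap, sketched below. It works on any list of tokens; in practice you would tokenize with the model's own tokenizer, which is not shown here. The `reserve` budget (room for the instruction and the generated answer) and the `overlap` size are illustrative values, not recommendations from Databricks, and `chunk_tokens` is a hypothetical helper.

```python
def chunk_tokens(tokens, max_tokens=2048, reserve=256, overlap=64):
    """Split tokens into overlapping windows that fit the context limit.

    `reserve` tokens are held back for the prompt template and the answer;
    consecutive windows share `overlap` tokens so content is not cut blindly.
    """
    window = max_tokens - reserve
    if window <= overlap:
        raise ValueError("window must be larger than overlap")
    step = window - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # the last window already reaches the end of the input
    return chunks


if __name__ == "__main__":
    # Whitespace splitting stands in for a real tokenizer in this sketch
    document = "word " * 5000
    pieces = chunk_tokens(document.split())
    print(len(pieces), [len(p) for p in pieces])
```

Each chunk can then be summarized separately and the partial summaries combined in a final pass, at the cost of losing some cross-chunk context.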

Scalability Concerns

Additionally, the current Dolly 2.0 models do not yet scale up to the 100 billion parameter range, which some applications may require to compete with the capabilities of models like ChatGPT. This size constraint could limit Dolly 2.0’s performance in certain high-stakes or mission-critical scenarios where the most powerful language models are needed.

Ongoing Limitations

Databricks has also acknowledged that, as a research-oriented model under active development, Dolly 2.0 may exhibit various other limitations. These include difficulties in handling complex prompts, open-ended question answering, proper formatting of writing tasks, code generation, mathematical operations, and maintaining a consistent sense of humor or writing style. While these shortcomings are likely to be addressed through further iterations and refinements, they represent current constraints that users should be aware of when considering Dolly 2.0 for their specific applications.

Overcoming limitations of Dolly 2.0

While open-source models like Dolly 2.0 represent important advancements, they still have significant limitations that can constrain their real-world applicability. To overcome these limitations, Novita AI offers a comprehensive LLM API designed to give organizations the flexibility and capabilities they need to build truly customized AI solutions.

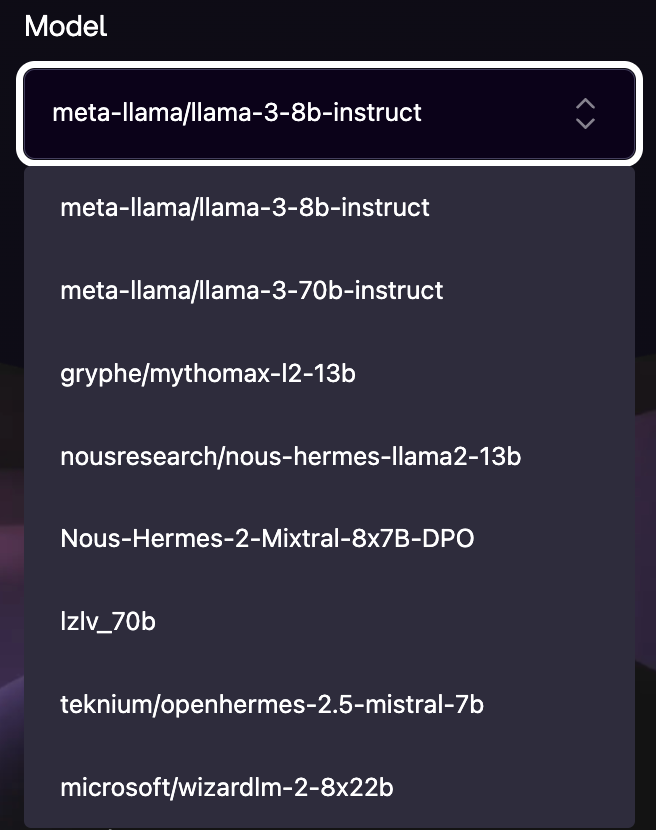

Model Variety and Customization

At the core of our LLM API is the ability to choose from a variety of large language models, not just a single pre-trained option. This means you can select the model that best aligns with your specific use case, whether that’s a multilingual variant for global applications, a higher-parameter model for mission-critical tasks, or a specialized domain-tuned version for industry-specific needs.

But model selection is just the beginning. Our API also allows you to systematically modify the tone, personality, and behavior of your chosen LLM through carefully crafted prompts. By shaping the model’s response patterns in this way, you can ensure your AI assistant exhibits the exact voice, empathy, and expertise required to engage your users or customers effectively.

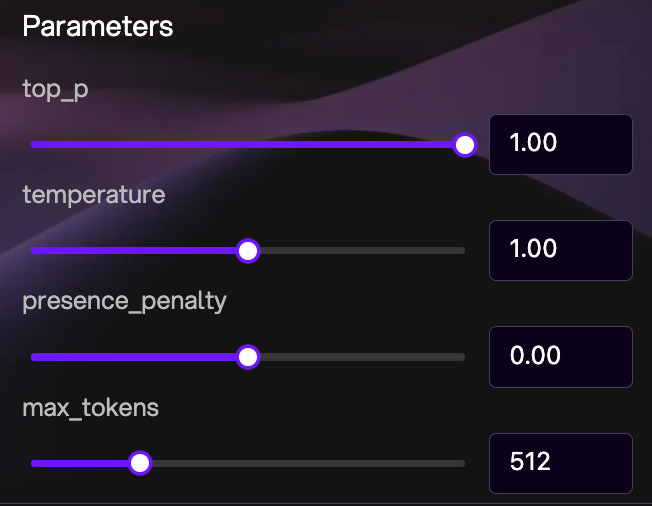

Advanced Parameter Controls

In addition to model and prompt customization, our LLM API puts granular control in your hands. You can adjust key parameters like temperature, top_p, presence_penalty, and maximum tokens to optimize the model’s outputs for your specific application requirements. This level of tailoring allows you to strike the perfect balance between creativity, coherence, and concision.
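To show how these controls fit together, here is a minimal sketch that assembles a request body with a system prompt and the four parameters named above. It assumes an OpenAI-style chat completion payload (`messages`, `temperature`, `top_p`, `presence_penalty`, `max_tokens`) and the value ranges commonly used with that convention; the model name is a placeholder, `build_chat_request` is a hypothetical helper, and no endpoint is called, so check the API documentation for the actual field names and limits.

```python
import json


def build_chat_request(user_message, system_prompt, model="example-model",
                       temperature=0.7, top_p=0.9, presence_penalty=0.0,
                       max_tokens=256):
    """Assemble an OpenAI-style chat payload (assumed format, not sent anywhere)."""
    # Ranges below follow the common OpenAI-style convention (an assumption)
    if not 0.0 <= temperature <= 2.0:
        raise ValueError("temperature should be between 0 and 2")
    if not 0.0 < top_p <= 1.0:
        raise ValueError("top_p should be in (0, 1]")
    if not -2.0 <= presence_penalty <= 2.0:
        raise ValueError("presence_penalty should be between -2 and 2")
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return {
        "model": model,  # placeholder name, replace with a real model id
        "messages": [
            # The system prompt sets tone and persona
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,            # higher means more varied wording
        "top_p": top_p,                        # nucleus sampling cutoff
        "presence_penalty": presence_penalty,  # discourages repeating topics
        "max_tokens": max_tokens,              # caps the response length
    }


if __name__ == "__main__":
    payload = build_chat_request(
        "What are your opening hours?",
        "You are a friendly, concise support assistant for a bookstore.",
        temperature=0.3,
    )
    print(json.dumps(payload, indent=2))
```

Lower temperature and top_p values tend to suit factual support answers, while higher values suit brainstorming and creative copy.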

Seamless Character Integration

To further enhance the user experience, our LLM API supports the integration of custom characters that can converse with your end-users. These characters can be designed to match your brand, industry, or target audience, helping to create a more immersive and personalized interaction. By blending the power of large language models with the familiarity of a relatable character, you can build AI assistants that truly resonate with your audience.

Conclusion

While Dolly 2.0 offers a promising open-source alternative to commercially-restricted instruction-following language models, it is not without its limitations. Organizations should carefully evaluate Dolly 2.0’s capabilities and constraints in the context of their specific use cases and requirements before adopting it. To overcome the limitations of Dolly 2.0 and other open-source language models, Novita AI’s comprehensive LLM API can offer a powerful solution.

Novita AI is a one-stop platform for limitless creativity that gives you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. With cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.