How to Use Llama 3.1 405B: A Comprehensive Guide

Learn how to use Llama 3.1 405B with our comprehensive guide. Explore tips, tricks, and tutorials on our blog.

Key Highlights

- Llama 3.1 405B, released by Meta in July 2024, is a multilingual large language model with 405 billion parameters, excelling in text generation, translation, and creative content creation.

- Built on a refined decoder-only Transformer architecture, it omits the Mixture-of-Experts (MoE) mechanism for improved stability and employs efficient autoregressive decoding for coherent outputs.

- The training process emphasizes high-quality, diverse data, leveraging synthetic data generation to enhance datasets, ensure privacy, and improve model performance.

- Quantization reduces weight precision (BF16 to FP8), enabling efficient, cost-effective deployment on single servers.

- Key use cases include conversational agents, multilingual translation, marketing content, and industry-specific applications in healthcare, finance, and education.

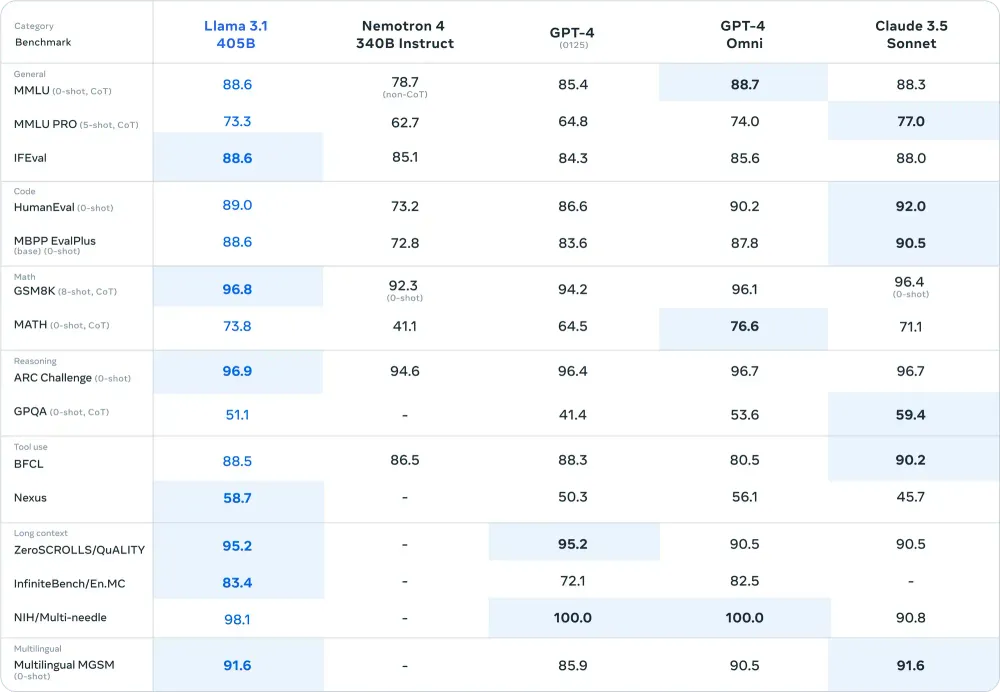

- In Meta's reported results, the model scores higher than competitors like GPT-4 on benchmarks such as the ARC Challenge, pointing to strong reasoning capabilities.

- Accessible via Novita AI with APIs and an interactive chat interface for testing and integration, offering cost-effective solutions for developers and businesses.

Introduction

The world of natural language processing (NLP) is always changing. AI models and the technologies around them, including Nvidia's, keep pushing the limits of what is possible. Generative AI in particular has grown very quickly, and Meta AI's Llama 3.1 405B is a key example of that progress. This guide looks at what Llama 3.1 405B can do, how it can be used, and how it is helping to shape the future of AI-driven language applications.

Understanding Llama 3.1 405b: The Basics

Llama 3.1, released in July 2024 as an update to Llama 3 (April 2024), features the flagship model Llama 3.1 405B, named for its 405 billion parameters.

What Is Meta Llama 3.1 405B?

Llama 3.1 405B is the flagship of Meta's Llama 3.1 collection of multilingual large language models. It reads your questions and generates human-like text in response. With 405 billion parameters, it is one of the largest models available.

Llama 3.1 405B excels in comprehending complex questions, generating creative content, translating languages, and producing various types of text. It is a valuable resource for researchers, developers, and individuals seeking to leverage generative AI for chatbots, multilingual conversational agents, and synthetic data creation.

Watch “Llama 3.1 405B Deep Dive” to gain a deeper understanding of the Llama 3.1 405B model.

How Llama 3.1 405B Works

This section delves into the technical aspects of Llama 3.1 405B, covering its architecture, training methodology, data preparation and optimization strategies.

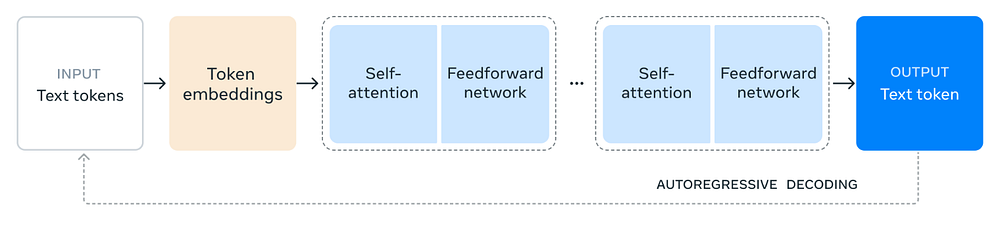

Transformer architecture with tweaks

Llama 3.1 405B employs a standard decoder-only Transformer architecture, which serves as the backbone for many successful large language models. It processes input text through multiple layers, leveraging self-attention mechanisms to understand the relationships between words and their context. This design enables the model to excel in tasks involving language understanding and generation.
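To make the self-attention idea concrete, here is a minimal sketch in plain Python. It is a toy illustration, not Meta's implementation: it assumes the token vectors are used directly as queries, keys, and values, whereas a real Transformer learns separate projections and runs many attention heads in parallel. The function names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i sees only positions <= i."""
    d = len(queries[0])
    outputs = []
    for i, q in enumerate(queries):
        # Decoder-only models hide future positions, so only keys[0..i] are scored.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
                  for k in keys[: i + 1]]
        weights = softmax(scores)
        # Each output is a weighted average of the visible value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values[: i + 1]))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs

# Three toy token vectors (assumption: used as Q, K, and V without projections).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = causal_self_attention(x, x, x)
```

The first token can only attend to itself, so its output equals its own vector. Later tokens blend information from everything that came before them, which is how context accumulates across a sequence.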

While adhering to a conventional framework, Meta AI introduced targeted refinements to enhance the model’s stability and performance:

- Exclusion of Mixture-of-Experts (MoE) architecture: The complex MoE mechanism was deliberately omitted to prioritize stability and scalability during training.

- Efficient autoregressive decoding: The model generates tokens iteratively, constructing coherent language outputs based on context.

These optimizations further bolster Llama 3.1 405B’s training efficiency and task performance, making it highly effective across a wide range of natural language processing applications.
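The autoregressive loop described above can be sketched with a toy stand-in for the model. The lookup table below is entirely hypothetical and scores the next token from the last token only; a real model such as Llama 3.1 405B conditions on the whole context and often samples rather than always taking the top choice.

```python
# Hypothetical toy "model": maps the last token to scores for the next token.
TOY_NEXT_TOKEN_SCORES = {
    "<s>": {"the": 0.9, "a": 0.1},
    "the": {"model": 0.7, "cat": 0.3},
    "model": {"generates": 0.8, "</s>": 0.2},
    "generates": {"text": 0.9, "</s>": 0.1},
    "text": {"</s>": 1.0},
}

def greedy_decode(prompt_tokens, max_new_tokens=10):
    """Append the highest-scoring next token until </s> or the token budget."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        # Unknown tokens fall back to ending the sequence.
        scores = TOY_NEXT_TOKEN_SCORES.get(tokens[-1], {"</s>": 1.0})
        next_token = max(scores, key=scores.get)
        if next_token == "</s>":
            break
        tokens.append(next_token)  # the new token becomes part of the next input
    return tokens

generated = greedy_decode(["<s>"])
```

Each step feeds the sequence generated so far back in as input, which is why output length (the max tokens setting in an API) directly affects latency and cost.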

Multi-phase training process

Training data matters for any machine learning model, and Llama 3.1 405B is no exception. Meta AI invested in both the quality and the quantity of its training data, because a model of this size needs a large and varied set of texts to perform well. Training proceeds in phases: pre-training on that corpus, followed by fine-tuning rounds that refine the model's behavior.

To keep the data clean and useful, Meta AI filtered low-quality content out of the training set. The 405B model also contributes by generating synthetic data: new text examples that can extend existing datasets or form entirely new ones with specific characteristics.

In this way, the model and its data reinforce each other. Meta AI's process also included rigorous safety testing, supported by safeguards such as Llama Guard. The AI community benefits from these ongoing improvements in how data is gathered and prepared.

Data quality and quantity

Meta emphasizes both the quality and quantity of the training data for Llama 3.1 405B. This effort includes a meticulous data preparation process, involving thorough filtering and cleaning to ensure the datasets are of high quality.

Notably, the 405B model is also leveraged to produce synthetic data, which is subsequently used in the training pipeline to enhance the model’s performance further.

Quantization for inference

To enhance the practicality of Llama 3.1 405B for real-world applications, Meta employed a method known as quantization. This process reduces the model’s weight precision from 16-bit (BF16) to 8-bit (FP8), akin to lowering an image’s resolution while retaining its key details.

By halving the memory needed to store each weight and simplifying the model's internal computations, quantization improves efficiency and speed enough for the model to run on a single server. This optimization lowers the cost and complexity of deploying the model for various use cases.
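The trade-off behind quantization can be shown with a small sketch. Note the swap: this example uses 8-bit integer "absmax" quantization as a stand-in, not the FP8 floating-point format described above, because it is easy to write in plain Python. The principle is the same: store each weight in fewer bits and accept a small rounding error. All names are hypothetical.

```python
def quantize_absmax(weights, bits=8):
    """Map floats to signed integers using one shared scale (absmax scheme)."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    # Fall back to a scale of 1.0 if every weight is zero.
    scale = max(abs(w) for w in weights) / qmax or 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate floats from the integers and the scale."""
    return [q * scale for q in quantized]

weights = [0.82, -1.27, 0.05, 0.40]
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)
# Rounding error per weight is at most half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Every stored value now fits in one byte instead of two, and the restored weights differ from the originals by at most half a quantization step.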

Llama 3.1 405B Use Cases

The uses of Llama 3.1 405B are wide and diverse, and they extend to tool use. The model is not just for simple chatbots: because it can understand and write human-like text, it opens opportunities in many areas.

Llama 3.1 405B can power advanced conversational systems, write engaging marketing copy, translate languages with more attention to meaning, and generate creative content. Let's look at some cases where this model stands out.

Synthetic data generation

Training strong, accurate AI models often requires a lot of labeled data. Collecting real-world data can be costly and time-consuming, and it can raise privacy issues. This is where Llama 3.1 405B's ability to create synthetic data becomes useful.

Synthetic data acts like real data and can help in different ways:

- Boosting Model Accuracy: You can use synthetic data to add to current datasets. This helps improve how other machine learning models work, especially in areas with less data.

- Privacy Preservation: Synthetic data lets developers train models on data that resembles sensitive information without exposing real sensitive data, which helps protect privacy.

- Accelerated Software Development: Synthetic data can imitate how users interact with software. This leads to better testing and faster development.
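A synthetic data workflow along these lines might look like the sketch below. It is an illustration under stated assumptions: `generate` stands for any function that sends a prompt to a model and returns its reply, and `stub_generate` is a hypothetical offline stand-in so the example runs without an API key. The prompt wording and the JSON reply format are also assumptions.

```python
import json

def build_synthetic_prompt(label, n_examples):
    """Compose an instruction asking for labeled examples as a JSON list."""
    return (
        f"Write {n_examples} short customer reviews with {label} sentiment. "
        "Return only a JSON list of strings."
    )

def make_dataset(generate, labels, n_examples=2):
    """Call `generate` once per label and collect (text, label) rows."""
    rows = []
    for label in labels:
        reply = generate(build_synthetic_prompt(label, n_examples))
        for text in json.loads(reply):
            rows.append({"text": text, "label": label})
    return rows

def stub_generate(prompt):
    """Stand-in for a real model call so the sketch runs offline."""
    label = "positive" if "positive" in prompt else "negative"
    return json.dumps([f"{label} review {i}" for i in range(2)])

dataset = make_dataset(stub_generate, ["positive", "negative"])
```

In practice you would replace `stub_generate` with a call to the model and validate the replies, since a real model will not always return well-formed JSON.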

Industry-specific solutions

Llama 3.1 405B is flexible and can be adapted to different industries, supporting a wide range of applications. This is because it can be fine-tuned on data specific to those fields.

For example, if you fine-tune the model on a large set of medical journals and research, it becomes a specialized AI assistant that understands complex medical terms and can support doctors with tasks like diagnosis and treatment recommendations.

The same idea works for areas like finance, law, and education. The model's ability to understand and create text tailored to these fields, along with the available resources, allows for the development of specialized, high-value AI solutions.

Why Use Llama 3.1 405B?

- Exceptional Performance: Llama 3.1 shines with outstanding benchmark results, like its 96.9 score on the ARC Challenge, outperforming GPT-4 and Nemotron 4 and highlighting its advanced reasoning skills.

- Adaptability and Efficiency: Llama 3.1 405B is built for versatility and optimized performance, making it ideal for developers and businesses integrating AI into their workflows.

- Cost-effective: Hosting your own model on platforms like Novita AI offers a more affordable alternative to many large closed-model APIs.

How to Use Llama 3.1 405B on Novita AI

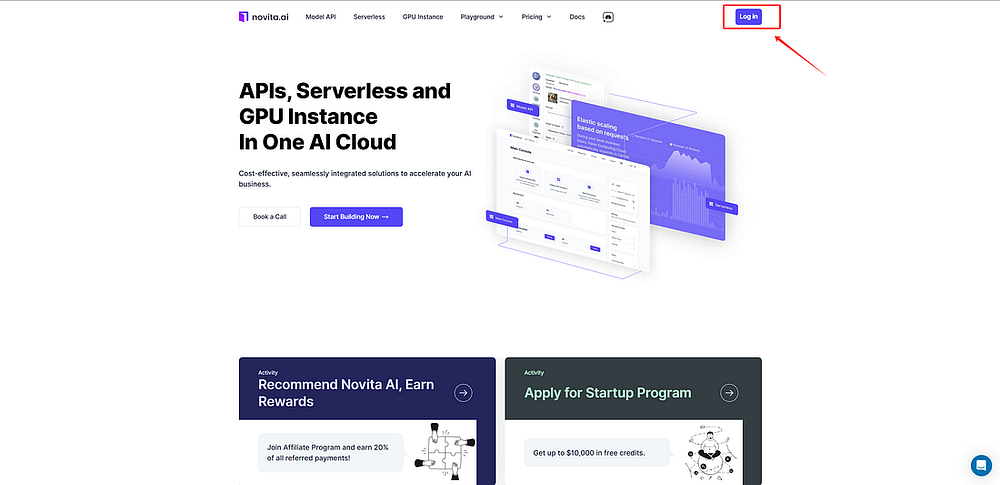

Step-by-Step Guide to Using Llama 3.1 405B on Novita AI LLM API

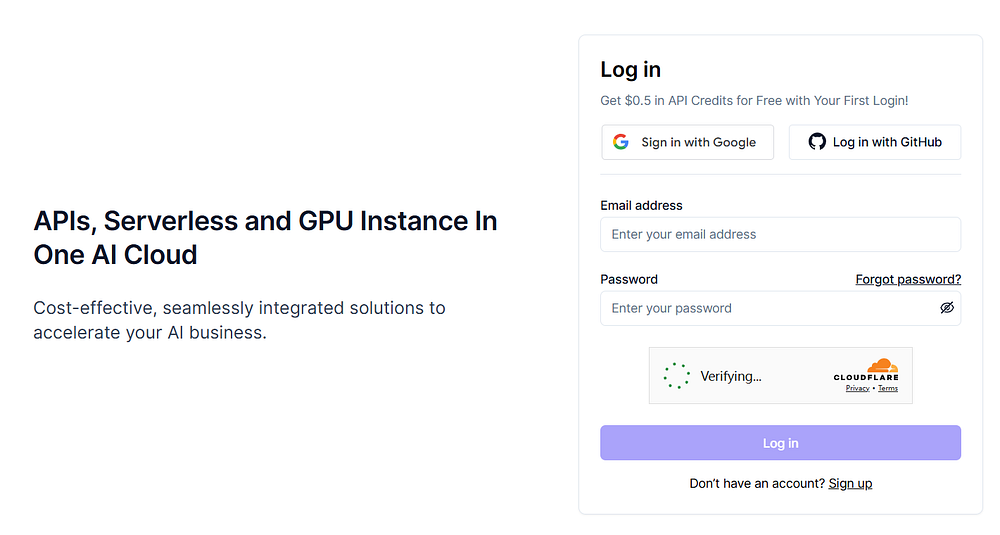

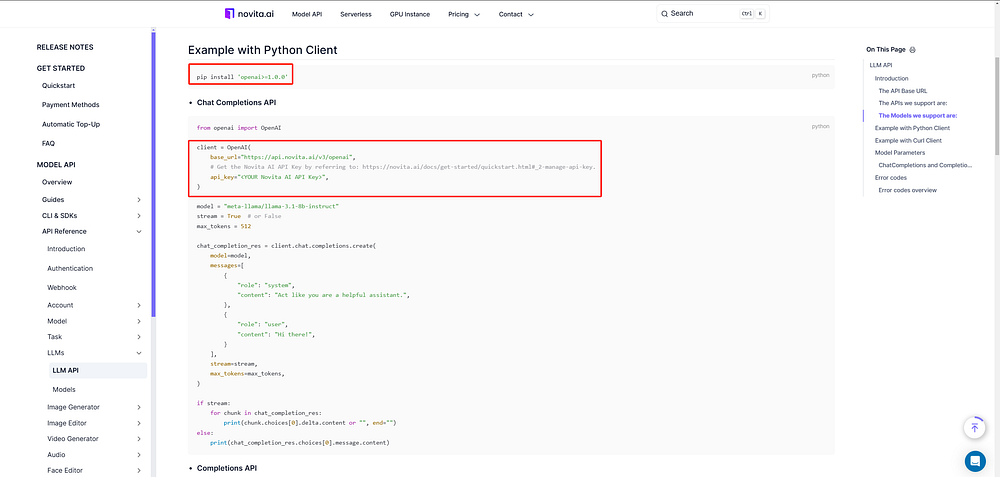

- Step 1: Sign up or Log in: Create an account or log in to your Novita AI account.

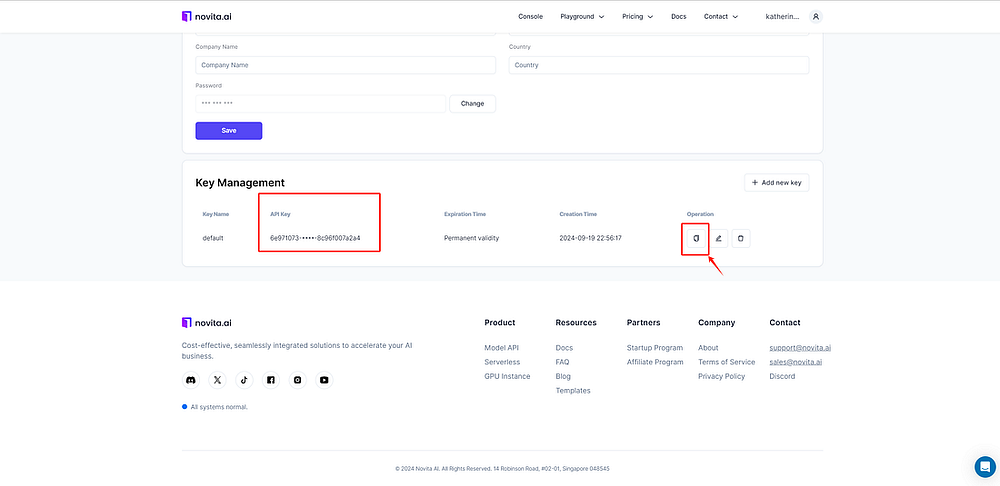

- Step 2: Obtain API Credentials: Navigate to the API Keys section and generate an API key. This key authenticates your requests.

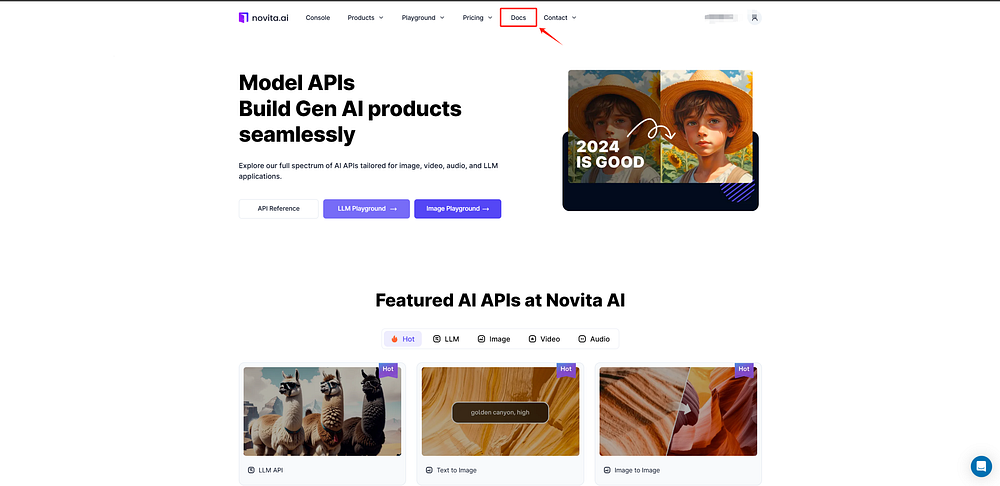

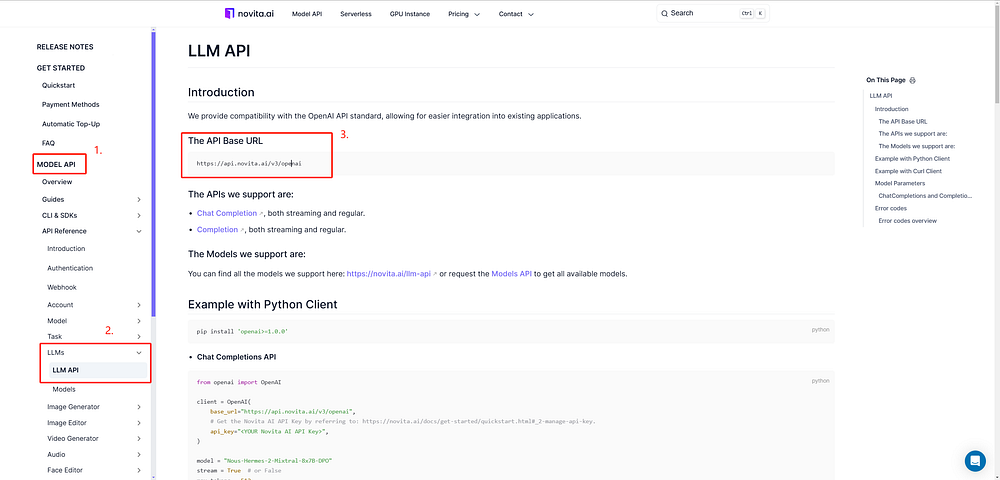

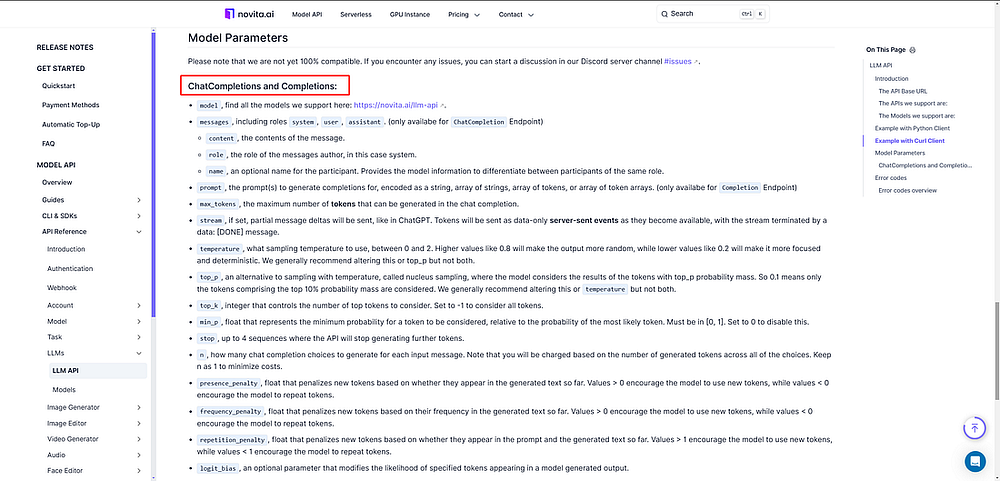

- Step 3: Explore the LLM API documentation: Navigate to “Docs” in the menu, select “Model API,” and find the LLM API section to access the API Base URL.

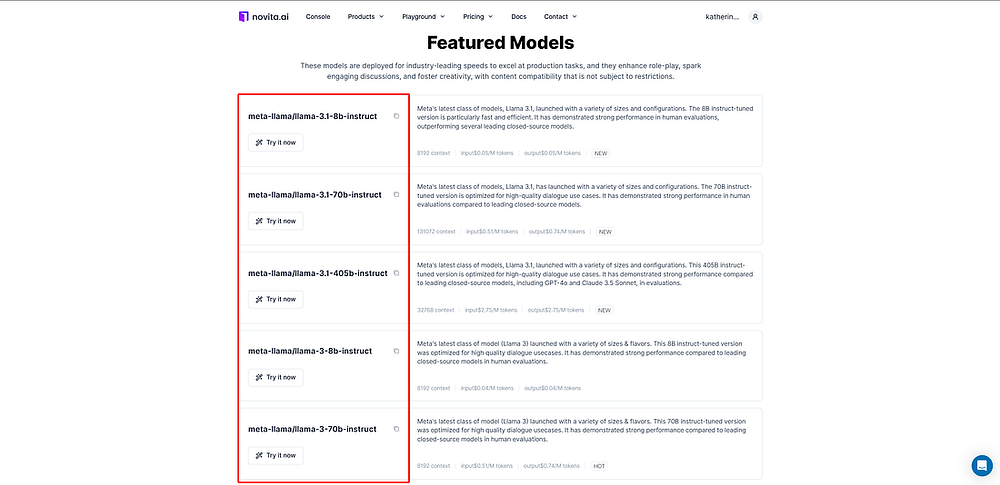

- Step 4: Select Llama 3.1 405B: Choose the Llama 3.1 405B model from the available models in the Novita AI interface, keeping in mind the total cost associated with the selection.

In addition to the Llama 3.1 API, we also provide APIs for various other large language models.

You can find all the models we support in the Novita AI LLM Models List.

- Step 5: Set Parameters: Adjust parameters like temperature and max tokens to control the output’s creativity and length.

- Step 6: Make API Calls: Send your API requests, including your input prompt, to the Novita AI endpoint using your chosen library.
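Steps 5 and 6 can be sketched with Python's standard library. Treat the specifics as assumptions: the endpoint URL, the model id, the OpenAI-style request and response shape, and the `NOVITA_API_KEY` variable name are illustrative, so copy the real values from the LLM API documentation (Step 3) and the model list (Step 4) before running anything.

```python
import json
import os
import urllib.request

# Assumptions: illustrative endpoint and model id; confirm both in the docs.
API_URL = "https://api.novita.ai/v3/openai/chat/completions"
MODEL_ID = "meta-llama/llama-3.1-405b-instruct"

def build_request(prompt, api_key, temperature=0.7, max_tokens=256):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # higher values give more varied output
        "max_tokens": max_tokens,    # upper bound on generated length
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )

def send(request):
    """Send the request and return the first choice's text (assumed shape)."""
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

# Hypothetical environment variable; the placeholder keeps the sketch offline.
api_key = os.environ.get("NOVITA_API_KEY", "YOUR_API_KEY")
request = build_request("Translate 'good morning' to French.", api_key)
# reply = send(request)  # uncomment once a real key and endpoint are set
```

Keeping request construction separate from sending makes it easy to inspect the payload and tune temperature or max tokens before spending any credits.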

Use Llama 3.1 405B on Novita AI LLM Chat

For anyone looking to explore before committing to an API, Novita AI offers a user-friendly chat interface for Llama 3.1 405B. Simply type your prompt, hit enter, and watch it generate human-like text in real time.

Launch the demo: Head to the “Model API” section and select “LLM API” to start exploring the Llama 3 and Mistral models.

Here’s what we offer for Llama 3.1

This chat is ideal for testing various applications, such as content creation or language translation, and reflects Novita AI’s commitment to making advanced AI accessible to everyone.

Whether you’re a seasoned developer or just curious about large language models, the Novita AI chat provides an engaging and insightful introduction.

Conclusion

In conclusion, learning to use Llama 3.1 405B can change how you process and analyze data. The model combines an advanced Transformer design with a multi-step training method that includes fine-tuning, which gives it strong capabilities across many industries. With features like quantization for inference and synthetic data generation to improve smaller models, it can bring accuracy and speed to your projects. Whether you are an expert or just starting out, adding Llama 3.1 405B to your work can take your results to the next level. Try Llama 3.1 405B on the Novita AI LLM API today.

Frequently Asked Questions

Can I use Llama 3.1 405B for commercial projects?

Yes. Meta AI releases Llama 3.1 405B under the Llama 3.1 Community License Agreement, which permits business use without license fees. The exception is very large companies: those with more than 700 million monthly active users must request a separate license from Meta.

What are the limitations of Llama 3.1 405B?

Like other large AI models, Llama 3.1 405B can give imprecise answers and can reflect biases in its training data. Its responses also vary with how a question is phrased. Prompt Guard, a separate safeguard model from Meta, can help screen out harmful instructions.

Is Llama 3.1 405B better than GPT-4o and GPT-4?

Benchmark tests show that Llama 3.1 405B performs similarly to GPT-4o and GPT-4, with strong reasoning capabilities. Determining a superior model is challenging due to performance variations across NLP tasks and measurement methods.

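How much memory does Llama 3.1 405B need?

At 16-bit precision, the weights of Llama 3.1 405B alone take about 810 GB (405 billion parameters at 2 bytes each), so running it requires multiple powerful GPUs. The FP8-quantized version needs roughly half that for the weights. Cloud solutions are often preferred for deployment because they take on these hardware and power demands.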
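The memory figure follows from simple arithmetic, sketched below. The parameter count and the two precisions come from this guide; the server size (8 GPUs with 80 GB each) is an assumption for illustration, and the estimate covers weights only, not activations or the key-value cache.

```python
PARAMS = 405e9  # parameter count stated in this guide

def weight_memory_gb(params, bytes_per_param):
    """Memory for the weights alone, in decimal gigabytes."""
    return params * bytes_per_param / 1e9

bf16_gb = weight_memory_gb(PARAMS, 2)  # 16-bit weights: 2 bytes each
fp8_gb = weight_memory_gb(PARAMS, 1)   # 8-bit weights: 1 byte each

# Assumption for illustration: one server with 8 GPUs of 80 GB each.
node_gb = 8 * 80
fits_bf16 = bf16_gb <= node_gb
fits_fp8 = fp8_gb <= node_gb
```

Under these assumptions the 16-bit weights (810 GB) exceed the 640 GB on one server, while the 8-bit weights (405 GB) fit with room left for runtime overhead.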

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
