How to Train Compute-Optimal Large Language Models?

Introduction

Recently, an LLM with only 70B parameters outperformed GPT-3. This LLM, called Chinchilla, was developed by Hoffmann and his colleagues (2022). In their work, they argue that current LLMs are not compute-optimal. Why is this? How did they train their compute-optimal LLM, Chinchilla? What are the limitations of their approach, and how can we overcome them? In this blog, we will look at these questions one by one.

What Are Compute-Optimal Large Language Models?

The core idea behind a compute-optimal LLM is to strike the right balance between the model size (number of parameters) and the amount of training data used. This is in contrast to previous approaches that increased model size more aggressively than training data, resulting in models that were significantly undertrained relative to their capacity.

What Are the Core Features of a Compute-Optimal LLM?

Feature 1: Balanced Scaling of Model Size and Training Data

Rather than scaling model size exponentially while only incrementally increasing the training data, compute-optimal LLMs increase both model size and training data in equal proportion. This ensures the model capacity is fully utilized by the available training data.

Feature 2: Optimization for Overall Compute Efficiency

The goal is to find the sweet spot between model size and training data that delivers the best performance-per-compute. This allows maximizing the model’s capability within a fixed computational budget, rather than simply pushing model size to new records.
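
To make the "fixed computational budget" concrete, here is a minimal sketch of the trade-off. It assumes the common rule of thumb that training costs roughly 6 FLOPs per parameter per token (C ≈ 6·N·D); that approximation, the function name, and the example budget are assumptions for illustration, not something stated in this post.

```python
# Rule-of-thumb training cost (assumption): C ≈ 6 * N * D FLOPs,
# where N is the parameter count and D is the number of training tokens.
FLOPS_PER_PARAM_TOKEN = 6


def tokens_for_budget(compute_flops: float, n_params: float) -> float:
    """Training tokens affordable for a model of n_params under a fixed budget."""
    return compute_flops / (FLOPS_PER_PARAM_TOKEN * n_params)


budget = 1e23  # hypothetical compute budget in FLOPs
for n_params in (10e9, 30e9, 100e9):
    n_tokens = tokens_for_budget(budget, n_params)
    print(f"{n_params / 1e9:>5.0f}B params -> {n_tokens / 1e9:,.0f}B tokens")
```

Under a fixed budget, every extra parameter is paid for with fewer training tokens, which is why the two have to be balanced rather than maximized independently.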

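Feature 3: Fewer Computational Resources for Fine-Tuning and Inference

Because a compute-optimal model is smaller than a conventionally scaled model trained with the same budget, it needs fewer resources for fine-tuning and inference. This further enhances its efficiency and real-world practicality, as deploying and using the model becomes more cost-effective.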

Are Popular LLMs Compute-Optimal?

Sadly, according to Hoffmann et al. (2022), popular LLMs such as GPT-3 are not compute-optimal. Let’s first go back to the ideas that shaped current LLMs.

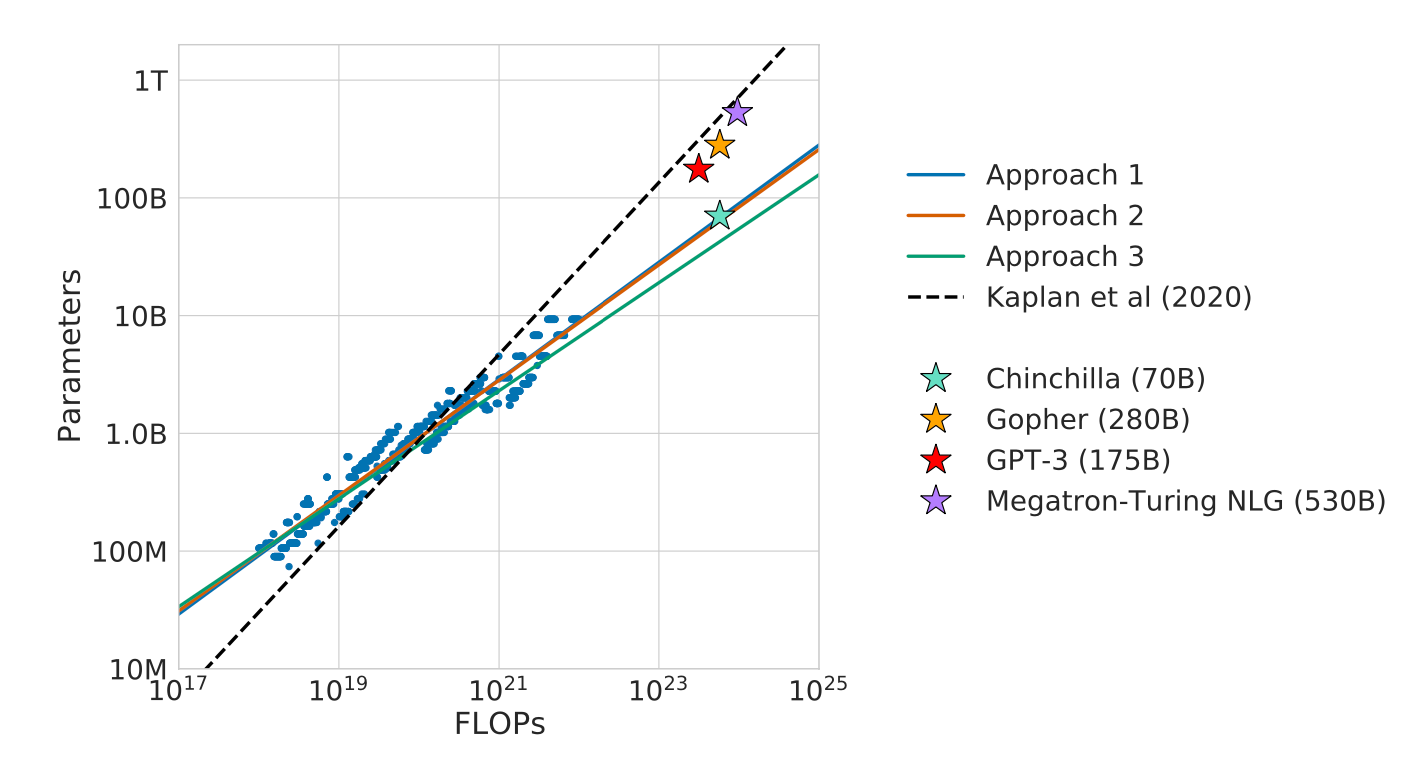

The Focus on the Model Size

Previous research by Kaplan et al. (2020) demonstrated a compelling power-law relationship between language model size and performance. Specifically, they found that as the number of parameters in a model grows, its test loss falls as a smooth power law of model size, as long as training data and compute are not the bottleneck.
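
A small sketch can show what a power law in model size implies. It assumes the form L(N) ∝ N^(-α) with α ≈ 0.076, the exponent Kaplan et al. report for parameter count; the exponent is quoted from their paper rather than from this post, and the function name is made up for illustration.

```python
# Assumption: loss follows L(N) ∝ N ** (-ALPHA_N) when data and compute are
# not limiting. ALPHA_N ≈ 0.076 is the exponent reported by Kaplan et al. (2020).
ALPHA_N = 0.076


def relative_loss(size_multiplier: float, alpha: float = ALPHA_N) -> float:
    """Factor by which the loss changes when model size is multiplied."""
    return size_multiplier ** (-alpha)


for multiplier in (2, 10, 100):
    print(f"{multiplier:>4}x parameters -> loss x {relative_loss(multiplier):.3f}")
```

Each multiplication of model size buys the same relative drop in loss, which is what made "just build bigger models" look like such a reliable recipe.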

This seminal work has had a profound impact on the field of large language models (LLMs), leading researchers and engineers to focus heavily on scaling up model size as the primary axis of improvement. The logic was clear — if performance scales so predictably with model size, then the path to better LLMs must be to simply build bigger and bigger models.

Refocusing on the Amount of Training Data

Hoffmann et al. (2022) argue that this singular focus on model scaling has come at a significant cost. They posit that current state-of-the-art LLMs are in fact severely undertrained, with the research emphasis placed squarely on increasing model size rather than proportionally increasing the amount of training data.

This critique is a crucial contribution of their paper. The authors contend that the field has lost sight of the fundamental model-data trade-off, becoming preoccupied with pushing model size to new records without ensuring those models are trained on a commensurate amount of high-quality data. The result, they argue, is a situation where LLMs may have impressive parameter counts, but are ultimately suboptimal in their performance given the compute resources invested in their training.

By refocusing attention on this core trade-off between model capacity and training data, the authors set the stage for their empirical investigation into the truly optimal balance between these two key factors. Their findings, detailed in the following sections, offer a new paradigm for developing compute-efficient large language models.

How to Train Compute-Optimal Large Language Models?

In this section, we will dive deeper into Hoffmann et al.’s (2022) paper titled “Training Compute-Optimal Large Language Models”. As always, if research details sound too nerdy for you, just take this conclusion and skip this section: for compute-optimal training, model size and number of training tokens should be scaled equally — for every doubling of model size, the number of training tokens should also double.

Empirically Estimating the Optimal Model-Data Trade-off

To investigate the optimal trade-off between model size and training data, the authors train over 400 models ranging from 70 million to over 16 billion parameters on 5 to 500 billion tokens. They model the final pre-training loss as a function of both model size and number of training tokens.
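
As a rough illustration of modelling the loss as a function of both quantities, the sketch below uses the parametric form L(N, D) = E + A / N^α + B / D^β. The constants are the fitted values reported in the paper for one of its estimation approaches; they are quoted here as an assumption and should be treated as illustrative. The two configurations compared are hypothetical and chosen only because they have the same N·D product, and therefore roughly the same training cost.

```python
# Parametric loss (assumed form and constants, quoted from Hoffmann et al. 2022):
#   L(N, D) = E + A / N**ALPHA + B / D**BETA
E, A, B = 1.69, 406.4, 410.7
ALPHA, BETA = 0.34, 0.28


def predicted_loss(n_params: float, n_tokens: float) -> float:
    """Predicted final pre-training loss for a model size and token count."""
    return E + A / n_params**ALPHA + B / n_tokens**BETA


# Two hypothetical configurations with the same N * D (same approximate cost).
large_undertrained = predicted_loss(280e9, 300e9)
smaller_more_data = predicted_loss(70e9, 1.2e12)

print(f"280B params, 0.3T tokens: {large_undertrained:.3f}")
print(f" 70B params, 1.2T tokens: {smaller_more_data:.3f}")
```

With these constants the smaller model trained on more tokens gets the lower predicted loss, which is the qualitative point of the paper.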

Key Findings

The authors find that for compute-optimal training, model size and number of training tokens should be scaled equally — for every doubling of model size, the number of training tokens should also be doubled. This contrasts with the recommendations of Kaplan et al., who suggested a smaller increase in training tokens compared to model size.
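
The difference between the two recommendations is easiest to see with numbers. The sketch below assumes the optimal sizes scale as N ∝ C^a and D ∝ C^b, with a = b = 0.5 for Hoffmann et al. and a = 0.73, b = 0.27 for Kaplan et al. (the exponents as compared in the Hoffmann paper); these values and the helper name are assumptions not given in this post.

```python
def scale_factors(compute_multiplier: float, param_exponent: float,
                  token_exponent: float) -> tuple[float, float]:
    """How much to grow parameters and tokens when compute grows."""
    return (compute_multiplier**param_exponent,
            compute_multiplier**token_exponent)


# Assumed exponents: (a, b) in N ∝ C**a, D ∝ C**b.
hoffmann = scale_factors(100, 0.50, 0.50)
kaplan = scale_factors(100, 0.73, 0.27)

print(f"Hoffmann et al.: params x{hoffmann[0]:.1f}, tokens x{hoffmann[1]:.1f}")
print(f"Kaplan et al.:   params x{kaplan[0]:.1f}, tokens x{kaplan[1]:.1f}")
```

For a 100x larger budget, equal scaling grows both quantities tenfold, whereas the older recommendation puts most of the extra compute into parameters.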

Training a Compute-Optimal Model: Chinchilla

Applying their findings, the authors train a 70 billion parameter model called Chinchilla, using the same compute budget as the 280 billion parameter Gopher model. Chinchilla significantly outperforms Gopher, GPT-3, Jurassic-1, and Megatron-Turing NLG on a wide range of downstream tasks, while also requiring substantially less compute for fine-tuning and inference.
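
As a back-of-the-envelope check, the sketch below sizes a model for Gopher's budget. It rests on several assumptions that are not in this post: training cost C ≈ 6·N·D, Gopher having been trained on about 300 billion tokens, and the commonly quoted rule of thumb of about 20 training tokens per parameter for compute-optimal models.

```python
import math

TOKENS_PER_PARAM = 20  # assumed rule of thumb for compute-optimal training


def compute_optimal_size(compute_flops: float) -> tuple[float, float]:
    """Return (parameters, tokens) satisfying C = 6*N*D and D = 20*N."""
    n_params = math.sqrt(compute_flops / (6 * TOKENS_PER_PARAM))
    return n_params, TOKENS_PER_PARAM * n_params


# Gopher's approximate budget: 280B parameters, ~300B tokens (assumption).
gopher_budget = 6 * 280e9 * 300e9
n_params, n_tokens = compute_optimal_size(gopher_budget)

# Per-token inference cost grows roughly linearly with parameter count.
inference_ratio = 280e9 / n_params

print(f"params: {n_params / 1e9:.0f}B, tokens: {n_tokens / 1e12:.2f}T")
print(f"Gopher needs about {inference_ratio:.1f}x more compute per generated token")
```

The estimate lands close to Chinchilla's 70 billion parameters, and it also shows why the smaller model is several times cheaper to serve.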

Concluding Remarks

The paper demonstrates that current large language models are significantly undertrained, and provides a principled approach to determining the optimal model size and training data for a given compute budget. This has important implications for the efficient development of future large-scale language models.

If you want to know more technical details, feel free to read the original paper.

Limitations of the Approach of Training Compute-Optimal Large Language Models

Although the approach outlined in this article on compute-optimal large language models (LLMs) presents a compelling theoretical framework, there are a few potential limitations:

Availability of Vast Training Data

- The principles rely on having access to extremely large, high-quality datasets to train the models.

- Acquiring and curating such massive datasets can be challenging, time-consuming, and costly.

- This may limit the ability to practically implement the approach, especially for smaller research teams or organizations.

Hardware and Compute Constraints

- Training very large models with proportional amounts of data requires immense computational resources.

- Access to the necessary hardware (e.g. powerful GPUs, TPUs) and the required electricity/cooling infrastructure may be a limiting factor.

- The overall compute costs associated with this approach could be prohibitive for many.

Domain-Specific Performance

- The article focuses on general-purpose language models, but the optimal balance of model size and training data may vary for models targeting specific domains or tasks.

- Certain applications may require a different trade-off approach to achieve the best results.

Limited Empirical Validation at Scale

- Although the paper fits its scaling laws on over 400 training runs, Chinchilla is the only model that tests the prediction at the largest scale, so the recommendation rests on extrapolation from smaller models.

- Further research and real-world implementation would be needed to validate the claims and quantify the benefits at other scales.

Potential Societal Impacts

- Scaling up model size and training data could exacerbate concerns around AI safety, security, and the environmental impact of large-scale machine learning.

- These societal implications are not addressed in the article and would require careful consideration.

Overall, practical implementation of the compute-optimal LLM approach may face significant challenges related to data, hardware, domain-specificity, and broader impact considerations. Empirical evaluation and further research would be needed to fully assess its feasibility and benefits.

An Alternative Way to Get Better LLM Performance

While the compute-optimal approach outlined earlier presents a compelling framework for developing high-performing LLMs, there is an alternative solution that can offer even greater flexibility and efficiency: LLM APIs.

Instead of relying on a single, fixed LLM, the Novita AI LLM API provides access to a diverse range of language models, each with its own capabilities and areas of specialization. This allows users to select the most appropriate model for their specific needs.

Moreover, the Novita AI Model API lets users adjust key sampling parameters: top p (restricts sampling to the most probable tokens whose cumulative probability reaches p), temperature (controls how random the model’s token choices are), max tokens (caps the length of the model’s output), and presence penalty (penalizes tokens that have already appeared, encouraging more varied text). This level of customization lets users tune the LLM’s output to match the requirements of each project or use case, resulting in better-tailored results.
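
To give a feel for what temperature and top p do, here is a toy sketch of the two steps applied to a handful of raw token scores. It is a generic illustration of these sampling techniques, not the implementation of any particular API; all function names are made up for this example, and max tokens and presence penalty are left out for brevity.

```python
import math
import random


def apply_temperature(logits: list[float], temperature: float) -> list[float]:
    """Softmax over logits / temperature; lower values sharpen the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float) -> dict[int, float]:
    """Keep the most probable tokens until their cumulative mass reaches top_p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}  # renormalized


def sample_token(logits, temperature=1.0, top_p=1.0, rng=random) -> int:
    """Sample one token index after temperature scaling and top-p filtering."""
    nucleus = top_p_filter(apply_temperature(logits, temperature), top_p)
    tokens = list(nucleus)
    weights = [nucleus[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


print(sample_token([2.0, 1.0, 0.0], temperature=0.7, top_p=0.9))
```

Lowering the temperature or top p makes the output more predictable; raising them lets less likely tokens through.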

In addition to adjustable parameters, another standout feature of the Novita AI Model API is its support for system prompt input. Users can provide custom prompts or templates to guide the language model’s behavior, allowing for more directed and purposeful responses. This can be particularly valuable for applications that require a specific tone, style, or domain-specific knowledge.
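
As an illustration of how a system prompt and the sampling parameters might travel together, the sketch below builds a request body in the widely used chat-completions style. The field names, the `build_chat_request` helper, and the `example-model` identifier are all assumptions for illustration; check the provider's API reference for the actual schema before sending anything.

```python
import json


def build_chat_request(system_prompt: str, user_message: str, *,
                       model: str = "example-model",  # hypothetical model id
                       temperature: float = 0.7, top_p: float = 0.9,
                       max_tokens: int = 256,
                       presence_penalty: float = 0.0) -> dict:
    """Assemble a chat-style request body (assumed schema, no network call)."""
    return {
        "model": model,
        "messages": [
            # The system message sets tone, style, or domain constraints.
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,
        "top_p": top_p,
        "max_tokens": max_tokens,
        "presence_penalty": presence_penalty,
    }


payload = build_chat_request(
    "You are a concise assistant that explains ML papers to beginners.",
    "Summarize the main finding of the Chinchilla paper in two sentences.",
)
print(json.dumps(payload, indent=2))
```

Keeping the system prompt separate from the user message makes it easy to reuse the same behavior across many requests.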

Conclusion

The work by Hoffmann et al. represents a significant step towards optimizing the training of large language models within practical computational constraints. Their core idea of balancing model capacity and training data scale is both theoretically grounded and empirically validated through their Chinchilla model. By avoiding the pitfalls of severe undertraining, this compute-optimal approach unlocks new levels of performance and efficiency compared to prior state-of-the-art LLMs like GPT-3.

However, implementing such compute-optimal training at scale is not without challenges. Curating the staggeringly large, high-quality datasets required is difficult. The availability of sufficient computational resources, from hardware to energy costs, may also hamper adoption, especially for smaller organizations. An alternative approach that provides more flexibility is to leverage advanced language model APIs like the Novita AI Model API. These APIs give users access to a diverse range of pretrained models tailored for different use cases.

Novita AI is a one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.
