How Much RAM Memory Does Llama 3.1 70B Use?

Explore the RAM requirements of Llama 3.1 70B, its hardware needs, and optimization techniques. Learn how to efficiently deploy this powerful language model for AI applications.

The Llama 3.1 70B model, a cutting-edge language model in the AI landscape, has garnered significant attention for its impressive capabilities. However, with great power comes substantial hardware requirements, particularly in terms of RAM usage.

This article delves into the specifics of Llama 3.1 70B’s memory consumption, hardware needs, and optimization strategies. Whether you’re a developer looking to implement this model or an AI enthusiast curious about its technical aspects, this comprehensive guide will provide valuable insights into efficiently utilizing Llama 3.1 70B.

Table of Contents

- How Much Memory Does Llama 3.1 Require

- Hardware Specifications for Optimal Performance

- GPU Considerations for Llama 3.1 70B

- Optimizing Memory Usage: Techniques and Strategies

- How to Run Llama 3.1 with Novita AI

How Much Memory Does Llama 3.1 Require?

Llama 3.1 introduces exciting advancements, but running it necessitates careful consideration of your hardware resources. We have detailed the memory requirements for both training and inference across the three model sizes.

Inference Memory Requirements

For inference, the memory requirements vary based on the model size and the precision of the weights. Below is a table showing the approximate memory needed for different configurations:

| Model Size | FP16 | FP8 | INT4 |

|---|---|---|---|

| 8B | 16 GB | 8 GB | 4 GB |

| 70B | 140 GB | 70 GB | 35 GB |

| 405B | 810 GB | 405 GB | 203 GB |

Note: The above numbers indicate the GPU VRAM required just to load the model checkpoint. They do not include the torch reserved space for kernels or CUDA graphs.

For example, an H100 node (with 8x H100) has approximately 640 GB of VRAM, so the 405B model would need to be run in a multi-node setup or at a lower precision (e.g., FP8), which is the recommended approach.

Keep in mind that lower precision (e.g., INT4) may result in some loss of accuracy but can significantly reduce memory requirements and increase inference speed. In addition to the model weights, you will also need to keep the KV Cache in memory. It contains keys and values of all the tokens in the model’s context such that they don’t need to be recomputed when generating a new token. Especially when making use of the long available context length, it becomes a significant factor. In FP16, the KV cache memory requirements are:

| Model Size | 1k tokens | 16k tokens | 128k tokens |

|---|---|---|---|

| 8B | 0.125 GB | 1.95 GB | 15.62 GB |

| 70B | 0.313 GB | 4.88 GB | 39.06 GB |

| 405B | 0.984 GB | 15.38 GB | 123.05 GB |

Especially for the small model, the cache uses as much memory as the weights when approaching the context length maximum.

Training Memory Requirements

The following table outlines the approximate memory requirements for training Llama 3.1 models using different techniques:

| Model Size | Full Fine-tuning | LoRA | Q-LoRA |

|---|---|---|---|

| 8B | 60 GB | 16 GB | 6 GB |

| 70B | 500 GB | 160 GB | 48 GB |

| 405B | 3.25 TB | 950 GB | 250 GB |

Note: These are estimated values and may vary based on specific implementation details and optimizations.

Factors Affecting RAM Usage

Several factors can significantly impact the RAM usage of Llama 3.1 70B:

Batch Size: Larger batch sizes require more memory because more data needs to be processed simultaneously. Reducing the batch size can help decrease memory usage.

Model Precision: The precision of the model weights (such as using 32-bit floating point vs. 16-bit floating point or 8-bit precision) can also impact memory usage.

Hardware Configuration: The type of hardware used for inference (e.g., GPU vs. CPU) plays a significant role in how much memory is required. For large models, GPUs with high memory bandwidth are commonly used due to their ability to handle parallel processing efficiently.

Distributed Setup: With distributed computing, the model is divided across multiple devices, reducing the memory burden on any single machine.

Hardware Specifications for Optimal Performance

To harness the full potential of Llama 3.1 70B, specific hardware configurations are recommended. Let's break down the key components and their requirements.

RAM Specifications

As discussed earlier, the base memory requirement for Llama 3.1 70B exceeds 140GB. However, for smooth operation and to account for additional memory needs, a system with at least 256GB of RAM is recommended. This provides ample headroom for:

- Loading the model

- Handling large input sequences

- Performing intermediate computations

- Managing output generation

For production environments or research settings where multiple instances of the model might be run simultaneously, systems with 512GB or even 1TB of RAM are not uncommon.

CPU Requirements

While GPUs handle most of the heavy lifting in AI computations, a powerful CPU is still crucial for:

- Data preprocessing

- Managing model loading and unloading

- Handling I/O operations

- Coordinating multi-GPU setups

For optimal performance, consider high-end server-grade CPUs with:

- Multiple cores (32+ cores)

- High clock speeds (3.0+ GHz)

- Large cache sizes

Intel Xeon or AMD EPYC processors are popular choices for systems running large language models like Llama 3.1 70B.

Storage Considerations

Fast storage is essential for quick model loading and efficient data handling. Recommendations include:

- NVMe SSDs with capacities of 1TB or more

- RAID configurations for improved I/O performance

- High-speed network storage solutions for distributed setups

The model itself, including all necessary files and potential fine-tuned versions, can occupy several hundred gigabytes of storage space.

Cooling and Power Supply

Running Llama 3.1 70B generates significant heat and requires substantial power. Ensure your setup includes:

- Efficient cooling systems (liquid cooling for GPUs is often preferred)

- High-wattage power supplies (1200W or higher, depending on the full system configuration)

- Proper ventilation for the entire system

Network Infrastructure

For distributed computing setups or when serving the model through APIs, consider:

- High-speed network interfaces (10 Gbps Ethernet or higher)

- Low-latency network switches

- Sufficient bandwidth for data transfer and model serving

By meeting these hardware specifications, you can ensure that Llama 3.1 70B operates at its full potential, delivering optimal performance for your AI applications.

GPU Considerations for Llama 3.1 70B

Graphics Processing Units (GPUs) play a crucial role in the efficient operation of large language models like Llama 3.1 70B. Their parallel processing capabilities significantly accelerate computations, making them indispensable for both training and inference tasks.

VRAM Requirements

The VRAM (Video RAM) on GPUs is a critical factor when working with Llama 3.1 70B. The model's enormous size means that standard consumer GPUs are insufficient for running it at full precision. Here's a breakdown of VRAM considerations:

-

Minimum VRAM: To load the full model in FP16 precision (which halves the memory requirement compared to FP32), you would need at least 140GB of VRAM. This exceeds the capacity of even the most powerful consumer GPUs.

-

Recommended VRAM: For optimal performance and to accommodate additional memory needs during processing, a total VRAM of 200GB or more is ideal.

-

Multi-GPU Setups: Due to these high requirements, multi-GPU configurations are common. For example, a setup with 4 x 48GB GPUs (totaling 192GB of VRAM) could potentially handle the model efficiently.

Suitable GPU Models

Several high-end GPU models are capable of running Llama 3.1 70B, either individually or in multi-GPU configurations:

-

NVIDIA A100: With 80GB of HBM2e memory, this is one of the few single GPUs that can handle the model, albeit with some optimizations.

-

NVIDIA A40: Offering 48GB of GDDR6 memory, these are often used in multi-GPU setups.

-

NVIDIA H100: The latest in NVIDIA's data center GPU lineup, providing 80GB of HBM3 memory and enhanced AI performance.

-

AMD Instinct MI250: With 128GB of HBM2e memory, this GPU can potentially run the model on a single card, though software compatibility should be verified.

GPU Memory Bandwidth

Apart from raw VRAM capacity, memory bandwidth is crucial for efficient model operation. The aforementioned GPUs offer high memory bandwidths:

- A100: Up to 2,039 GB/s

- H100: Up to 3,350 GB/s

- MI250: Up to 3,276 GB/s

Higher bandwidth allows for faster data transfer between GPU memory and processing units, which is essential for the complex operations involved in running Llama 3.1 70B.

Optimization Techniques for GPUs

To maximize GPU utilization and potentially run the model on systems with less VRAM, several techniques can be employed:

-

Mixed Precision Training: Using a combination of FP16 and FP32 computations can reduce memory usage while maintaining accuracy.

-

Gradient Checkpointing: This technique trades computation for memory by recomputing certain values during the backward pass instead of storing them.

-

Model Parallelism: Distributing the model across multiple GPUs allows for running larger models than what a single GPU's memory can accommodate.

-

Attention Optimizations: Implementing efficient attention mechanisms can significantly reduce memory usage and computation time.

-

Quantization: Converting the model to lower precision formats (like INT8) can dramatically reduce memory requirements, though potentially at the cost of some accuracy.

By leveraging these GPU considerations and optimization techniques, it's possible to run Llama 3.1 70B efficiently, even on hardware setups that might initially seem insufficient. The key lies in balancing the trade-offs between performance, accuracy, and resource utilization.

For developers looking to implement Llama 3.1 70B or other large language models in their projects, Novita AI's Quick Start guide provides comprehensive instructions on setting up and optimizing LLM APIs, ensuring efficient utilization of available hardware resources.

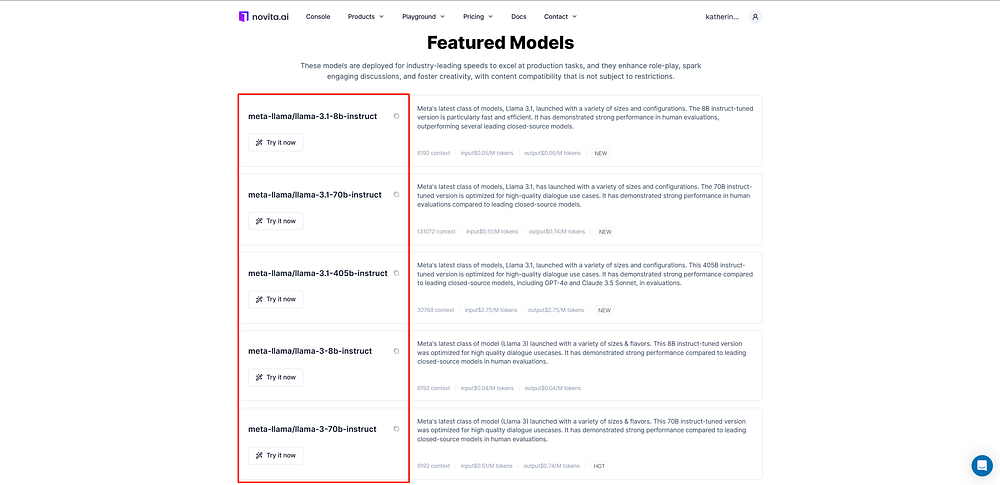

How to Run Llama 3.1 with Novita AI

Whether you are building an AI-powered customer service chatbot, a smart language translation tool, or a resume editing tool, Novita AI’s API makes integration simple. This allows developers to focus on their main tasks while utilizing all the features of Llama 3.1, without worrying about the complexities of managing the system.

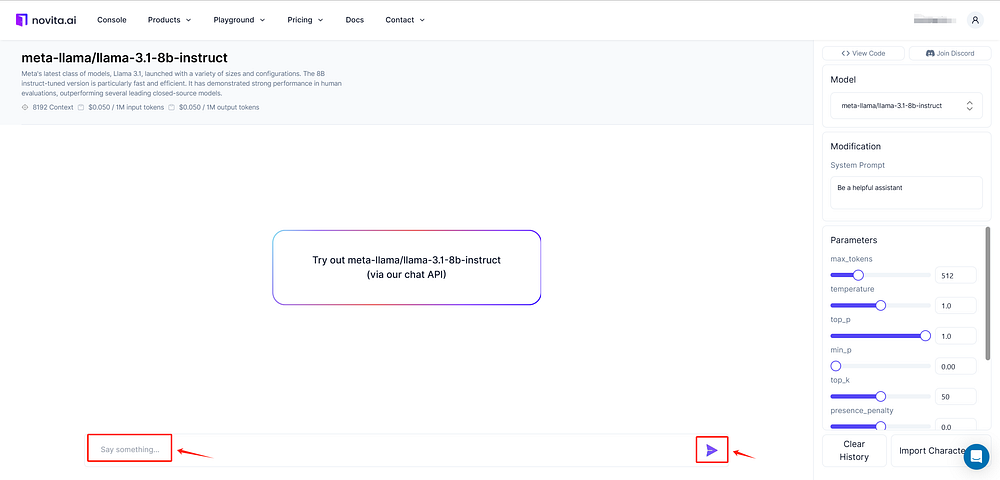

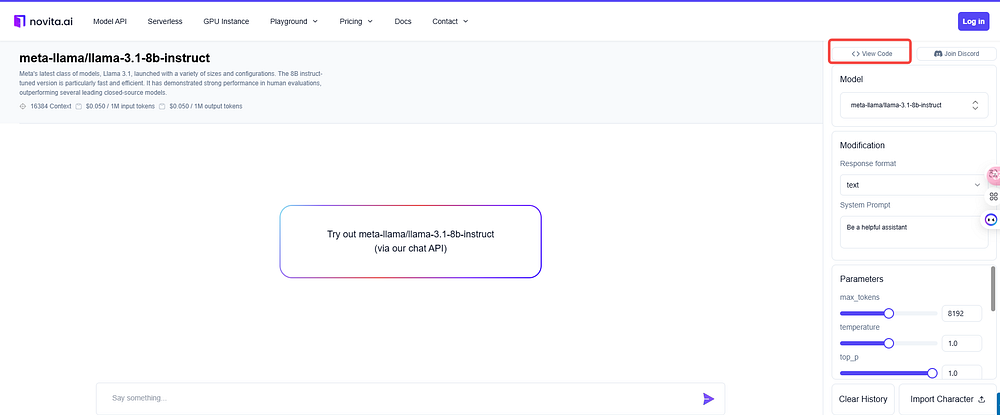

Before you officially integrate the Llama 3.1 API, you can give it a try online with Novita AI. Here’s how to get started with Novita AI’s Llama online:

Step 1: Select the Llama model that is desired for utilization and assess its capabilities.

Step 2: Enter the desired prompt into the designated field. This area is intended for the text or question to be addressed by the model.

Step 3: Get the model response for the given chat conversation.

API Reference Sample

from openai import OpenAI

client = OpenAI(

base_url="https://api.novita.ai/v3/openai",

# Get the Novita AI API Key by referring: /docs/get-started/quickstart.htmll#_3-create-an-api-key

api_key="<YOUR Novita AI API Key>",

)

model = "meta-llama/llama-3.1-8b-instruct"

stream = True # or False

max_tokens = 8192

chat_completion_res = client.chat.completions.create(

model=model,

messages=[

{

"role": "system",

"content": "Act like you are a helpful assistant.",

},

{

"role": "user",

"content": "Hi there!",

}

],

stream=stream,

max_tokens=max_tokens,

)

if stream:

for chunk in chat_completion_res:

print(chunk.choices[0].delta.content or "", end="")

else:

print(chat_completion_res.choices[0].message.content)

Frequently Asked Questions

How much RAM is needed to run Llama 3.1 70B?

Running Llama 3.1 70B typically requires 64 GB to 128 GB of system RAM for inference, depending on factors such as batch size and model implementation specifics.

How much memory does Llama 2 70B need?

Llama 2 70B generally requires a similar amount of system RAM as Llama 3.1 70B, with typical needs ranging from 64 GB to 128 GB for effective inference.

How much space does Llama 3.1 take?

Llama 3.1 requires significant storage space, potentially several hundred gigabytes, to accommodate the model files and any additional resources necessary for operation.

How much VRAM is needed to run Llama 3.1 8B?

For Llama 3.1 8B, a smaller variant of the model, you can typically expect to need significantly less VRAM compared to the 70B version, but it still depends on the specific implementation and precision used.

How is 32GB RAM considered for running Llama models?

32GB of RAM is generally insufficient for running large models like Llama 3.1 70B. However, it might be suitable for smaller versions or highly optimized setups.

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.

Recommended Reading

1.Llama 3.1 405B Inference Service Deployment: Beginner's Guide

2.Decoding Llama 3 vs 3.1: Which One Is Right for You?

3.What Llama 3.1 Can Do: Mastering Its Features and Applications