Enhance Your Projects with Llama 3.1 API Integration

Key Highlights

- Llama 3.1 API offers seamless integration of advanced AI capabilities.

- Access state-of-the-art language model functionalities for enhanced applications.

- Available on platforms like Replicate for easy implementation.

- Leverage AI for text generation, complex query responses, and more.

- Streamline development processes and unlock new possibilities with AI.

Introduction

Llama 3.1, developed by Meta, is a major advance in AI, with stronger natural language processing across multiple languages. It is available through APIs that make it easy to integrate into diverse applications, from chatbots to content generation systems. Businesses can use Llama 3.1 to build AI-driven solutions that streamline processes, improve communication and productivity, and enhance user experiences.

Understanding the Llama 3.1 Model

Llama 3.1 is a big step forward in language model technology. It is trained on a large dataset and uses techniques such as model distillation, which help it perform strongly across many benchmark datasets. The model can understand and generate text that reads as if a person wrote it, which makes it useful for a wide range of applications.

Llama 3.1 is also built to handle complex, nuanced language. It does well on tasks that require a solid grasp of context, and its ability to give relevant answers makes it a strong tool for developers building advanced AI-powered solutions.

Key Features of Meta Llama 3.1

- Largest Model Size: The flagship Llama 3.1 model has 405 billion parameters, making it the largest openly available AI model at the time of its release. This scale helps it understand and process complex information. Llama 3.1 also comes in smaller 8B and 70B sizes.

- Multi-Language Support: The model supports eight languages, including English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai, making it highly versatile for global applications.

- Increased Context Length: Llama 3.1 can handle contexts up to 128K tokens, which is particularly useful for long-form content generation and complex problem-solving.

- Faster Response Time: Llama 3.1 is reported to respond 35% faster than its predecessors thanks to improved algorithms, which enhances usability and efficiency.
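Because the 128K-token window is a hard limit, it helps to check input size before sending a request. The sketch below assumes a rough heuristic of about 4 characters per English token (an assumption; real counts depend on the Llama 3.1 tokenizer) and splits oversized text into chunks. The names `estimate_tokens` and `chunk_text` are illustrative, not part of any Llama API.

```python
# Rough pre-flight check against Llama 3.1's 128K-token context window.
# ASSUMPTION: ~4 characters per token, a common rule of thumb for English text;
# use the model's real tokenizer when exact counts matter.

CONTEXT_LIMIT = 128_000  # tokens, per the 128K figure above
CHARS_PER_TOKEN = 4      # heuristic, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count of `text`, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def chunk_text(text: str, max_tokens: int = CONTEXT_LIMIT) -> list[str]:
    """Split `text` into pieces whose estimated size is at most `max_tokens`."""
    max_chars = max_tokens * CHARS_PER_TOKEN
    return [text[i:i + max_chars] for i in range(0, len(text), max_chars)]


if __name__ == "__main__":
    doc = "word " * 200_000          # 1,000,000 characters
    print(estimate_tokens(doc))      # 250000
    print(len(chunk_text(doc)))      # 2
```

In practice, leave headroom below the limit for the prompt template and the generated output, since both count toward the same window.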

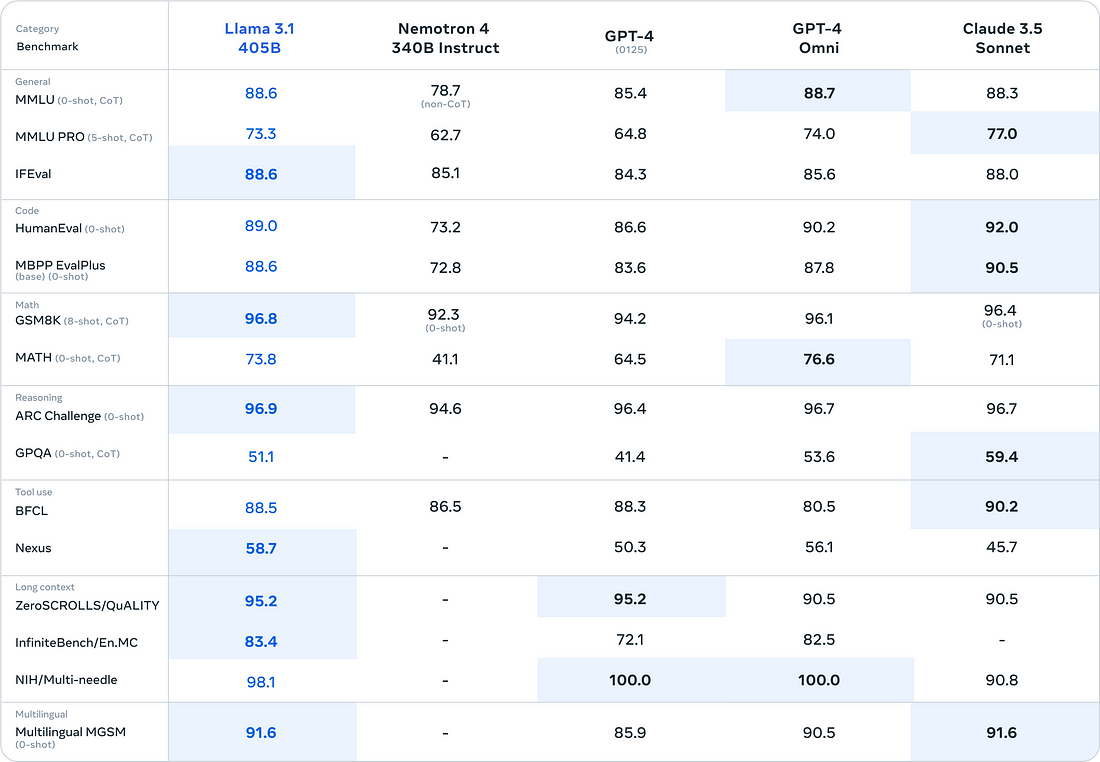

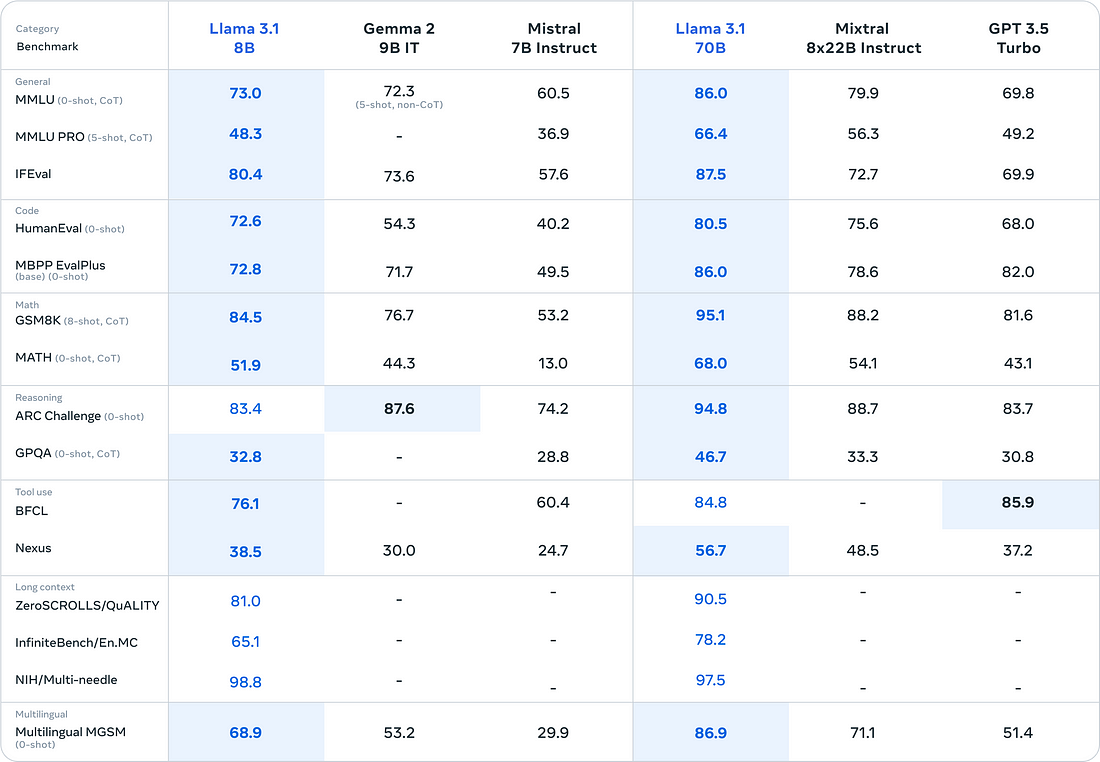

Model evaluations

What Is the Llama 3.1 API?

The Llama 3.1 API is built on the Llama Stack API and lets developers add Llama 3.1's capabilities to their apps. With it, you can use pre-trained Llama 3.1 models without handling the hard parts of model training and deployment yourself.

The API follows a simple request/response pattern: you send a prompt in a request and receive the generated text in the response. You can use it for creative writing, language translation, or answering questions, which makes it a flexible and scalable option for developers' AI needs.
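As a minimal sketch of that request/response pattern, the code below builds a chat-style JSON request and extracts the reply. It assumes the OpenAI-compatible chat-completions schema (a `messages` list of `role`/`content` objects, with the reply under `choices[0].message.content`) that many hosting providers expose; check your provider's reference for exact fields. The helper names, the model ID, and the sample response are illustrative.

```python
import json


def build_chat_request(prompt: str, model: str, max_tokens: int = 256) -> str:
    """Serialize a single-turn chat request as a JSON string."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return json.dumps(payload)


def extract_reply(response_body: str) -> str:
    """Pull the assistant's text out of a chat-completions JSON response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    body = build_chat_request("Translate 'hello' into French.",
                              "meta-llama/llama-3.1-70b-instruct")
    print(body)
    # Hypothetical response shaped like an OpenAI-compatible reply
    sample = '{"choices": [{"message": {"role": "assistant", "content": "Bonjour"}}]}'
    print(extract_reply(sample))  # Bonjour
```

Keeping request building and response parsing in separate functions makes both easy to test without a network connection or an API key.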

Exploring the Capabilities of Llama 3.1 API

- Improved Data Handling: Llama 3.1's 128K-token context window lets developers pass large inputs, such as long documents, in a single request instead of splitting them up.

- Enhanced Security: Meta released Llama 3.1 alongside safety tools such as Llama Guard 3 and Prompt Guard, which help filter harmful content and malicious prompts.

- User-Friendly Design: The API's straightforward interface eases the learning curve, even for developers with limited coding experience.

- Integration Capabilities: Seamlessly integrates with existing systems, allowing for more flexible software development and deployment.

Why use the Llama 3.1 API?

The Llama 3.1 API is a strong option for developers who want to add language processing to their projects. Its ease of use and broad functionality make it a popular choice for a wide range of applications.

The API makes things simpler by removing the need for complex model training and management. This helps developers focus on creating new and impactful solutions. Whether it is for content creation, improving chatbots, or exploring new areas in AI, the Llama 3.1 API gives developers access to powerful tools.

What are the benefits of using the Llama 3.1 API?

Building on a strong foundation, the Llama 3.1 API offers many benefits:

- High-Quality Text Generation: Llama 3.1 produces clear, relevant, human-like text, a quality supported by Meta's extensive human evaluations.

- Time and Resource Efficiency: Developers can save time and effort by using the pre-trained Llama 3.1 model, avoiding the hard work of training their own models.

- Scalability and Flexibility: The API easily scales to fit different project needs. It works well for small applications and large deployments, giving developers a flexible option.
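On the client side, scaling often means sending many prompts at once while capping concurrency to stay within provider rate limits. The sketch below uses Python's standard `concurrent.futures.ThreadPoolExecutor`; `send` is a hypothetical stand-in for whatever function calls your provider, and `max_workers=4` is an arbitrary example value, not a documented limit.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_batch(prompts: list[str], send: Callable[[str], str],
              max_workers: int = 4) -> list[str]:
    """Send prompts concurrently and return the replies in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, even if calls finish out of order
        return list(pool.map(send, prompts))


if __name__ == "__main__":
    # Fake sender for local testing; replace it with a real API call.
    def fake_send(prompt: str) -> str:
        return prompt.upper()

    print(run_batch(["hello", "llama"], fake_send))  # ['HELLO', 'LLAMA']
```

Threads suit this workload because each call spends most of its time waiting on the network rather than using the CPU.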

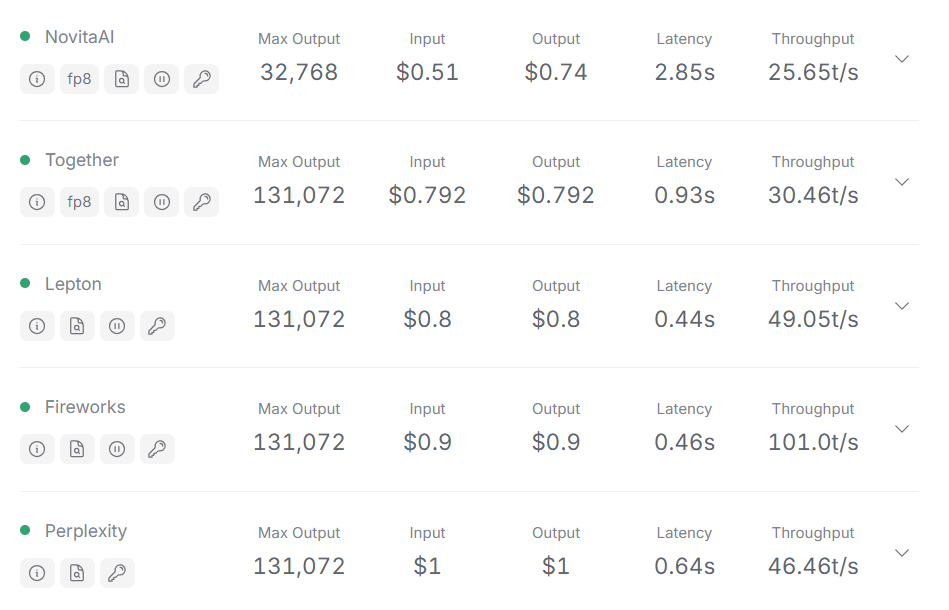

Llama API Pricing

Understanding the pricing structure helps developers make informed integration decisions. Because Meta releases the Llama 3.1 weights openly, most developers access the API through hosting providers such as Novita AI, Replicate, or OpenRouter, each of which sets its own prices. Providers typically charge per token processed, and rates generally increase with model size (8B, 70B, or 405B parameters).
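Per-token pricing makes costs straightforward to estimate. The sketch below assumes separate per-million-token rates for input and output tokens, a common provider scheme; the rates in the example are hypothetical placeholders, not Novita AI's or any other provider's actual prices.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_rate_per_m: float, output_rate_per_m: float) -> float:
    """Return the cost in dollars given per-million-token rates."""
    return (input_tokens * input_rate_per_m
            + output_tokens * output_rate_per_m) / 1_000_000


if __name__ == "__main__":
    # HYPOTHETICAL rates in USD per 1M tokens; check your provider's pricing page.
    cost = estimate_cost(input_tokens=2_000_000, output_tokens=400_000,
                         input_rate_per_m=0.50, output_rate_per_m=0.75)
    print(f"${cost:.2f}")  # $1.30
```

Running the same calculation with each model size's rates shows how much switching between 8B, 70B, and 405B would change a monthly bill.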

Llama API documentation

Hardware and Software

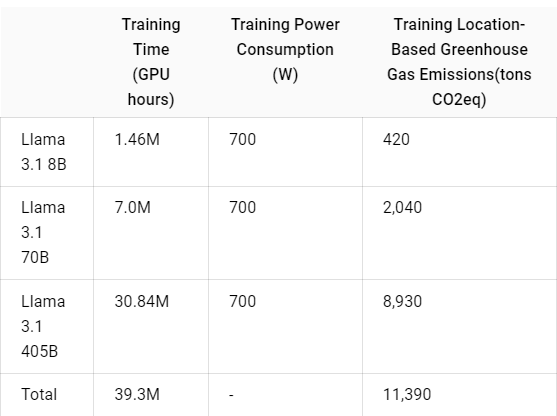

Training Factors: Meta used custom training libraries, its custom-built GPU cluster, and production infrastructure for pretraining. Fine-tuning, annotation, and evaluation were also performed on production infrastructure.

Training Energy Use: Training used a cumulative 39.3M GPU hours of computation on H100-80GB (TDP of 700 W) hardware, per the table above. Training time is the total GPU time required to train each model, and power consumption is the peak power capacity per GPU device used, adjusted for power usage efficiency.
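As a rough sanity check on these figures, multiplying GPU hours by each GPU's 700 W TDP gives the GPUs' energy draw if every device ran at full TDP for all of its hours. This is a simplification: it excludes the power-usage-efficiency adjustment mentioned above and is not Meta's reported figure.

```python
def peak_energy_gwh(gpu_hours: float, tdp_watts: float) -> float:
    """Energy in GWh if every GPU drew its full TDP for all of its hours."""
    watt_hours = gpu_hours * tdp_watts
    return watt_hours / 1e9  # Wh -> GWh


if __name__ == "__main__":
    # 39.3M H100-80GB GPU hours at a 700 W TDP, from the figures above
    print(f"{peak_energy_gwh(39.3e6, 700):.2f} GWh")  # 27.51 GWh
```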

How to Integrate and Use the Llama 3.1 API

The steps below walk through building language processing applications with the Llama 3.1 API on Novita AI.

- Step 1: Register and log in to Novita AI.

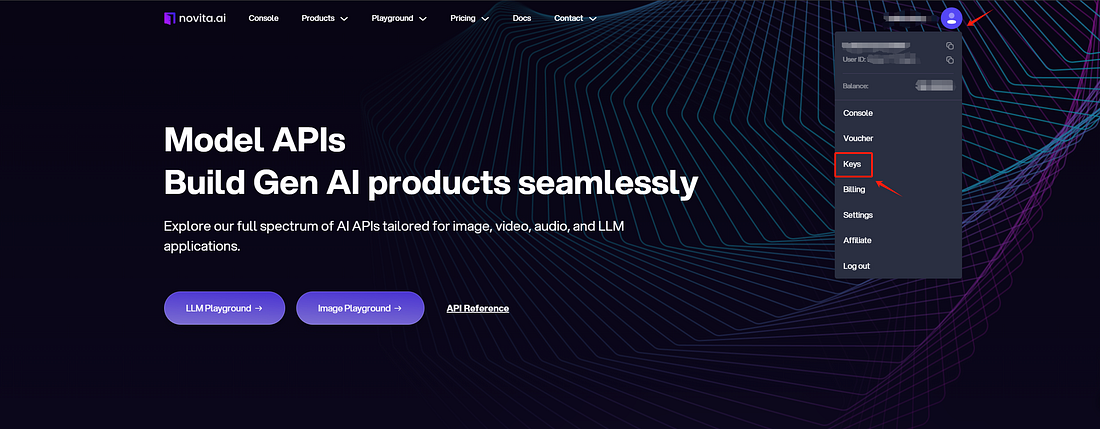

- Step 2: Go to the Dashboard tab in Novita AI to get your API key. You can generate a new key there.

- Step 3: On the Manage keys page, click Copy to retrieve your key.

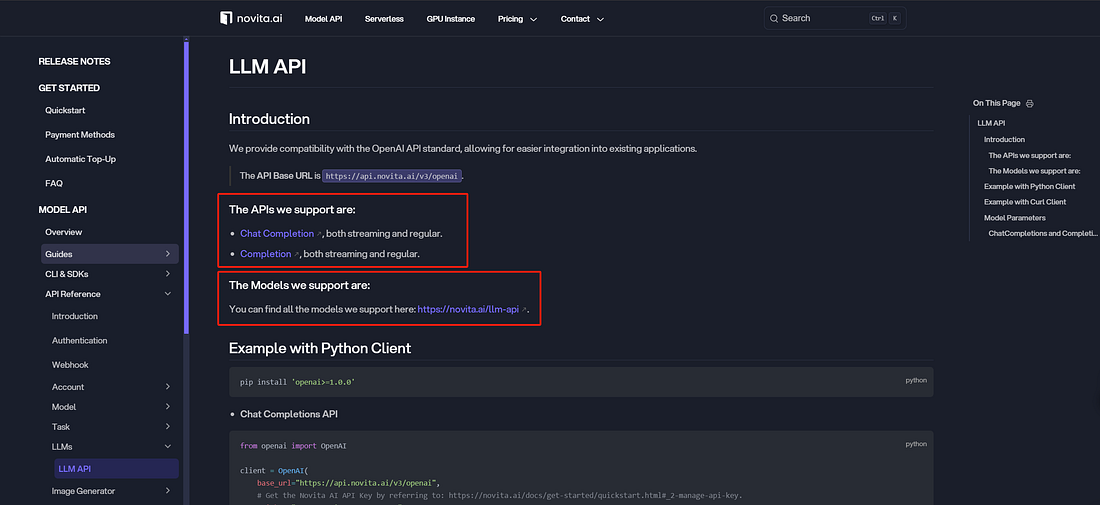

- Step 4: Visit the LLM API reference page to explore the APIs and models available through Novita AI.

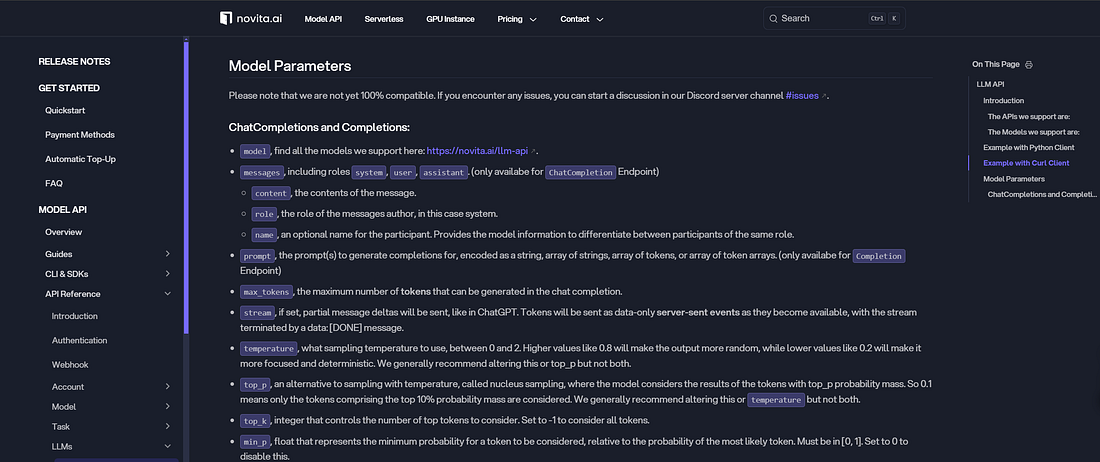

- Step 5: Select the model that fits your needs, configure your development environment, and set request parameters such as each message's role, content, and name, along with a detailed prompt.

- Step 6: Run several test requests to confirm that the API responds reliably.
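Steps 3, 5, and 6 can be sketched in code using only Python's standard library. This is a minimal sketch under stated assumptions: the endpoint URL (an OpenAI-compatible chat-completions path), the model ID, and the `NOVITA_API_KEY` environment variable name are all assumptions, so confirm the real values on the LLM API reference page from Step 4.

```python
import json
import os
import time
import urllib.error
import urllib.request

# ASSUMPTIONS: confirm the endpoint and model ID on Novita AI's LLM API reference page.
API_URL = "https://api.novita.ai/v3/openai/chat/completions"
MODEL = "meta-llama/llama-3.1-8b-instruct"


def build_request(api_key: str, messages: list[dict]) -> urllib.request.Request:
    """Create a POST request carrying the API key (Step 3) and messages (Step 5)."""
    body = json.dumps({"model": MODEL, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def with_retries(call, attempts: int = 3, base_delay: float = 1.0):
    """Run call(), retrying on urllib errors with exponential backoff (Step 6).

    URLError also covers HTTPError, so HTTP error responses are retried too.
    """
    for attempt in range(attempts):
        try:
            return call()
        except urllib.error.URLError:
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)


def chat(messages: list[dict]) -> str:
    # NOVITA_API_KEY is a hypothetical variable name; store the copied key there.
    req = build_request(os.environ["NOVITA_API_KEY"], messages)

    def send() -> str:
        with urllib.request.urlopen(req, timeout=60) as resp:
            data = json.load(resp)
        return data["choices"][0]["message"]["content"]

    return with_retries(send)


if __name__ == "__main__" and "NOVITA_API_KEY" in os.environ:
    print(chat([
        {"role": "system", "content": "You are a concise study assistant."},
        {"role": "user", "name": "student",
         "content": "Summarize photosynthesis in two sentences."},
    ]))
```

Reading the key from an environment variable keeps it out of source control, and the retry helper gives a simple way to exercise the reliability checks from Step 6.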

You can also try Llama 3.1 in the Novita AI LLM Playground, for example to summarize transcribed study notes:

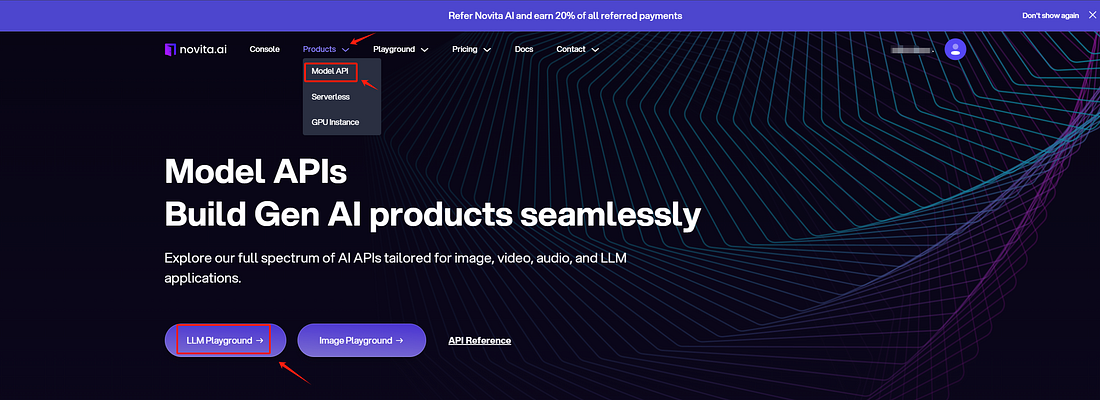

- Step 1: Navigate to the Playground: click the Products tab in the menu, choose Model API, and then select LLM API.

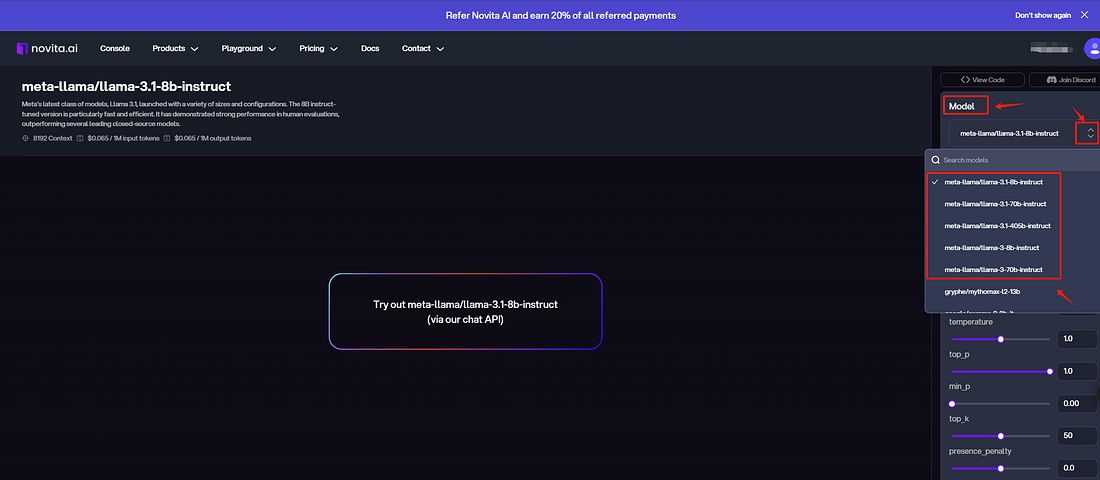

- Step 2: Explore different models: pick the Llama model you want to evaluate.

- Step 3: Enter a prompt and generate results: type your prompt, the text or question you want the model to address, into the input field.

Llama 3.1 API on Python

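Python users can call the Llama 3.1 API with very little code. Here is a quick example using the Replicate Python client:

```python
import os

import replicate

# Authenticate with your Replicate API token (read from an environment variable here)
client = replicate.Client(api_token=os.environ["REPLICATE_API_TOKEN"])

# Llama 3.1 405B Instruct as hosted on Replicate; language models return text in chunks
output = client.run(
    "meta/meta-llama-3.1-405b-instruct",
    input={"prompt": "Write a poem about AI"},
)
print("".join(output))
```

Llama 3.1 API Example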

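To get started, you can call Llama 3.1 70B Instruct through OpenRouter like this:

```python
import os

import requests

OPENROUTER_API_KEY = os.environ["OPENROUTER_API_KEY"]
YOUR_SITE_URL = "https://your-site.example"  # hypothetical placeholder
YOUR_APP_NAME = "My App"  # hypothetical placeholder

response = requests.post(
    url="https://openrouter.ai/api/v1/chat/completions",
    headers={
        "Authorization": f"Bearer {OPENROUTER_API_KEY}",
        "HTTP-Referer": YOUR_SITE_URL,  # Optional, for including your app on openrouter.ai rankings.
        "X-Title": YOUR_APP_NAME,  # Optional. Shows in rankings on openrouter.ai.
    },
    json={
        "model": "meta-llama/llama-3.1-70b-instruct",  # Optional
        "messages": [
            {"role": "user", "content": "What is the meaning of life?"}
        ],
    },
)
response.raise_for_status()  # surface HTTP errors instead of failing silently
print(response.json()["choices"][0]["message"]["content"])
```

Llama 3.1 API Updates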

Stay informed about upcoming updates or maintenance that could affect your use of the Llama 3.1 API. Updates can bring new features or critical security patches, so applying them promptly keeps your applications optimized, protected against emerging threats, and running smoothly for your users.

Conclusion

The Llama 3.1 API is a robust resource for developers who want to integrate advanced language processing into their applications. Regular updates keep its functionality and security current, and its user-friendly interface and detailed documentation help developers make full use of its language processing features.

Frequently Asked Questions

Can Llama 3.1 API Enhance Existing Development Processes?

The Llama 3.1 API can enhance development by providing advanced natural language processing that integrates into existing applications, supporting tasks from sentiment analysis to summarization and translation.

Is it Safe & Secure to Use Llama 3.1 API?

Meta supports safe use of Llama 3.1 with tools such as Llama Guard for content moderation and Prompt Guard for protection against malicious prompts.

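Is Llama 3.1 better than GPT-4?

Meta reports that Llama 3.1 405B is competitive with leading closed models, including GPT-4 and GPT-4o, across a range of benchmarks, though results vary by task. Because OpenAI has not disclosed GPT-4's parameter count, the two cannot be compared on model size.

Is Llama 3.1 better than Claude?

Meta reports that Llama 3.1 405B is competitive with Claude 3.5 Sonnet on many benchmarks. Its main advantages are flexibility, cost-effectiveness, and openly available weights.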

Originally published at Novita AI

Novita AI is your all-in-one cloud platform tailored to support your AI ambitions. Offering integrated APIs, serverless computing, and GPU instances, we deliver affordable tools crucial for your success. Streamline your infrastructure requirements and kickstart at no cost — easily and efficiently turning your AI dreams into reality.