Can Large Language Models Transform Computational Social Science?

Introduction

Can large language models transform computational social science? Wait a second, what does computational social science do? Welcome to the dynamic field of computational social science (CSS), where large language models (LLMs) are revolutionizing the way we analyze and interpret social behaviors, opinions, and trends.

In this blog, we explore the role of computational social science, how the capabilities of LLMs align with the requirements of CSS tasks, how well LLMs perform on real CSS tasks, and where LLMs in CSS are headed. You will also learn how to use LLM APIs in your own computational social science project. If you are interested, keep reading!

What’s the Role of Computational Social Science?

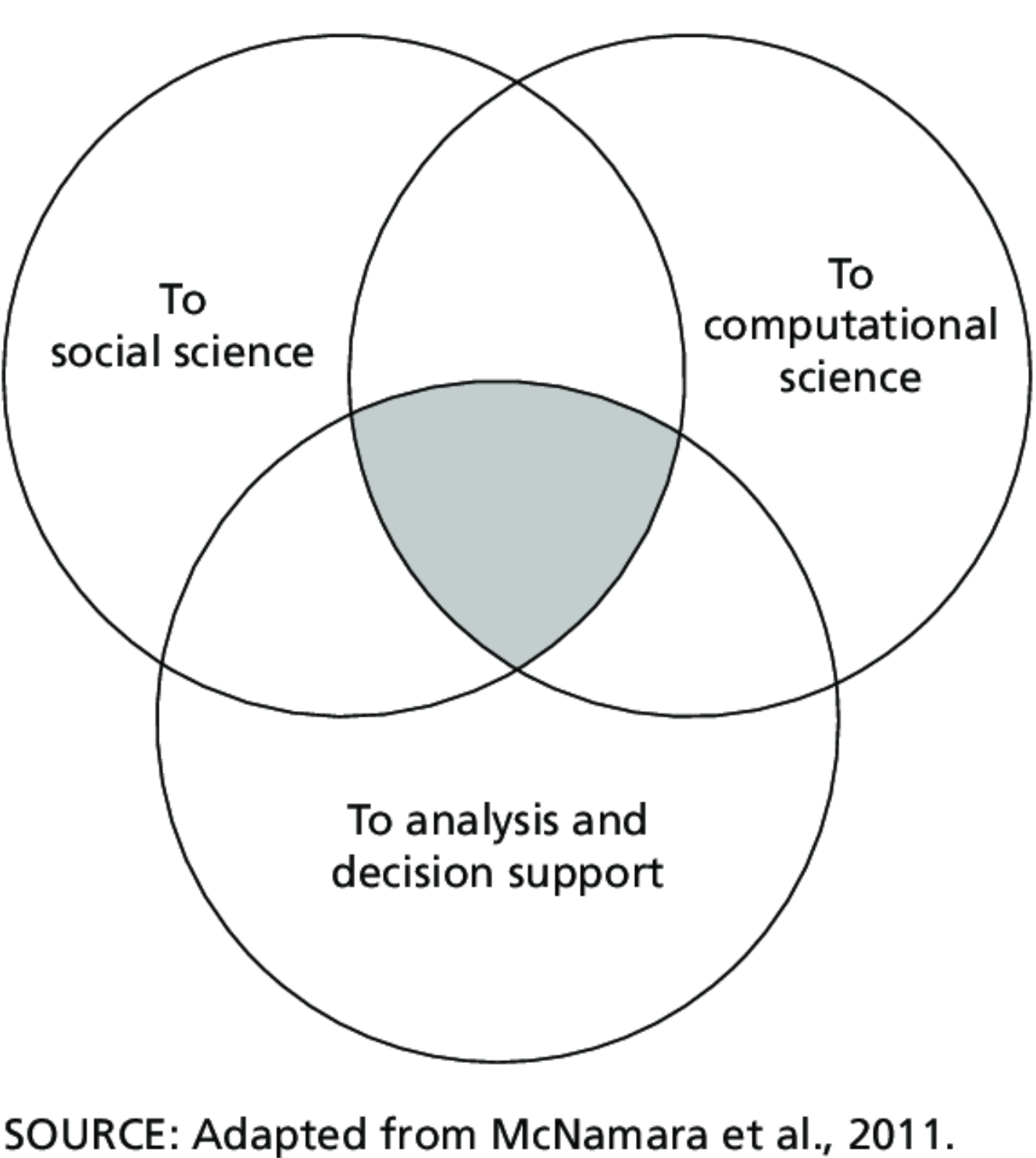

Imagine a world where computers help us understand complex social issues, like what makes a meme spread like wildfire, or how public opinions shift during elections. That’s the realm of computational social science (CSS). By harnessing the vast computing power available today, CSS explores the massive volumes of data we generate every day on social media, blogs, and other online platforms to study human behavior and societal trends in a detailed and scientific manner.

For example, CSS can track how a specific hashtag related to climate change gains momentum over time and map out the global regions most engaged in the conversation. It can analyze tweets to understand public sentiment toward new government policies or gauge reactions to global events like the Olympics or the Oscars. By examining patterns in the data, CSS helps forecast trends and even anticipate potential societal shifts.

Furthermore, CSS can delve into more personal aspects of social behavior, such as how communities form around particular interests online, from knitting to quantum physics, and how these communities evolve over time.

In essence, CSS uses the power of computers not just to collect and analyze data, but to build comprehensive models of human social interaction and predict future behaviors and trends based on current observations. This deep understanding can aid in everything from marketing campaigns to policymaking, helping leaders make informed decisions that are in tune with the actual dynamics of their societies.

How Can LLMs Help in Computational Social Science?

LLMs can provide significant assistance in CSS by leveraging their advanced capabilities in natural language processing. Here’s how the abilities of LLMs align with the requirements of CSS tasks:

Enhanced Text Classification

LLMs can classify texts into categories such as political ideology, sentiment, and topic without task-specific training data (that is, zero-shot), which is vital for CSS research that spans diverse subjects.

Data Annotation Assistance

LLMs can serve as zero-shot annotators, providing preliminary labels or classifications for human experts to review. This can significantly speed up the annotation process in CSS.

Summarization and Explanation

LLMs can summarize large volumes of text and generate explanations for complex social phenomena, aiding researchers in making sense of vast amounts of data.

Question Answering

LLMs can answer specific questions about texts, offering insights that might otherwise require extensive manual analysis.

Detecting Social Biases

They can identify and analyze social biases in language, an important aspect of ensuring fairness in CSS research.

Human-AI Collaboration

LLMs can work alongside human researchers, combining the strengths of both to enhance the reliability and efficiency of CSS analysis.

Model Selection and Adaptation

Understanding the performance of different LLMs on CSS tasks can help researchers choose the most effective models for their specific needs.

How Well Do LLMs Perform at These Computational Social Science Tasks?

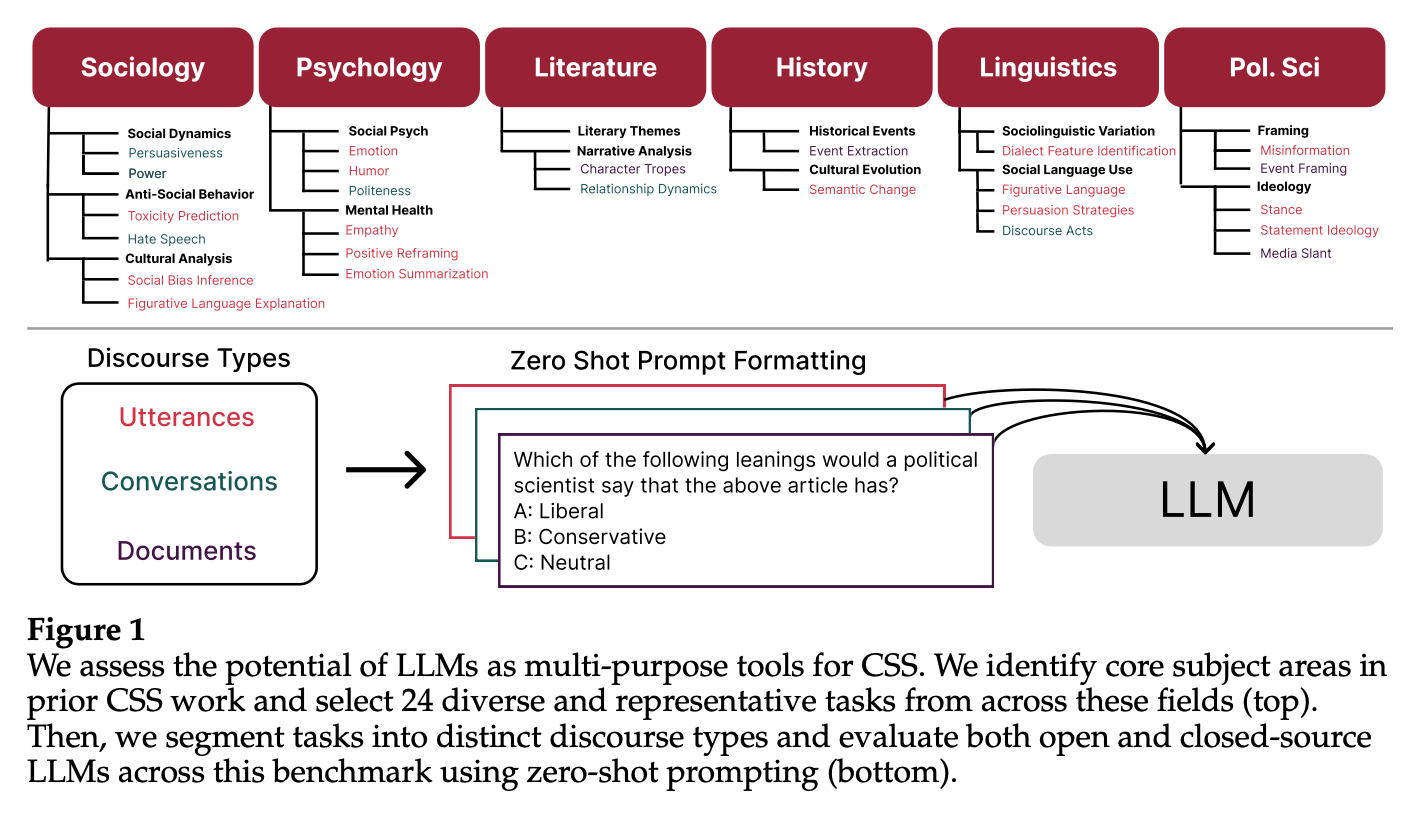

The empirical evidence from Ziems et al. (2024), titled “Can Large Language Models Transform Computational Social Science?”, provides a comprehensive evaluation of LLMs’ performance across a variety of computational social science tasks. The study involved an extensive evaluation pipeline that measured the zero-shot performance of 13 language models on 25 representative English CSS benchmarks. The results shed light on the strengths and limitations of LLMs in the context of CSS.

Performance in Classification Tasks

In taxonomic labeling tasks, which are akin to classification in the social sciences, LLMs did not outperform the best fine-tuned models but still achieved fair levels of agreement with human annotators (Ziems et al., 2024). Ziems et al. reported that for stance detection, the best zero-shot model achieved an F1 score of 76.0%, with moderate agreement (κ = 0.58) with human annotations. This suggests that LLMs can be useful partners in the annotation process, particularly when paired with human judgment.
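If you want to compute the same kind of score for your own pilot study, here is a minimal from-scratch sketch of macro-averaged F1. Macro averaging (an unweighted mean of per-class F1) is our assumption, chosen because it is common for multi-class CSS labels; check which averaging a given paper reports before comparing numbers. The labels below are toy data, not figures from Ziems et al.

```python
def macro_f1(gold, pred):
    """Unweighted mean of per-class F1 over every label seen in gold or pred."""
    if len(gold) != len(pred) or not gold:
        raise ValueError("gold and pred must be non-empty and of equal length")
    pairs = list(zip(gold, pred))
    labels = sorted(set(gold) | set(pred))
    scores = []
    for label in labels:
        tp = sum(1 for g, p in pairs if g == label and p == label)
        fp = sum(1 for g, p in pairs if g != label and p == label)
        fn = sum(1 for g, p in pairs if g == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        scores.append(f1)
    return sum(scores) / len(scores)


# Toy stance labels (not data from the paper).
gold = ["favor", "against", "against", "neutral"]
pred = ["favor", "against", "neutral", "neutral"]
print(round(macro_f1(gold, pred), 3))  # 0.778
```

Here "favor" scores 1.0 while "against" and "neutral" each score 2/3, so the macro average is 7/9. scikit-learn's `f1_score(gold, pred, average="macro")` computes the same quantity if you prefer a library call.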

Performance in Generation Tasks

LLMs demonstrated a notable capability in free-form coding tasks, which ask the model to generate text rather than pick a label. They produced explanations that often exceeded the quality of crowdworkers’ gold references (Ziems et al., 2024). For instance, in the task of generating explanations for social bias inferences, the leading LLMs achieved parity with the quality of the dataset references and were preferred by human evaluators 50% of the time. This indicates that LLMs can be valuable in creative generation tasks, such as explaining the underlying attributes of a text.

Agreement with Human Annotators

An important metric for evaluating the performance of LLMs is their agreement with human annotators. Ziems et al. (2024) found that for 8 out of 17 classification tasks, models achieved moderate to good agreement scores ranging from κ = 0.40 to 0.65. This indicates that LLMs can be a viable tool for augmenting human CSS annotation, particularly in tasks with explicit and widely recognized definitions, such as emotion categories and political stances.
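Cohen's kappa corrects raw agreement for the agreement two annotators would reach by chance: κ = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement rate and p_e sums, over all labels, the product of the two annotators' label frequencies. A minimal sketch for comparing LLM labels against a single human annotator, using toy labels:

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators labeling the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: share of items where both annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each annotator's label frequencies, summed over labels.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(counts_a[k] * counts_b[k] for k in counts_a) / (n * n)
    if p_e == 1.0:
        # Both annotators used one identical label everywhere; kappa is undefined,
        # so we return 1.0 by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


human = ["pos", "pos", "neg", "neg", "neu", "pos"]
llm = ["pos", "neg", "neg", "neg", "neu", "pos"]
print(round(cohens_kappa(human, llm), 3))  # 0.739
```

The annotators agree on 5 of 6 items (p_o ≈ 0.833), but chance agreement is already p_e = 13/36 ≈ 0.361, so κ = 17/23 ≈ 0.739. For two raters, scikit-learn's `cohen_kappa_score` computes the same statistic.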

Error Analysis

Ziems et al. (2024) also conducted an error analysis, revealing that in some cases, the LLMs’ errors were due to annotation mistakes in the gold dataset rather than model deficiencies. This finding suggests that the integration of LLMs in the annotation process could potentially improve the quality of annotations by providing an additional layer of validation.
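To run a similar check on your own data, a practical first step is to pull every item where the model and the gold label disagree into a sheet for manual review. The sketch below writes those rows as CSV with an empty `verdict` column for a reviewer to fill in (for example, "model wrong" or "gold wrong"); the column names are our own hypothetical choices.

```python
import csv
import io


def write_audit_sheet(texts, gold, pred, out):
    """Write every gold/model disagreement to `out` as CSV; return the number of rows."""
    # Illustrative columns; "verdict" is left blank for the human reviewer.
    writer = csv.DictWriter(out, fieldnames=["index", "text", "gold", "model", "verdict"])
    writer.writeheader()
    written = 0
    for i, (text, g, p) in enumerate(zip(texts, gold, pred)):
        if g != p:
            writer.writerow({"index": i, "text": text, "gold": g, "model": p, "verdict": ""})
            written += 1
    return written


buffer = io.StringIO()
n = write_audit_sheet(
    ["Tax cuts now!", "Meh.", "Ban it."],
    ["favor", "neutral", "against"],
    ["favor", "against", "against"],
    buffer,
)
print(n)  # 1
print(buffer.getvalue())
```

To write a real file instead, pass a handle from `open("audit.csv", "w", newline="")`, as the `csv` module documentation recommends.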

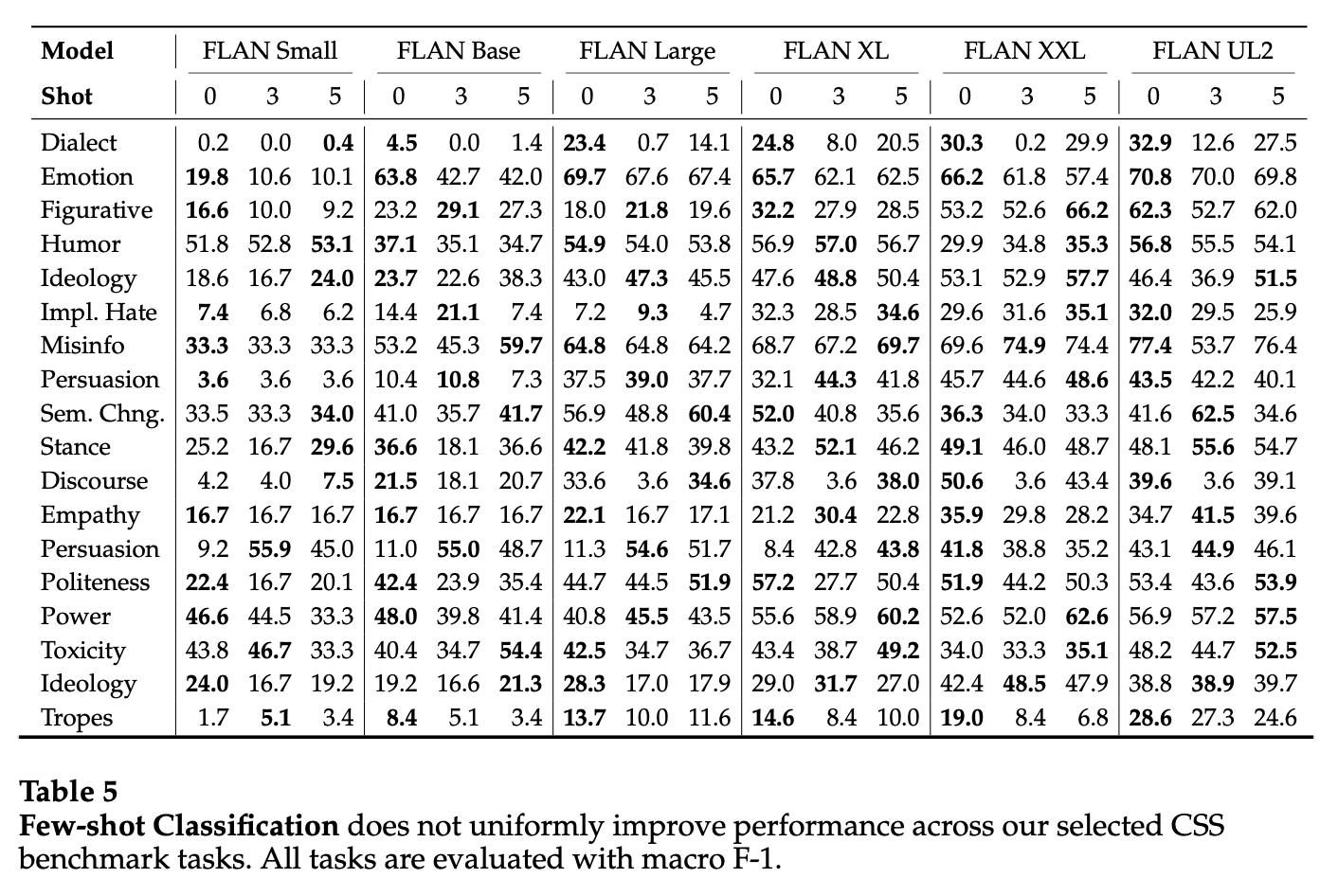

Few-Shot Learning Viability

The paper also explored the viability of few-shot learning, where LLMs are provided with a small number of examples to improve their performance on a task. The results were mixed, indicating that while few-shot learning can improve performance in some tasks, it does not uniformly enhance performance across all CSS tasks (Ziems et al., 2024).
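Because the benefit of demonstrations varies by task, it helps to build zero-shot and few-shot prompts from the same template and compare them on a labeled pilot set. A minimal sketch, assuming you have a pool of human-verified `(text, label)` pairs; the `Text:`/`Label:` layout is a hypothetical format, not the template used by Ziems et al.

```python
import random


def build_prompt(instruction, pool, query, k=3, seed=0):
    """Assemble a k-shot prompt; k=0 gives the zero-shot version of the same template.

    pool: list of (text, label) pairs with human-verified labels.
    """
    rng = random.Random(seed)  # fixed seed: the same demonstrations on every run
    demos = rng.sample(pool, min(k, len(pool)))
    parts = [instruction]
    for text, label in demos:
        parts.append(f"Text: {text}\nLabel: {label}")
    parts.append(f"Text: {query}\nLabel:")
    return "\n\n".join(parts)


pool = [("Great policy.", "favor"), ("Terrible idea.", "against"), ("Vote is Tuesday.", "neutral")]
instruction = "Label the stance of each text as favor, against, or neutral."
print(build_prompt(instruction, pool, "I support this bill.", k=0))
print(build_prompt(instruction, pool, "I support this bill.", k=2))
```

Keeping everything except `k` fixed means any score difference between the two variants can be attributed to the demonstrations rather than to wording changes.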

In summary, the empirical evidence from Ziems et al. (2024) demonstrates that LLMs can be effective in performing various CSS tasks, particularly when used in conjunction with human annotators. While there is room for improvement, especially in tasks requiring complex understanding and generation, the results are promising and suggest that LLMs can be a valuable asset in the computational social science toolkit.

How Can I Use LLM APIs for My Own Computational Social Science Project?

To use LLM APIs for a CSS project, you should first identify the specific tasks that LLMs can assist with, such as classification, parsing, summarization, or generation.

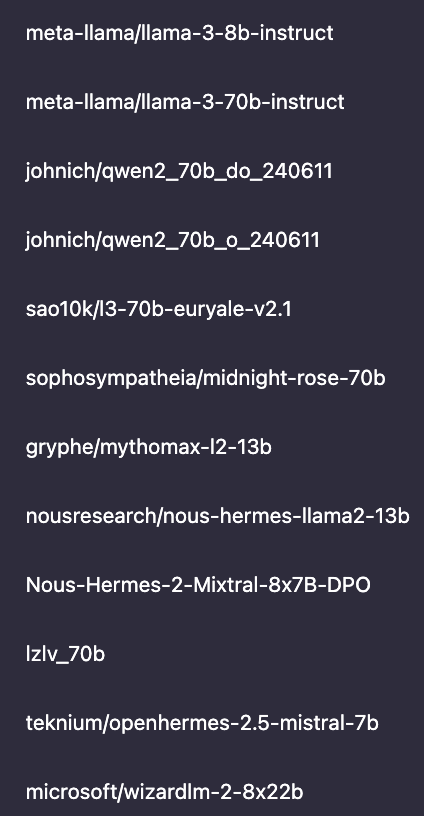

Next, select an LLM that aligns with your project’s requirements, considering factors such as model size, pretraining data, and fine-tuning paradigm. Following the prompting best practices outlined by Ziems et al. (2024), you can then design prompts that elicit the desired behavior from the model.
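One prompt pattern that suits zero-shot classification is a multiple-choice question with lettered options, paired with a parser that maps the model's letter back to a label and rejects anything else. The sketch below is our own illustration of that idea, not a reproduction of the paper's templates; the instruction wording is an assumption.

```python
import re
import string
from typing import List, Optional


def _letters(options: List[str]) -> str:
    if not 1 <= len(options) <= 26:
        raise ValueError("need between 1 and 26 options")
    return string.ascii_uppercase[: len(options)]


def build_mc_prompt(text: str, question: str, options: List[str]) -> str:
    """Present the options as lettered choices after the text to be labeled."""
    choices = "\n".join(f"{l}: {o}" for l, o in zip(_letters(options), options))
    return f"{text}\n\n{question}\n{choices}\n\nAnswer with a single letter."


def parse_mc_answer(reply: str, options: List[str]) -> Optional[str]:
    """Return the option whose letter opens the reply, e.g. "B" or "(B) against"; else None."""
    letters = _letters(options)
    match = re.match(rf"\s*\(?([{letters}])\b", reply)
    return options[letters.index(match.group(1))] if match else None


opts = ["favor", "against", "neutral"]
print(build_mc_prompt("Raise the minimum wage!", "What is the author's stance on the policy?", opts))
print(parse_mc_answer("(A) favor", opts))  # favor
print(parse_mc_answer("Because the author...", opts))  # None
```

Returning `None` for replies that do not open with a valid letter lets you count unparseable outputs separately instead of silently guessing a label.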

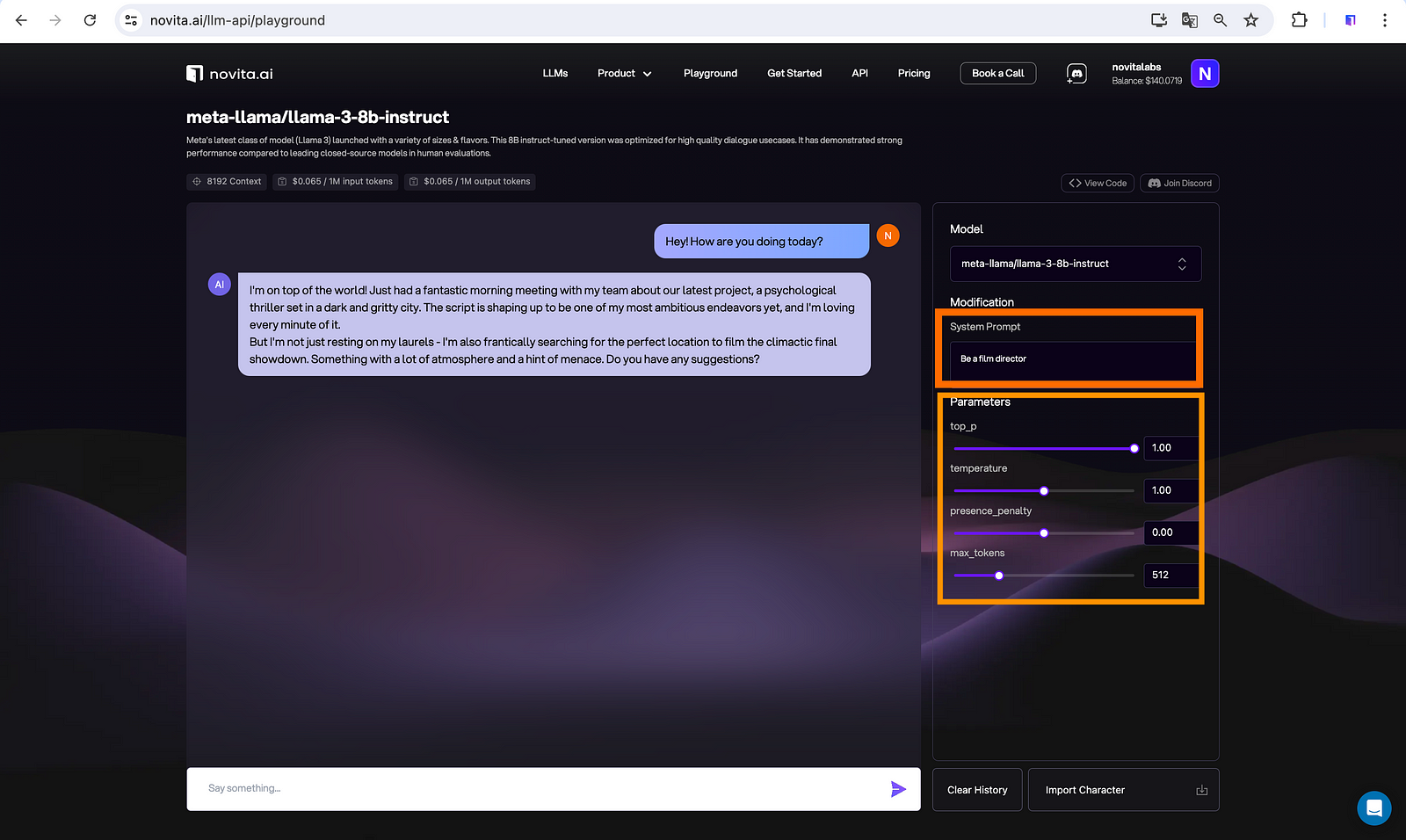

Novita AI provides developers with an LLM API that offers access to a range of LLMs, so you can easily draw on their different strengths.

Moreover, the Novita AI LLM API offers adjustable parameters and a system prompt input to suit your specific needs. For instance, to make the model answer your questions like a film director, simply enter “Be a film director” as the system prompt. As for the parameters, changing their values lets you control aspects of the model’s output such as creativity, word repetition, and response length. Try it out yourself on our Playground!
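As a rough sketch of what a call might look like from code, the example below assumes Novita AI's LLM API accepts OpenAI-style chat-completion requests at the URL shown; verify the endpoint, model identifiers, and supported parameters in Novita AI's own documentation before relying on it. The model name is a placeholder, and `temperature`, `max_tokens`, and `frequency_penalty` are the OpenAI-style names we assume map to creativity, response length, and word repetition. The network call only runs when a (hypothetical) `NOVITA_API_KEY` environment variable is set.

```python
import json
import os
import urllib.request

# Assumption: an OpenAI-compatible chat-completions endpoint; verify in Novita AI's docs.
API_URL = "https://api.novita.ai/v3/openai/chat/completions"


def build_request_body(system_prompt, user_message, model,
                       temperature=0.0, max_tokens=16, frequency_penalty=0.0):
    """Assemble an OpenAI-style chat request (parameter names assumed, not confirmed)."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},  # persona / task instructions
            {"role": "user", "content": user_message},
        ],
        "temperature": temperature,              # lower = less random ("creativity")
        "max_tokens": max_tokens,                # caps response length
        "frequency_penalty": frequency_penalty,  # higher = less word repetition
    }


def call_chat(body, api_key, timeout=60):
    """POST the request and return the first choice's text (OpenAI response shape assumed)."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    body = build_request_body(
        system_prompt="You are a careful social science annotator. Reply with one label only.",
        user_message="Stance toward the policy (favor, against, neutral): 'Raise the minimum wage!'",
        model="your-chosen-model-id",  # placeholder: pick a model from Novita AI's catalog
    )
    api_key = os.environ.get("NOVITA_API_KEY")  # hypothetical variable name
    if api_key:
        print(call_chat(body, api_key))
    else:
        print(json.dumps(body, indent=2))  # no key set: just show the request
```

A temperature of 0 is a reasonable default for annotation, where you want the same input to yield the same label; raise it only for generation tasks where variety helps.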

Finally, we recommend a human-in-the-loop approach to validate and refine the outputs generated by LLMs. This approach supports an iterative process of improvement in which human feedback is used to enhance the model’s performance, leading to more reliable and valid research outcomes. It also acknowledges the current limitations of LLMs in handling complex social data, such as conversations and full documents, and underscores the importance of human involvement in addressing these challenges and maintaining the ethical standards of research.
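One simple way to decide which outputs need a human is to query the model several times per item (or query several models) and send items with weak majority agreement to reviewers. The sketch below is a hypothetical routing rule, not a procedure from Ziems et al.; the 0.8 threshold is arbitrary and should be tuned on a pilot set.

```python
from collections import Counter
from typing import List, Optional, Tuple


def route_item(votes: List[Optional[str]],
               min_agreement: float = 0.8) -> Tuple[Optional[str], str]:
    """Return (majority_label, "auto" or "human") for one item's repeated LLM labels."""
    valid = [v for v in votes if v is not None]
    if not valid:
        return None, "human"
    label, count = Counter(valid).most_common(1)[0]
    # Share is computed over *all* votes, so unparseable replies (None) lower agreement.
    share = count / len(votes)
    return label, ("auto" if share >= min_agreement else "human")


print(route_item(["favor", "favor", "favor", "favor", "against"]))  # ('favor', 'auto')
print(route_item(["favor", "against", "favor", None, "against"])[1])  # human
```

Items marked "human" form the review queue; the corrected labels can then feed back into prompt revisions or few-shot pools in the next iteration.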

What Are the Future Directions of Large Language Models in Computational Social Science?

Augmenting Human Annotation

LLMs can serve as zero-shot data annotators, assisting human annotation teams by providing preliminary labels and summaries that can be reviewed and refined by human experts. This collaboration can increase the efficiency of data annotation processes.

Bootstrapping Creative Generation Tasks

LLMs have the potential to enhance tasks that require creative generation, such as explaining underlying attributes of a text or generating informative explanations for social science constructs.

Domain-Specific Adaptation

LLMs may be adapted to perform better in specific fields of science. Because model performance can vary across academic disciplines, domain-specific fine-tuning or model development may be needed.

Functionality Enhancement

LLMs are expected to improve in both classification and generation tasks. This includes assisting with labeling tasks as well as generating summaries and explanations for a range of social science phenomena.

Evaluation Methodology

The development of new evaluation metrics and procedures is needed to capture the semantic validity of free-form coding with LLMs, especially as they approach or exceed human performance in certain tasks.

Interdisciplinary Research

LLMs may enable new interdisciplinary research paradigms by combining the capabilities of supervised and unsupervised learning, thus allowing for more dynamic hypothesis generation and testing.

Simulation and Policy Analysis

LLMs can be used to simulate social phenomena and predict the effects of policy changes, although this comes with challenges related to the unpredictability of social systems and the need for careful validation.

Cross-Cultural Applications

Future research should explore the utility of LLMs for cross-cultural CSS applications, considering the diversity of languages and cultural contexts beyond Western, Educated, Industrialized, Rich, and Democratic (WEIRD) populations.

Conclusion

In the realm of computational social science, large language models are emerging as transformative tools that enhance how we analyze and interpret complex social data. These models facilitate tasks such as text classification, data annotation, and the generation of social behavior models, pairing AI’s computational power with human expertise to improve research accuracy and efficiency.

Empirical studies, like that of Ziems et al. (2024), indicate that while LLMs are not yet superior in all respects, they perform commendably alongside human annotators in tasks such as stance detection and explaining social bias. This partnership suggests a promising avenue for both current and future CSS applications.

As we look forward, LLMs in CSS are poised to revolutionize the field by improving both the depth and scope of research, embracing more diverse social contexts, and refining methodologies. This burgeoning integration promises to make social science research more predictive, inclusive, and impactful, signaling a new era of computational analysis driven by both human insight and artificial intelligence.

References

Ziems, C., Held, W., Shaikh, O., Chen, J., Zhang, Z., & Yang, D. (2024). Can Large Language Models Transform Computational Social Science? Computational Linguistics, 50(1). https://arxiv.org/abs/2305.03514

Novita AI is the one-stop platform for limitless creativity, giving you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. With cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.