Boosting AI Development: TensorFlow and GPU Cloud Solutions

Introduction

Deep learning, a subfield of machine learning, is based on deep neural networks: models built from many stacked layers that learn representations directly from data. These networks are loosely inspired by how neurons in the human brain connect, rather than being a literal simulation of it. In recent years, deep learning has advanced significantly across many domains, including image recognition, natural language processing, speech recognition, autonomous vehicles, and medical diagnosis.

What is TensorFlow?

Developed by the Google Brain team, TensorFlow is an open-source deep learning framework. Its flexibility, user-friendliness, efficiency, and robustness have made it one of the most popular tools in the field of deep learning.

TensorFlow builds on a few fundamental concepts. The first is the computational graph, which organizes and represents a computation: each node denotes a mathematical operation, and the edges between nodes represent the data flowing from one operation to the next. This structure makes the computation explicit and lets TensorFlow optimize it and run independent operations in parallel. In TensorFlow 2.x, operations execute eagerly by default, and graphs are built by wrapping Python functions with tf.function.
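
The graph idea can be illustrated with a toy example in plain Python. This is a minimal sketch and not how TensorFlow is implemented; the names Node and evaluate are hypothetical and chosen only for illustration. It builds a graph for (a + b) * (a - b) and evaluates it by following the edges.

```python
import operator


class Node:
    """One operation in the graph; `inputs` are the incoming edges."""

    def __init__(self, name, op=None, inputs=(), value=None):
        self.name = name
        self.op = op          # callable applied to the input values
        self.inputs = inputs  # upstream nodes whose outputs flow into this one
        self.value = value    # set directly for constant (leaf) nodes


def evaluate(node, cache=None):
    """Evaluate `node` after evaluating everything it depends on."""
    if cache is None:
        cache = {}
    if node.name in cache:
        return cache[node.name]
    if node.op is None:
        result = node.value
    else:
        result = node.op(*(evaluate(n, cache) for n in node.inputs))
    cache[node.name] = result
    return result


# Graph for (a + b) * (a - b)
a = Node("a", value=5.0)
b = Node("b", value=3.0)
total = Node("add", operator.add, (a, b))
diff = Node("sub", operator.sub, (a, b))
product = Node("mul", operator.mul, (total, diff))

print(evaluate(product))  # 16.0
```

The add and sub nodes do not depend on each other, which is the kind of independence a framework can exploit to run operations in parallel.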

Data in TensorFlow takes the form of tensors: multidimensional arrays with a data type and a shape that serve as the basic unit of data. Every operation consumes and produces tensors, which makes them the foundation for building deep learning models.
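
To make "multidimensional array" concrete, the sketch below uses nested Python lists as stand-ins for tensors and computes their rank and shape. The helper shape is a hypothetical name for this example only; in TensorFlow itself, the corresponding information is available through a tensor's shape and dtype attributes.

```python
def shape(tensor):
    """Return the shape of a regular nested list as a tuple."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0] if tensor else None
    return tuple(dims)


scalar = 7.0                                    # rank 0, shape ()
vector = [1.0, 2.0, 3.0]                        # rank 1, shape (3,)
matrix = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # rank 2, shape (3, 2)
# A batch of 2 images, each 4x4 pixels with 3 color channels: rank 4
image_batch = [[[[0.0] * 3 for _ in range(4)] for _ in range(4)] for _ in range(2)]

for name, t in [("scalar", scalar), ("vector", vector),
                ("matrix", matrix), ("image_batch", image_batch)]:
    print(name, "rank", len(shape(t)), "shape", shape(t))
```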

TensorFlow also provides a rich library of operations covering the mathematical computations that deep learning requires, such as convolution, pooling, and activation functions. Users combine these operations to construct and train their own models, including complex neural network architectures.
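
As a rough picture of what these three operations compute, here is a one-dimensional version of each in plain Python. It is a sketch for intuition only, with a hypothetical signal and kernel; TensorFlow ships optimized equivalents such as tf.nn.conv1d, tf.nn.relu, and tf.nn.max_pool1d.

```python
def conv1d(signal, kernel):
    """'Valid' 1-D cross-correlation, which deep learning libraries call convolution."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def relu(values):
    """Activation function: negative values become zero."""
    return [max(0.0, v) for v in values]


def max_pool1d(values, size):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    return [max(values[i:i + size])
            for i in range(0, len(values) - size + 1, size)]


signal = [1.0, 3.0, 2.0, 0.0, 4.0, 1.0]   # hypothetical input
kernel = [1.0, -1.0]                      # hypothetical difference filter

features = conv1d(signal, kernel)   # [-2.0, 1.0, 2.0, -4.0, 3.0]
activated = relu(features)          # [0.0, 1.0, 2.0, 0.0, 3.0]
pooled = max_pool1d(activated, 2)   # [1.0, 2.0]
print(pooled)
```

Chaining convolution, activation, and pooling in this order mirrors the basic building block of a convolutional network.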

Several practical qualities explain this popularity. TensorFlow provides Python and C++ APIs, backed by extensive documentation and community resources, so developers can get started quickly. It runs on multiple hardware platforms, including CPUs, GPUs, and TPUs, so users can choose based on their actual needs. It relies on optimized implementations of its operations to make good use of the hardware, which accelerates both training and inference. TensorFlow also supports distributed training, in which multiple machines work together to meet the computational demands of large-scale models.

As models grow more complex and datasets larger, TensorFlow offers several ways to meet the rising demand for computing power. It runs computations in parallel on GPUs, which shortens each training iteration. It supports distributed training across multiple GPU devices, which improves training efficiency further and accommodates larger models and datasets. It also provides dedicated support for TPUs, the accelerators Google developed in-house for machine learning workloads.
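
The idea behind synchronous multi-GPU training can be sketched without any hardware. In the toy example below, each "worker" computes a gradient on its own slice of the data, the gradients are averaged, and one shared weight is updated. This is an illustration under simplifying assumptions (a one-parameter model, workers simulated in a loop, hypothetical function names), not TensorFlow's mechanism; in TensorFlow, tf.distribute.MirroredStrategy is one way to get this behavior across the GPUs of a single machine.

```python
def shard(data, num_workers):
    """Split the dataset round-robin so each worker gets its own slice."""
    return [data[i::num_workers] for i in range(num_workers)]


def local_gradient(w, examples):
    """Gradient of mean squared error for the model y = w * x on one shard."""
    return sum(2 * (w * x - y) * x for x, y in examples) / len(examples)


def train(data, num_workers, steps=200, lr=0.01):
    w = 0.0
    shards = shard(data, num_workers)
    for _ in range(steps):
        # On real hardware these run in parallel, one per GPU.
        grads = [local_gradient(w, s) for s in shards]
        # Average the gradients, then apply one update to the shared weight.
        w -= lr * sum(grads) / len(grads)
    return w


# Synthetic data generated from y = 3 * x, so training should recover w = 3.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
print(round(train(data, num_workers=4), 3))  # 3.0
```

Because the shards here are equal in size, averaging the per-worker gradients gives the same update as one worker processing the whole batch, which is why adding workers speeds up training without changing the result.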

How GPU Cloud Solves the Deep Learning Computing Power Bottleneck

Deep learning is a crucial branch of artificial intelligence, and its pace of development and innovation is largely constrained by computing power. As models grow more complex and datasets expand, traditional computing resources struggle to keep up. GPU cloud services have emerged in response, providing an effective way to relieve this bottleneck.

The core advantage of GPU cloud services is elastic scalability. Users can quickly add or release GPU resources as their needs change, so capacity tracks the actual workload instead of sitting idle. This flexibility keeps costs down and makes resource utilization more efficient. Furthermore, cloud service providers typically deploy recent GPU hardware designed for parallel processing and high throughput, which speeds up both training and inference of deep learning models.

By using GPU cloud services, users can free themselves from hardware maintenance and management concerns. Cloud platforms provide interfaces and management tools that simplify resource allocation and monitoring. This lowers the barrier to entry, enabling more researchers and developers to focus on model research and development instead of hardware maintenance.

Cost-effectiveness is another significant advantage of GPU cloud services. For small and medium-sized enterprises and individual researchers, the cost of purchasing and maintaining high-performance GPU hardware can be exorbitant. GPU cloud services allow for pay-as-you-go pricing, incurring costs only during actual usage, thereby reducing overall expenses. This on-demand pricing model ensures more economical and reasonable resource utilization.
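
A quick calculation shows how the pay-as-you-go trade-off works. All prices below are hypothetical placeholders, not quotes from any provider; substitute real figures before drawing conclusions.

```python
def cloud_cost(hours, hourly_rate):
    """Total rental cost when paying only for hours actually used."""
    return hours * hourly_rate


def break_even_hours(purchase_price, hourly_rate, owned_hourly_overhead=0.0):
    """Hours of use at which buying a GPU becomes cheaper than renting one."""
    saving_per_hour = hourly_rate - owned_hourly_overhead
    if saving_per_hour <= 0:
        return float("inf")  # renting never costs more per hour than owning
    return purchase_price / saving_per_hour


# Hypothetical figures for illustration only.
PURCHASE_PRICE = 2000.0   # up-front cost of a GPU
HOURLY_RATE = 0.75        # cloud rental price per GPU-hour
OWNED_OVERHEAD = 0.25     # power and cooling per hour when owning

print(cloud_cost(120, HOURLY_RATE))                                   # 90.0
print(break_even_hours(PURCHASE_PRICE, HOURLY_RATE, OWNED_OVERHEAD))  # 4000.0
```

Under these assumed numbers, a user who trains for 120 hours pays 90.0, and ownership only pays off after 4000 hours of use, which is why occasional or bursty workloads tend to favor renting.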

The accessibility of GPU cloud services is also a major highlight. Users can access GPU cloud services from anywhere in the world with an internet connection. This remote access capability not only facilitates global collaboration and research but also creates possibilities for distributed computing and large-scale data processing.

Security and reliability are also priorities for cloud service providers. They typically offer data security and backup features that help protect user data and trained models, which provides a solid foundation for deep learning research and applications.

In practice, GPU cloud services are widely used for research and development, large-scale training, real-time inference, and other scenarios. Researchers and developers can use them to rapidly iterate on and test new deep learning models, process massive datasets, and serve low-latency inference. These use cases further demonstrate the significant role of GPU cloud services in the field of deep learning.

By providing scalable, high-performance, and easy-to-manage computing resources, GPU cloud services effectively address the computing power bottleneck in deep learning, accelerating the development and application of artificial intelligence technologies.

TensorFlow on GPU Cloud

Installation

Installing TensorFlow on your local machine can be done with a single command:

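pip install tensorflow # Installs the latest version by default

The separate tensorflow-gpu package is deprecated. Since TensorFlow 2.1, the tensorflow package covers both CPU and GPU, and it falls back to the CPU when no usable GPU is found. On Linux, recent releases can also install the matching CUDA libraries through pip:

pip install "tensorflow[and-cuda]" # Installs TensorFlow together with pip-managed CUDA libraries

Verifying the Installation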

import tensorflow as tf

# An empty list means TensorFlow cannot see a GPU
print("Num GPUs Available: ", len(tf.config.list_physical_devices('GPU')))

One-Click Launch

While installing TensorFlow only requires one command, you need to do a lot of preparation beforehand.

For instance, you need to install or verify your Python version, ensure your machine is updated with compatible drivers, and check if CUDA is working correctly. Sounds troublesome, right?
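
The checks listed above can be sketched as a small pre-flight script that uses only the Python standard library. The function name preflight and the default minimum Python version are assumptions made for this example; the supported Python range depends on the TensorFlow release, so check its release notes. Finding nvidia-smi on the PATH only suggests that the NVIDIA driver tools are installed; it does not prove that CUDA works.

```python
import importlib.util
import shutil
import sys


def preflight(min_python=(3, 9)):
    """Report local prerequisites without importing TensorFlow itself."""
    return {
        "python_version": ".".join(map(str, sys.version_info[:3])),
        # min_python is an assumed default, not an official requirement.
        "python_ok": sys.version_info[:2] >= min_python,
        "tensorflow_installed": importlib.util.find_spec("tensorflow") is not None,
        # Presence of the driver utility, not a guarantee that CUDA is usable.
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
    }


report = preflight()
for name, value in report.items():
    print(f"{name}: {value}")
```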

Thanks to the emergence of container technology, developers are liberated from these tedious tasks.

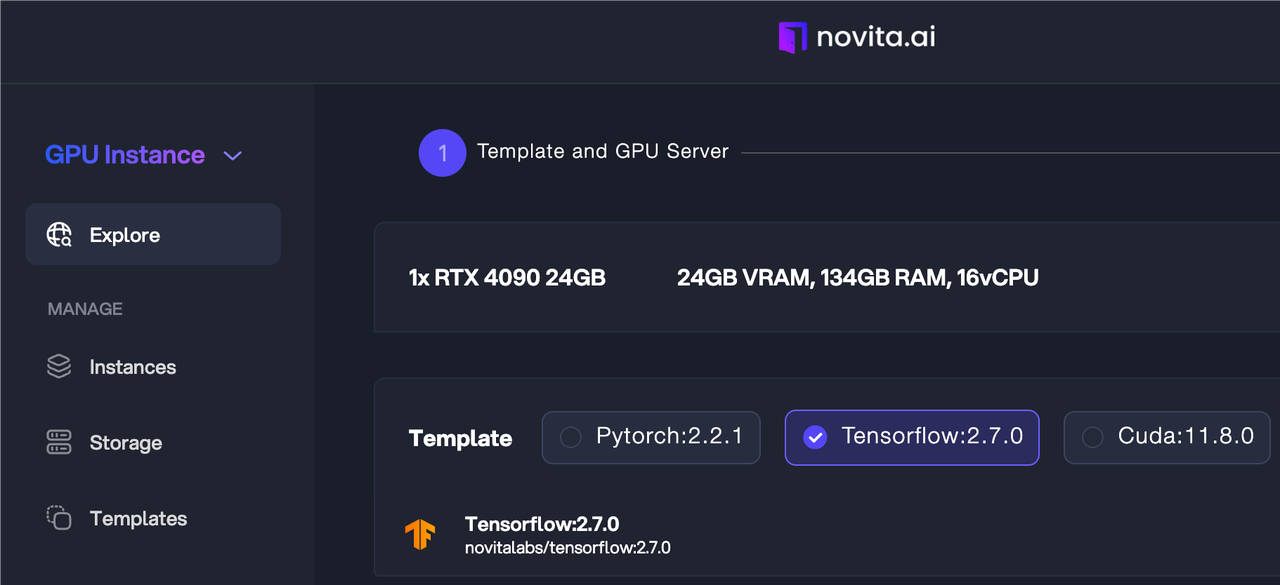

For example, on Novita AI, you can directly choose the TensorFlow template, and in just a few seconds, your required development environment is all set.

Our engineers have already taken care of the setup; all you need to do is focus on your business and leave the rest to us.

Outlook

The advent of cloud computing has provided developers with almost unlimited computing power, which was unimaginable in the past. In recent years, artificial intelligence technology has swept the globe, and more and more systems are trying to leverage AI capabilities to reshape their businesses.

Novita AI is committed to providing developers with one-stop AI solutions, accelerating the arrival of the AI era.

Novita AI is an all-in-one cloud platform that empowers your AI ambitions. It offers integrated APIs, serverless deployment, and GPU instances: the cost-effective tools you need. Eliminate infrastructure overhead, start free, and make your AI vision a reality.
