All You Need to Know about Automatic Chain of Thought Prompting in Large Language Models

Introduction

What is automatic Chain of Thought (Auto-CoT) prompting in large language models? In this blog, we break this question into small pieces. We start with the definition of Chain of Thought (CoT) prompting, then move on to the advantages and development of Auto-CoT. Finally, we discuss why LLM APIs are a core part of applying Auto-CoT. Stay tuned to explore the powerful Auto-CoT!

What Is CoT Prompting?

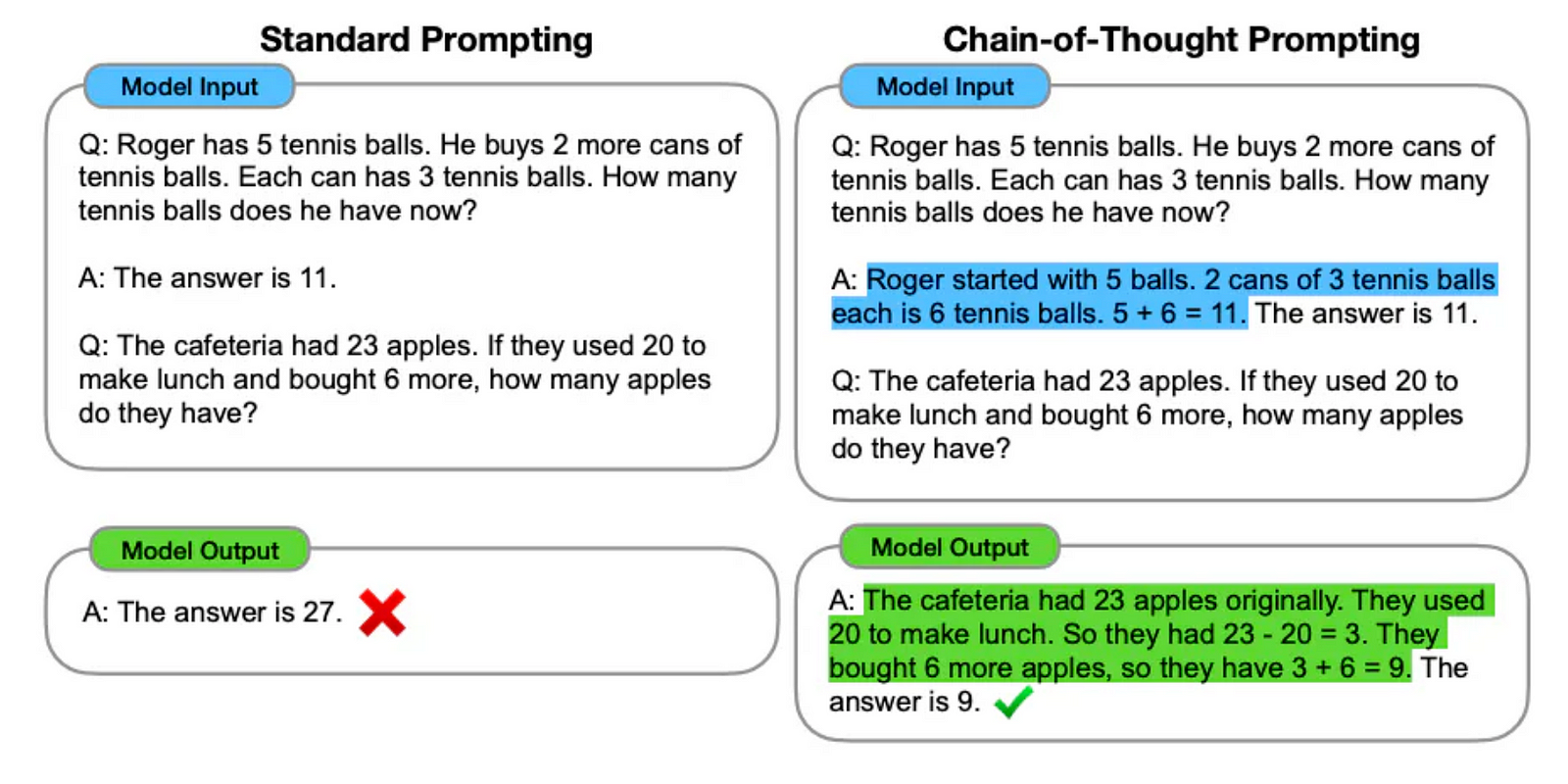

Chain-of-Thought (CoT) Prompting is a technique used to enhance the reasoning capabilities of large language models (LLMs). LLMs, such as GPT-3, have shown remarkable performance on a variety of tasks, including question-answering, text generation, and problem-solving.

However, on many complex reasoning tasks, LLMs may struggle to provide a complete and coherent step-by-step solution. CoT prompting addresses this by getting the language model to generate a “chain of thought”: a sequence of intermediate reasoning steps that lead to the final answer.

The core idea behind CoT prompting is to have the language model explicitly reason through a problem instead of giving a direct answer. There are two common ways to do this: append a trigger phrase such as “Let’s think step by step” to the question (Zero-Shot-CoT), or include a few worked examples whose answers spell out the intermediate steps (few-shot CoT, called Manual-CoT below). CoT prompting can lead to more accurate and explainable outputs, particularly for complex, multi-step tasks.
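To make the difference concrete, here is a minimal Python sketch that builds a direct prompt and a Zero-Shot-CoT prompt for the same question. The “Q:/A:” layout and the helper names are illustrative assumptions; no model is called, so the code only shows what text would be sent.

```python
# Trigger phrase used by Zero-Shot-CoT.
COT_TRIGGER = "Let's think step by step."


def direct_prompt(question: str) -> str:
    """Ask for the answer directly, with no request for intermediate reasoning."""
    return f"Q: {question}\nA: The answer is"


def zero_shot_cot_prompt(question: str) -> str:
    """Append the trigger so the model writes out its reasoning before answering."""
    return f"Q: {question}\nA: {COT_TRIGGER}"


if __name__ == "__main__":
    q = "A juggler has 16 balls and half of them are golf balls. How many golf balls are there?"
    print(direct_prompt(q))
    print()
    print(zero_shot_cot_prompt(q))
```

The only change between the two prompts is the trailing trigger, which is what makes Zero-Shot-CoT so cheap to apply.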

Why Do We Need Auto CoT?

The key issue is that there are two main approaches to chain-of-thought (CoT) prompting, and both have significant drawbacks.

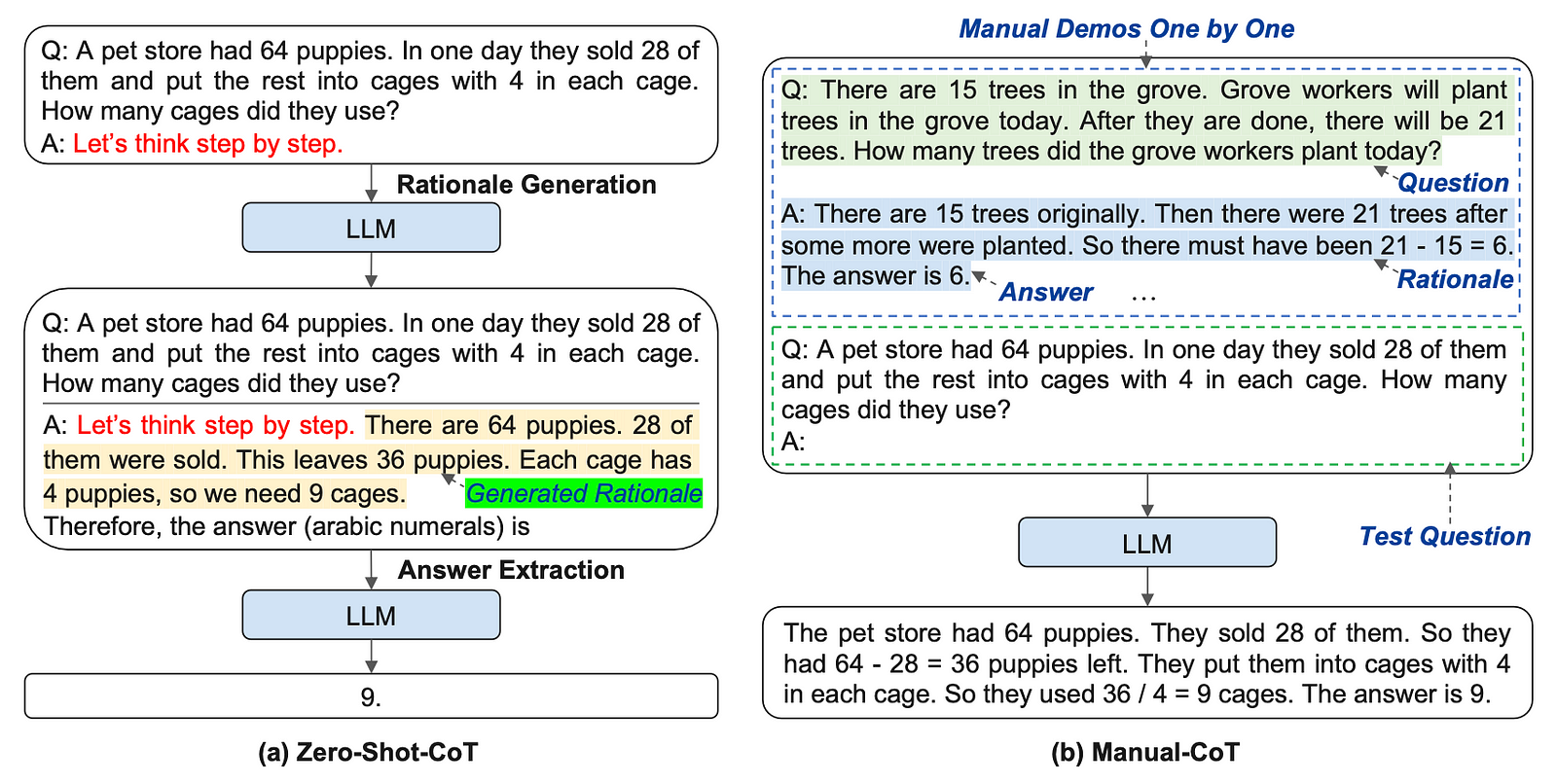

Limitations of Zero-Shot-CoT

In this approach, you simply give the language model (LM) a question and ask it to “think step by step” to arrive at the answer. The advantage is that it is very easy to use: you don’t need to provide any additional information or examples. The big downside is that the LM’s step-by-step reasoning is often flawed or contains mistakes, so the final answer may not be reliable.

Limitations of Manual-CoT

This approach involves manually writing detailed examples that show the LM how to break a problem down into logical steps and arrive at the correct answer. Given these carefully crafted examples, the LM can apply the same pattern to new questions, and its reasoning becomes more robust and accurate as a result. The major drawback is that writing these examples is time-consuming and requires considerable human effort and expertise, so the approach does not scale well.
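For comparison, the sketch below builds a few-shot (Manual-CoT) prompt by prepending hand-written demonstrations whose answers spell out the reasoning. The Demo class, the helper name, and the sample demonstration are hypothetical and only illustrate the format; in practice each demonstration is written by a person, which is exactly the cost described above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Demo:
    """One hand-written demonstration: question, reasoning steps, final answer."""
    question: str
    rationale: str
    answer: str


def manual_cot_prompt(demos: List[Demo], question: str) -> str:
    """Concatenate the demonstrations, then append the new question."""
    blocks = [
        f"Q: {d.question}\nA: {d.rationale} The answer is {d.answer}."
        for d in demos
    ]
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Every demonstration here had to be written by a person; this is the
# labor-intensive part that Auto-CoT aims to automate.
DEMOS = [
    Demo(
        question="There are 3 cars in the parking lot and 2 more arrive. How many cars are there?",
        rationale="There are 3 cars at first. 2 more arrive, so 3 + 2 = 5.",
        answer="5",
    ),
]

if __name__ == "__main__":
    print(manual_cot_prompt(DEMOS, "Tom has 4 apples and buys 3 more. How many apples does he have?"))
```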

Overcoming Limitations with Auto-CoT

In summary, Zero-Shot-CoT is easy but unreliable, while Manual-CoT is more robust but labor-intensive. This is the key challenge that Zhang et al. set out to address with their proposed Auto-CoT approach.

The core idea of Auto-CoT is to generate the demonstrations automatically, without manual human effort. This could combine the benefits of both existing approaches: reasoning guided by demonstrations, as in Manual-CoT, at the low cost of Zero-Shot-CoT.

How Was Auto-CoT Developed?

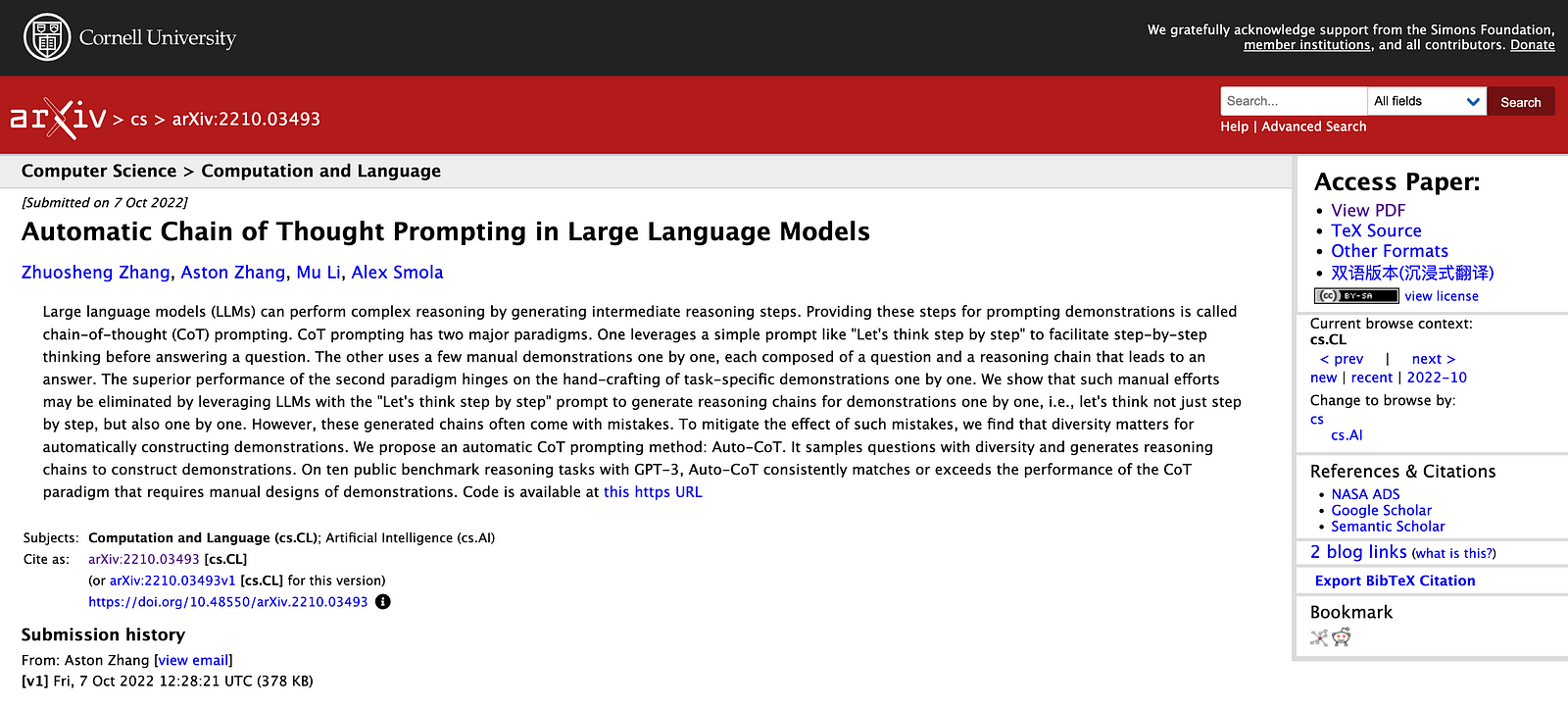

In this section, we explore the details of the paper “Automatic Chain of Thought Prompting in Large Language Models” by Zhuosheng Zhang, Aston Zhang, Mu Li, and Alex Smola, first released in 2022 and later published at ICLR 2023. If you are not interested in the research details, feel free to skip to the next section.

Proposed Approach

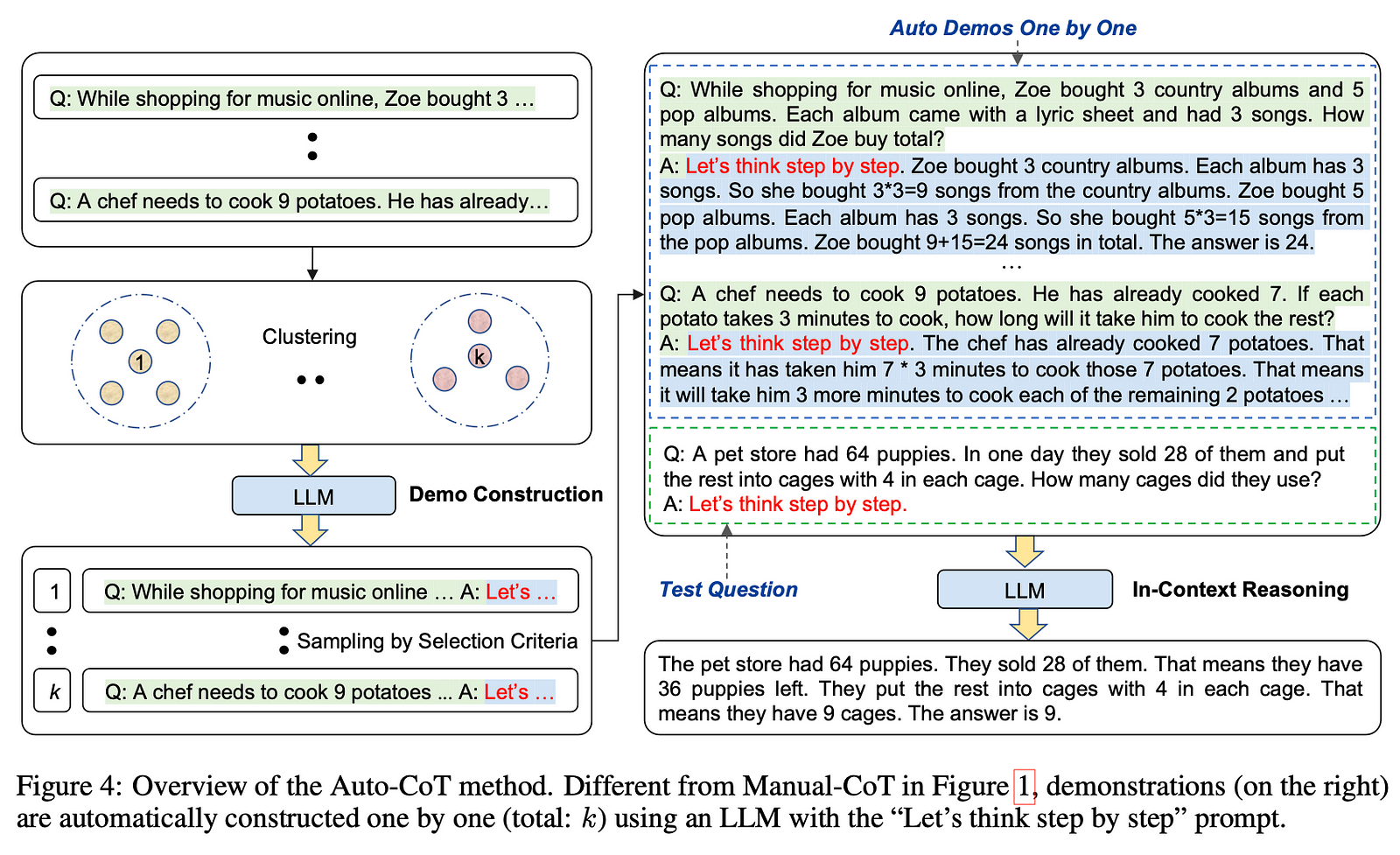

To overcome these limitations of existing CoT approaches, the authors propose an “Auto-CoT” paradigm that automatically constructs demonstrations for CoT prompting.

The key steps are:

- Leveraging LLMs with the “Let’s think step by step” prompt to generate reasoning chains for demonstration questions.

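- Recognizing that the generated reasoning chains may contain mistakes, the authors focus on ensuring diversity in the selected demonstration questions. Zero-Shot-CoT errors tend to concentrate among similar questions, so drawing demonstrations from different kinds of questions limits how much any single mistake can mislead the model.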

- The authors develop a two-step approach to automatically construct demonstrations:

a. Partition the dataset questions into clusters based on similarity.

b. Select a representative question from each cluster and generate its reasoning chain using Zero-Shot-CoT.

Evaluation

The authors evaluate the Auto-CoT approach with GPT-3 on ten benchmark reasoning tasks, including arithmetic, commonsense, and symbolic reasoning. They compare the performance against the Zero-Shot-CoT and Manual-CoT paradigms.

Key Findings

The results show that Auto-CoT consistently matches or exceeds the performance of the Manual-CoT paradigm, which requires manually designed demonstrations. This indicates that LLMs can perform effective CoT reasoning without hand-crafted demonstrations.

How Does Auto CoT Work?

The key idea behind Auto-CoT is to automatically generate the demonstration examples that the language model (LM) can use for chain-of-thought (CoT) prompting, rather than relying on manually crafted demonstrations.

Here’s how the Auto-CoT approach works, step-by-step:

Step 1: Question Clustering

- The first step is to take the questions from the target dataset (for example, the benchmark questions the LM will be evaluated on), encode each one as a sentence embedding (the paper uses Sentence-BERT), and group them into a small number of clusters with k-means.

- This clustering helps ensure the demonstration questions cover diverse types of problems, rather than being too similar.
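In the paper, each question is encoded with Sentence-BERT and the resulting vectors are clustered with k-means. The dependency-free sketch below covers only the clustering part: hypothetical 2-D vectors stand in for real sentence embeddings, and a deterministic farthest-point initialization (an assumption made here so the output is reproducible) replaces random seeding.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def dist(a: Vector, b: Vector) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def kmeans(vectors: List[Vector], k: int, iters: int = 100) -> Tuple[List[int], List[List[float]]]:
    """Lloyd's k-means; returns (cluster id per vector, final centers)."""
    # Farthest-point initialization: start from the first vector, then keep
    # adding the vector that is farthest from all centers chosen so far.
    centers = [list(vectors[0])]
    while len(centers) < k:
        far = max(vectors, key=lambda v: min(dist(v, c) for c in centers))
        centers.append(list(far))
    labels: List[int] = []
    for _ in range(iters):
        # Assignment step: each question joins its nearest center.
        new_labels = [min(range(k), key=lambda c: dist(v, centers[c])) for v in vectors]
        if new_labels == labels:
            break  # assignments stopped changing, so the clustering has converged
        labels = new_labels
        # Update step: move each center to the mean of its members.
        for c in range(k):
            members = [v for v, lab in zip(vectors, labels) if lab == c]
            if members:  # keep the previous center if a cluster is empty
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centers


if __name__ == "__main__":
    questions = [
        "Tom has 3 apples and buys 2 more. How many apples does he have?",
        "A box holds 12 eggs. How many eggs are in 3 boxes?",
        "Sara reads 5 pages a day. How many pages does she read in a week?",
        "Take the last letters of 'Elon Musk' and concatenate them.",
        "Take the last letters of 'Larry Page' and concatenate them.",
        "Take the last letters of 'Sergey Brin' and concatenate them.",
    ]
    # Hypothetical 2-D stand-ins for sentence embeddings of the questions above.
    vectors = [(0.0, 0.1), (0.1, 0.0), (0.05, 0.05), (5.0, 5.1), (5.1, 5.0), (4.9, 5.0)]
    labels, _ = kmeans(vectors, k=2)
    for label, q in zip(labels, questions):
        print(label, q)
```

In a real pipeline you would replace the toy vectors with embeddings from a sentence encoder and set k to the number of demonstrations you want, since each cluster contributes one.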

Step 2: Demonstration Generation

- For each cluster of questions, Auto-CoT selects a representative question, preferring questions that lie close to the cluster center.

- It then uses the “Let’s think step by step” prompt to ask the LM to generate a reasoning chain for that representative question.

- This reasoning chain, consisting of the intermediate steps and the final answer, becomes the demonstration example for that cluster.
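The paper describes trying the questions in each cluster in order of their distance to the cluster center and keeping the first one whose question and generated rationale pass simple length checks (no more than 60 tokens and 5 reasoning steps). The sketch below follows that idea under stated assumptions: llm is a hypothetical callable wrapping whatever model you use, tokens are approximated by whitespace-separated words, and reasoning steps by non-empty lines.

```python
from typing import Callable, Dict, Optional, Sequence

# Hypothetical stand-in for any model call: prompt in, generated text out.
LLM = Callable[[str], str]
TRIGGER = "Let's think step by step."


def zero_shot_cot(question: str, llm: LLM) -> str:
    """Generate a reasoning chain for one question with the Zero-Shot-CoT trigger."""
    return llm(f"Q: {question}\nA: {TRIGGER}").strip()


def pick_demo(
    questions: Sequence[str],
    distances: Sequence[float],
    llm: LLM,
    max_question_tokens: int = 60,
    max_steps: int = 5,
) -> Optional[Dict[str, str]]:
    """Return one demonstration for a cluster, or None if no candidate qualifies.

    Candidates are tried from nearest to farthest from the cluster center.
    """
    for _, q in sorted(zip(distances, questions)):
        # Whitespace-separated words approximate tokens here.
        if len(q.split()) > max_question_tokens:
            continue
        rationale = zero_shot_cot(q, llm)
        # Assumes one reasoning step per non-empty line of the output.
        steps = [line for line in rationale.splitlines() if line.strip()]
        if len(steps) <= max_steps:
            return {"question": q, "rationale": f"{TRIGGER} {rationale}"}
    return None


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:
        # Canned reply so the sketch runs without any API access.
        return "Tom starts with 3 apples.\nHe buys 2 more, so 3 + 2 = 5.\nThe answer is 5."

    cluster = [
        "A box holds 12 eggs. How many eggs are in 3 boxes?",
        "Tom has 3 apples and buys 2 more. How many apples does he have?",
    ]
    print(pick_demo(cluster, [0.9, 0.4], fake_llm))
```

Because the rationale is machine-generated, it may still be wrong; the length checks only filter out long, rambling chains, which is why the diversity from clustering matters.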

Step 3: Prompting the LM

- When evaluating the LM on a new test question, Auto-CoT provides the LM with the set of automatically generated demonstration examples.

- The LM can then use these demonstrations to guide its own step-by-step reasoning process to arrive at the answer for the test question.
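At inference time, the demonstrations are simply concatenated in front of the new question. A minimal sketch, assuming each demonstration is a dict with hypothetical question and rationale keys, and that the test question is also followed by the Zero-Shot-CoT trigger:

```python
from typing import Dict, List

TRIGGER = "Let's think step by step."


def auto_cot_prompt(demos: List[Dict[str, str]], test_question: str) -> str:
    """Concatenate the auto-generated demonstrations, then append the test question."""
    parts = [f"Q: {d['question']}\nA: {d['rationale']}" for d in demos]
    # Ending with the trigger (an assumption of this sketch) invites the model
    # to continue with its own step-by-step reasoning, as the demos do.
    parts.append(f"Q: {test_question}\nA: {TRIGGER}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    demos = [
        {
            "question": "Tom has 3 apples and buys 2 more. How many apples does he have?",
            "rationale": "Let's think step by step. Tom starts with 3 apples. 3 + 2 = 5. The answer is 5.",
        },
    ]
    print(auto_cot_prompt(demos, "A box holds 12 eggs. How many eggs are in 3 boxes?"))
```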

How Can I Use Auto CoT?

Requirements:

- Python version 3.8 or later

Installation:

- Install the required PyTorch and torchtext packages using the specified versions and the PyTorch LTS wheel index:

pip install torch==1.8.2+cu111 torchtext==0.9.2 -f https://download.pytorch.org/whl/lts/1.8/torch_lts.html

- Install the other requirements by running:

pip install -r requirements.txt

Datasets:

Download the datasets and the Zero-Shot-CoT prediction logs from the following directories of the zero_shot_cot GitHub repository:

- https://github.com/kojima-takeshi188/zero_shot_cot/tree/main/dataset

- https://github.com/kojima-takeshi188/zero_shot_cot/tree/main/log

Quick Start:

See the try_cot.ipynb notebook for a quick start guide.

Instructions:

Construct Demos:

- Run the following command to construct demos for the “multiarith” task:

python run_demo.py --task multiarith --pred_file log/multiarith_zero_shot_cot.log --demo_save_dir demos/multiarith

Run Inference:

- Run the following command to run inference on the “multiarith” dataset:

python run_inference.py --dataset multiarith --demo_path demos/multiarith --output_dir experiment/multiarith

Citing Auto-CoT:

If you use Auto-CoT in your work, please cite the following paper:

@inproceedings{zhang2023automatic,
  title={Automatic Chain of Thought Prompting in Large Language Models},
  author={Zhang, Zhuosheng and Zhang, Aston and Li, Mu and Smola, Alex},
  booktitle={The Eleventh International Conference on Learning Representations (ICLR 2023)},
  year={2023}
}

LLM API as a Core Part of Applying Auto-CoT

What Are the Benefits of Combining Auto-CoT With LLM APIs?

1. Access to Powerful Language Models:

- Auto-CoT relies on the capabilities of large language models to generate step-by-step reasoning chains and produce accurate outputs.

- By integrating LLM APIs, researchers and developers can leverage the latest and most powerful language models, such as GPT-3, Megatron-LLM, or InstructGPT, to power the Auto-CoT system.

2. Flexibility and Customization:

- Different language models may have varying strengths, biases, and capabilities. Integrating LLM APIs allows users to experiment with and compare the performance of different models for their specific tasks and applications.

- This flexibility lets researchers pick the model that best fits their needs and, where the provider supports it, fine-tune or otherwise customize it, improving the overall effectiveness of the Auto-CoT system.

3. Scalability and Deployment:

- LLM APIs often provide scalable and reliable infrastructure for serving and deploying language models, allowing Auto-CoT systems to handle increased workloads and serve a larger user base.

- By leveraging the scaling capabilities of LLM APIs, researchers and developers can more easily deploy and maintain the Auto-CoT system in production environments.

4. Continuous Model Improvements:

- Language models are rapidly evolving, with new and improved versions being released frequently. Integrating LLM APIs enables Auto-CoT systems to benefit from these advancements and stay up-to-date with the latest language model capabilities.

- This ensures that the Auto-CoT system can continue to deliver high-quality results and maintain its competitiveness as the field of language models progresses.

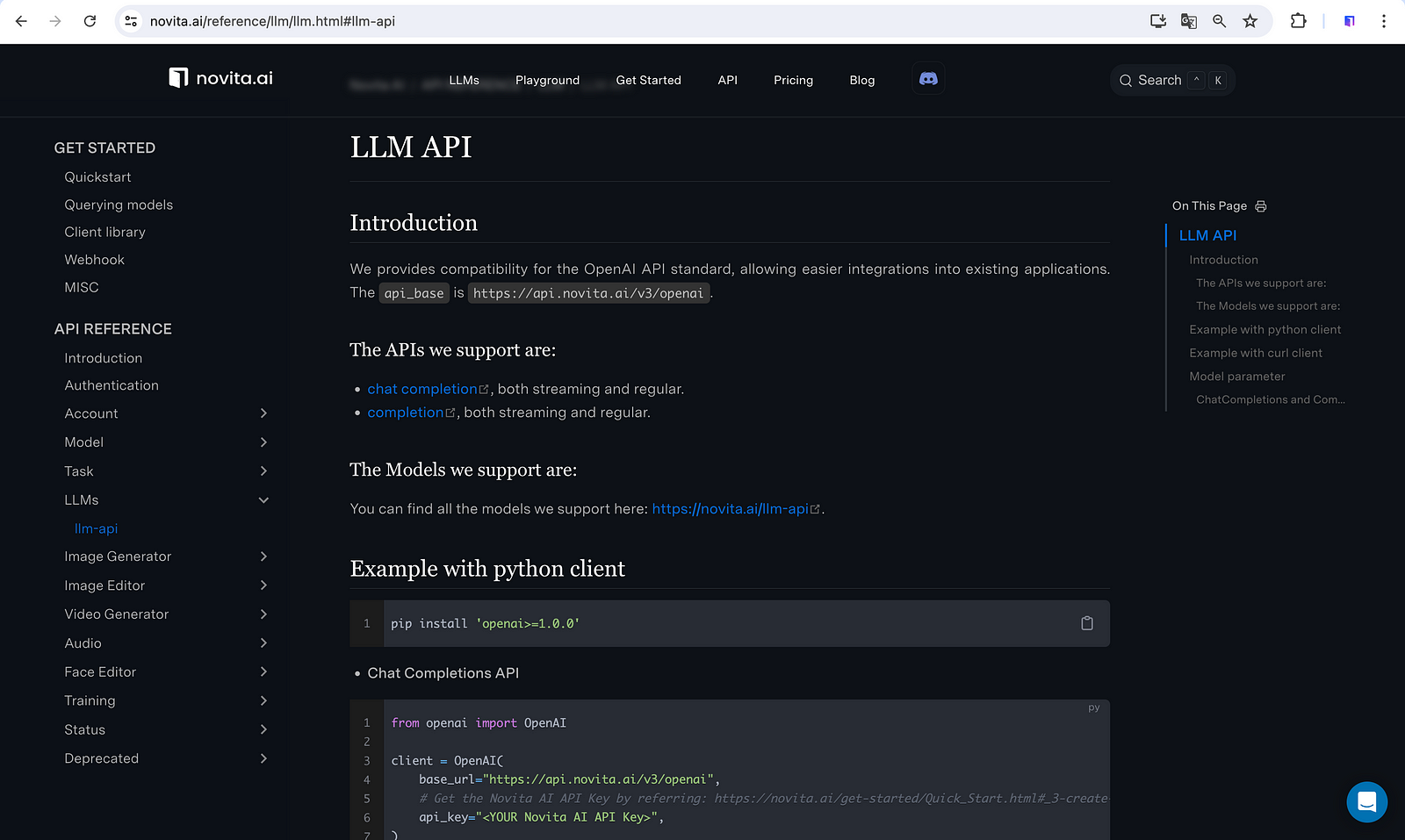

How Do I Integrate an LLM API into My Project?

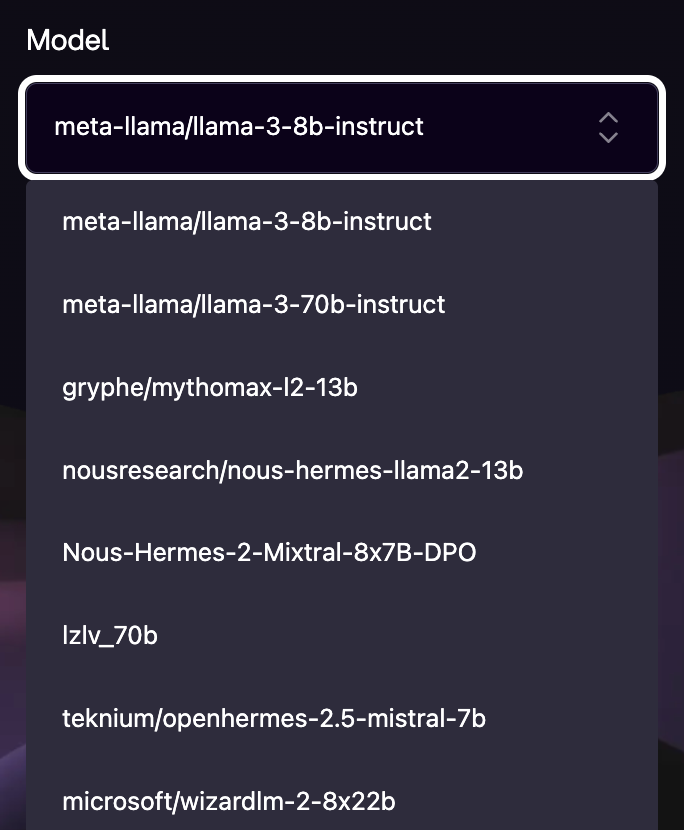

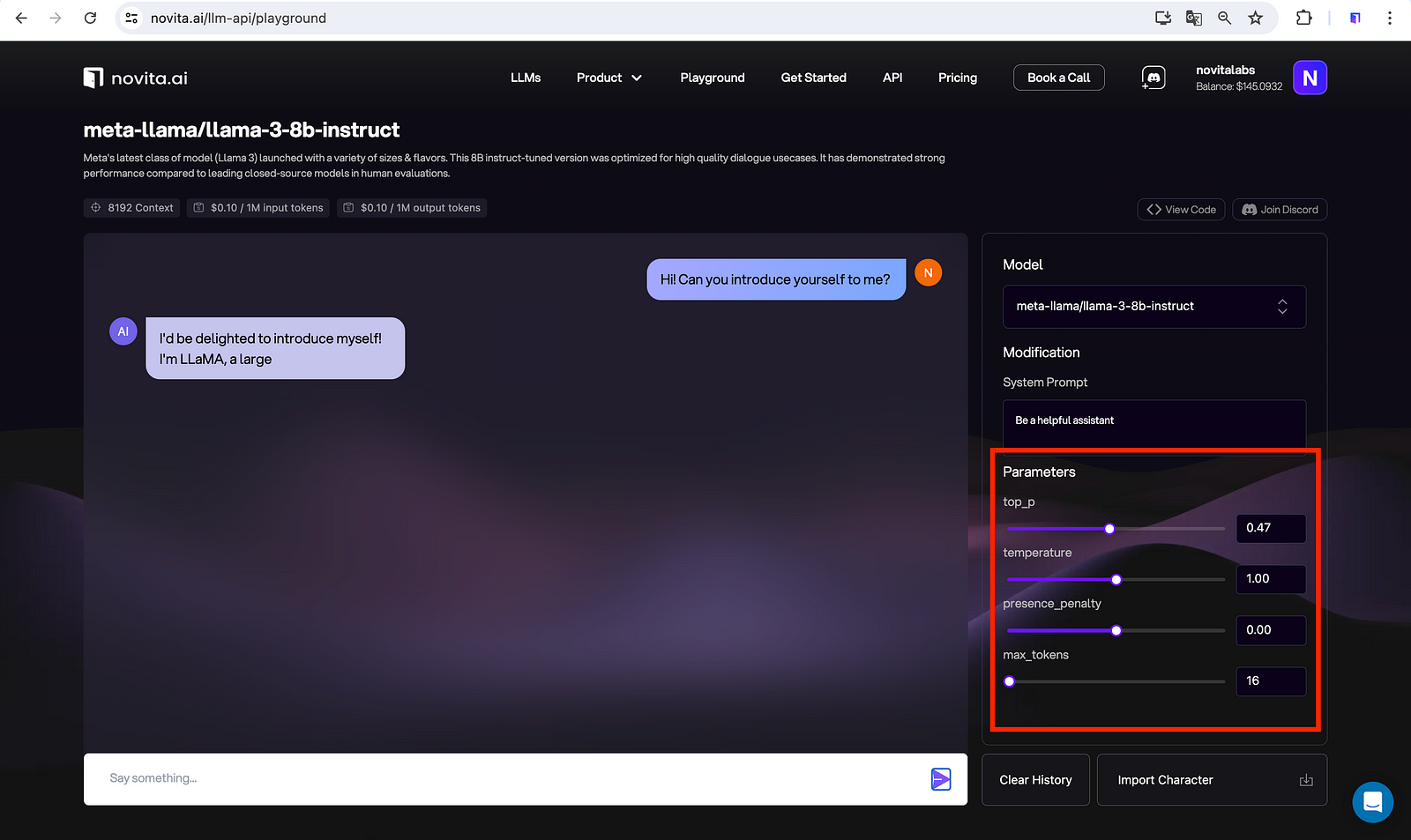

Novita AI provides an LLM API with many models to call, including the newly released llama-3-8b and llama-3-70b. You can try different models and compare their performance for free on our Playground before integrating our LLM API.

Moreover, to cater to customized needs, you can adjust key parameters to optimize the model’s outputs for your specific application requirements:

- temperature: controls the randomness and exploration of the model’s output.

- top_p: an alternative to sampling with temperature, called nucleus sampling, in which the model samples only from the smallest set of tokens whose cumulative probability reaches top_p.

- presence_penalty: penalizes tokens that have already appeared in the text, encouraging the model to move on to new content rather than repeat itself.

- maximum tokens: sets the maximum length of the model’s generated output.

This level of tailoring lets you fully combine the capabilities of the LLM with your Auto-CoT system.
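As an illustration, here is how those parameters might appear in a request body. The sketch assumes an OpenAI-style chat-completions schema (messages, temperature, top_p, presence_penalty, max_tokens) and uses a hypothetical model id; check the provider’s API reference for the exact endpoint, field names, and model list before sending anything. Nothing is sent over the network here.

```python
import json
from typing import Any, Dict


def build_chat_request(
    prompt: str,
    model: str = "llama-3-8b",  # hypothetical model id; use one from your provider's list
    temperature: float = 0.0,
    top_p: float = 1.0,
    presence_penalty: float = 0.0,
    max_tokens: int = 512,
) -> Dict[str, Any]:
    """Build an OpenAI-style chat-completions request body (schema assumed)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # low values make output closer to greedy decoding
        "top_p": top_p,  # 1.0 leaves nucleus sampling effectively off
        "presence_penalty": presence_penalty,
        "max_tokens": max_tokens,  # leave room for multi-step reasoning chains
    }


if __name__ == "__main__":
    body = build_chat_request("Q: What is 2 + 3?\nA: Let's think step by step.")
    print(json.dumps(body, indent=2))
```

A temperature of 0.0 is this sketch’s assumption, chosen because repeatable reasoning chains are convenient when generating demonstrations; raise it if you want more varied outputs.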

Visit our website for more information about the LLM API, including integration code samples, pricing, and other features.

Conclusion

In this blog, we explored the concept of Chain of Thought (CoT) Prompting and the need for an automated approach called Auto-CoT. While existing CoT methods have limitations, the Auto-CoT approach aims to automatically generate demonstration examples to guide language models in step-by-step reasoning, without requiring manual effort. We discussed the key steps of Auto-CoT, including question clustering and demonstration generation. Finally, we highlighted how integrating LLM APIs can provide powerful and flexible language models to power the Auto-CoT system, leading to improved performance, scalability, and continuous model improvements. Overall, Auto-CoT represents an exciting development in enhancing the reasoning capabilities of large language models.

Novita AI is the one-stop platform for limitless creativity, giving you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, its cheap pay-as-you-go pricing frees you from GPU maintenance hassles while you build your own products. Try it for free.