AI Answer Questions Made Easy: Practical Tips for Success

Introduction

Have you ever wondered how AI can understand and answer questions just like a human? What technologies make this possible? How can we evaluate how well AI answers questions? Which techniques can improve its performance? And last but not least, which LLM APIs can help you put AI question answering to work?

In this blog, we’ll dive into these questions one by one. Get ready to uncover the secrets behind AI’s ability to engage in meaningful dialogues and provide insightful responses.

Understanding AI Question Answering

Answering Questions: One Major Ability of AI

Answering questions is a core capability of artificial intelligence, particularly in the field of natural language processing (NLP). NLP allows AI systems to understand, interpret, and generate human language, enabling them to engage in meaningful dialogues and provide informative responses to a wide range of questions.

Beyond question answering, AI systems have a diverse set of abilities that rely on similar machine learning and deep learning mechanisms to process other types of data. For example, deep learning techniques closely related to those used to understand and answer textual questions are also applied to audio signals, as in voice assistants and speech recognition systems.

Similarly, the computer vision and image processing capabilities of AI rely on deep learning algorithms and neural networks that can identify patterns, classify objects, and even generate captions or descriptions of the contents of an image. These abilities have enabled AI systems to excel in tasks such as image recognition, object detection, and scene understanding.

The Evolution of AI in Answering Services

In the early days, question answering systems relied on predetermined responses and limited knowledge bases, often providing scripted or narrow responses to user queries.

As AI technology has advanced, however, this has changed. By leveraging large language models, deep learning algorithms, and expansive knowledge bases, modern AI-powered answering services can draw on vast amounts of data, from structured databases to unstructured text, to understand the context and intent behind a user’s question. They then formulate comprehensive responses by synthesizing the relevant information and presenting it clearly and coherently.

How AI Processes and Understands Natural Language

Explanation of Neural Networks

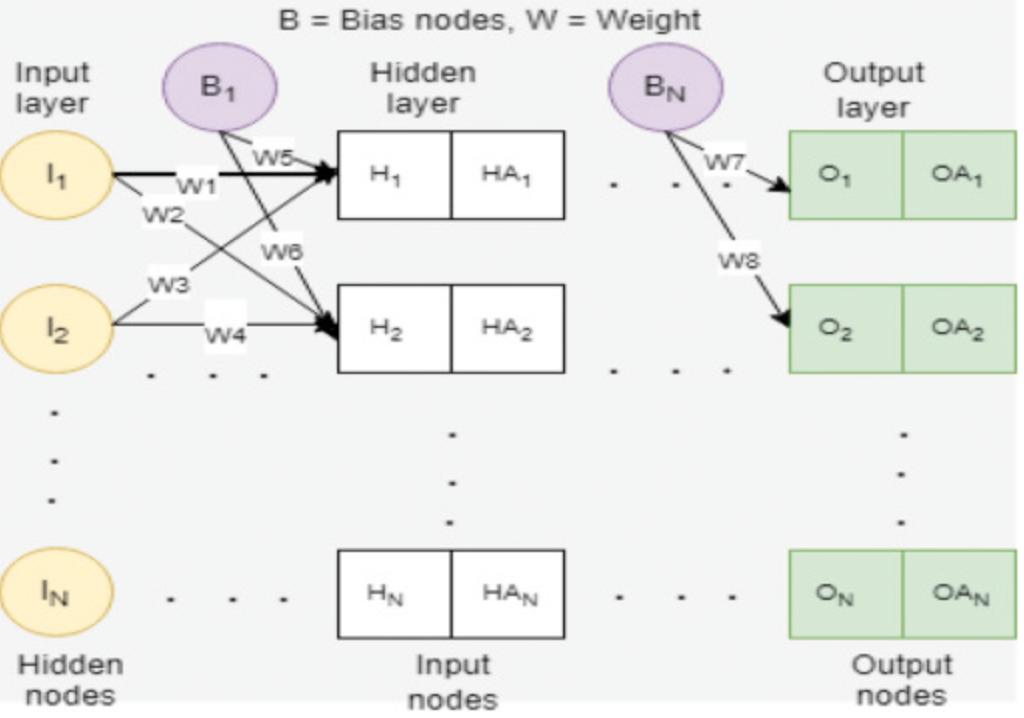

At the core of an AI system’s ability to comprehend and respond to natural language lies a complex set of machine learning techniques and architectures. Central to this process are neural networks, which are inspired by the biological structure of the human brain and its interconnected neurons.

Neural networks, with their layers of interconnected nodes, are capable of learning to recognize patterns and extract meaningful features from large datasets of natural language, such as text corpora and conversational data. As the network is trained on this data, it develops an increasingly sophisticated understanding of the nuances of human language, including grammatical structures, semantic relationships, and contextual cues.
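To make the idea of “layers of interconnected nodes” concrete, here is a minimal sketch of a forward pass through a tiny fully connected network in plain Python. The weights are arbitrary illustrative values rather than trained parameters, and training itself (adjusting the weights from data, typically by backpropagation) is left out; real language models stack many far larger layers.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: each output node is a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def relu(values):
    """Non-linearity applied after the hidden layer."""
    return [max(0.0, v) for v in values]

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

# Illustrative, untrained parameters: 3 inputs -> 2 hidden nodes -> 1 output node.
W1 = [[0.5, -0.2, 0.1],
      [0.3, 0.8, -0.5]]
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0]]
b2 = [0.2]

def forward(x):
    """Pass an input vector through both layers and squash the result into (0, 1)."""
    hidden = relu(dense(x, W1, b1))
    return sigmoid(dense(hidden, W2, b2)[0])

print(forward([1.0, 0.5, -1.0]))
```

Training would repeatedly nudge `W1`, `b1`, `W2`, and `b2` so that outputs like this one move closer to the desired targets.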

Explanation of Transformer Architecture

A particularly influential advance in natural language processing (NLP) has been the transformer architecture, which has revolutionized the way AI systems process and comprehend language. Unlike traditional recurrent neural networks, which process text one token at a time, transformers relate every position in a sequence directly to every other position, so they capture long-range dependencies and relationships within text more effectively and build a more holistic, contextual understanding of language.

The transformer architecture is characterized by its use of attention mechanisms, which enable the model to focus on the most relevant parts of the input when generating an output. This allows for a more dynamic and adaptive processing of language, where the model can prioritize and weigh different elements of the text based on their significance to the task at hand.
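The core attention computation in the transformer is scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V: each query vector is compared with every key vector, the scores are turned into weights with a softmax, and the output is the weighted average of the value vectors. The sketch below is a single-head version in plain Python; it omits the learned projections, multiple heads, and masking that real transformers add, and the toy vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention over lists of vectors (no masking, no learned projections)."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # How relevant is each key to this query? Scale by sqrt(d_k) to keep scores moderate.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs

# Self-attention over three toy 2-dimensional "token" vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(tokens, tokens, tokens))
```

A query that closely matches one key puts almost all of its weight on that key’s value, which is how the model “focuses” on the most relevant parts of the input.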

How to Evaluate AI Answering Questions

Knowledge and Language Understanding

- Massive Multitask Language Understanding (MMLU): Measures general knowledge across 57 different subjects.

- AI2 Reasoning Challenge (ARC): Tests language models on grade-school science questions requiring reasoning.

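- General Language Understanding Evaluation (GLUE): Assesses language understanding across a collection of nine sentence and sentence-pair tasks, such as sentiment analysis, paraphrase detection, and natural language inference.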

- Natural Questions: Evaluates the ability to find accurate answers in Wikipedia articles to real questions that people asked Google Search.
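Multiple-choice benchmarks such as MMLU and ARC are usually reported as accuracy: the fraction of questions where the model’s chosen option matches the answer key. Below is a minimal sketch of that scoring step with made-up predictions; the function name is hypothetical, and real evaluation harnesses also have to extract the chosen letter from free-form output or compare the model’s likelihood of each option.

```python
def multiple_choice_accuracy(predictions, gold):
    """Fraction of questions where the predicted option letter matches the gold letter."""
    if not gold or len(predictions) != len(gold):
        raise ValueError("predictions and gold must be non-empty and the same length")
    correct = sum(p.strip().upper() == g.strip().upper() for p, g in zip(predictions, gold))
    return correct / len(gold)

# Hypothetical model answers and answer key for four MMLU-style questions (options A-D).
preds = ["B", "c", "A", "D"]
answers = ["B", "C", "D", "D"]
print(multiple_choice_accuracy(preds, answers))  # 0.75
```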

Reasoning Capabilities

- GSM8K: Tests the language model’s ability to work through multistep grade-school math word problems.

- Discrete Reasoning Over Paragraphs (DROP): Evaluates the ability to understand complex texts and perform discrete operations.

- Counterfactual Reasoning Assessment (CRASS): Assesses the language model’s counterfactual reasoning abilities.

- Large-scale ReAding Comprehension Dataset From Examinations (RACE): Tests understanding of complex reading material and ability to answer examination-level questions.

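- BIG-Bench Hard (BBH): A suite of 23 challenging BIG-Bench tasks on which earlier language models fell short of average human raters; used to probe the upper limits of AI capabilities in complex reasoning and problem-solving.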

- AGIEval: Assesses language models’ reasoning abilities and problem-solving skills across academic and professional standardized tests.

- BoolQ: Tests the ability to answer naturally occurring yes/no questions by inferring the answer from an accompanying passage.
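GSM8K reference solutions end with a line of the form “#### 72” holding the final answer, so a common way to score a model is exact match on that number. The sketch below is one possible implementation, not the official scorer: the helper names are hypothetical, and falling back to the last number in the model’s output is a heuristic assumption (many setups instead instruct the model to use a fixed answer format).

```python
import re

# A number such as 72, -3, 1,234 or 2.5 (no capturing groups, so findall returns whole matches).
NUMBER = r"-?[\d,]*\.?\d+"

def extract_final_number(text):
    """Number after a '####' marker if present, otherwise the last number in the text."""
    marked = re.search(r"####\s*(" + NUMBER + ")", text)
    if marked:
        candidate = marked.group(1)
    else:
        numbers = re.findall(NUMBER, text)
        if not numbers:
            return None
        candidate = numbers[-1]
    return float(candidate.replace(",", ""))

def gsm8k_exact_match(model_output, reference_solution):
    """True when the model's final number equals the reference's final answer."""
    predicted = extract_final_number(model_output)
    gold = extract_final_number(reference_solution)
    return predicted is not None and gold is not None and abs(predicted - gold) < 1e-9

# Hypothetical reference solution and model outputs.
reference = "She buys 4 packs of 18 pencils: 4 * 18 = 72.\n#### 72"
print(gsm8k_exact_match("4 packs times 18 is 72, so she has 72 pencils.", reference))
print(gsm8k_exact_match("She has 70 pencils.", reference))
```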

Multi-Turn Open-Ended Conversations

- MT-bench: Evaluates the language model’s performance in multi-turn open-ended conversations.

- Question Answering in Context (QuAC): Assesses the ability to engage in contextual question-answering.

Grounding and Abstractive Summarization

- Ambient Clinical Intelligence Benchmark (ACI-BENCH): Evaluates the language model’s performance in medical applications, specifically generating clinical visit notes from doctor-patient conversations.

- Microsoft MAchine Reading COmprehension Dataset (MS MARCO): Assesses the ability to comprehend and summarize web-based information, using real Bing search queries and passages from web documents.

- Query-based Multi-domain Meeting Summarization (QMSum): Tests the language model’s capacity to summarize multi-domain meeting conversations.

- Physical Interaction: Question Answering (PIQA): Evaluates the language model’s physical commonsense by asking it to pick the more sensible of two solutions for achieving an everyday goal.

Content Moderation and Narrative Control

- ToxiGen: Assesses the language model’s ability to generate non-toxic content.

- Helpfulness, Honesty, Harmlessness (HHH): Evaluates the language model’s safety and reliability in providing helpful and honest responses.

- TruthfulQA: Tests the language model’s truthfulness and ability to avoid generating false information.

- Responsible AI (RAI): Assesses the language model’s adherence to principles of responsible and ethical AI.

Coding Capabilities

- CodeXGLUE: Evaluates the language model’s coding and programming abilities.

- HumanEval: Tests the language model’s capacity to solve 164 hand-written Python programming problems, each checked by unit tests.

- Mostly Basic Python Problems (MBPP): Assesses the language model’s ability to write basic Python code for short, entry-level programming tasks.
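Coding benchmarks such as HumanEval and MBPP score a model by running its generated code against unit tests. The HumanEval paper (Chen et al. 2021) reports pass@k, the probability that at least one of k samples passes, using an unbiased estimator: generate n ≥ k samples per problem, count the c that pass, and compute 1 − C(n−c, k) / C(n, k). The sketch below implements that formula with `math.comb` (Python 3.8+); the per-problem counts in the example are made up.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k for one problem: n samples generated, c of them passed the unit tests."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every possible set of k samples contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_pass_at_k(results, k):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical results for three problems, 10 samples each.
results = [(10, 0), (10, 3), (10, 10)]
print(benchmark_pass_at_k(results, k=1))
print(benchmark_pass_at_k(results, k=5))
```

Generating more samples than k and applying the estimator gives a lower-variance score than literally drawing k samples once.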

Practical Tips for Interacting with AI Answering Systems

General Tips for Prompts

- Start with Basics: Begin with straightforward prompts and progressively add complexity as you refine your approach for better outcomes.

- Use Directives: Frame your prompts with clear commands to guide the AI in the desired action, such as writing, classifying, or summarizing. Employ separators for clarity between instructions and context.

- Be Descriptive: Provide detailed and specific instructions to help the AI understand the expected result or style of generation you’re aiming for.

- Precision Over Cleverness: Opt for clear and direct prompts to avoid ambiguity and ensure the message is effectively communicated to the AI.

- Focus on Affirmative Actions: Instead of stating what to avoid, specify what actions to take to elicit the best responses from the AI.

- Include Examples: Examples within prompts can be instrumental in guiding the AI to produce the format you’re looking for.

- Iterate and Experiment: Continuously test and adjust your prompts to optimize them for your specific applications.
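Several of these tips can be combined into a reusable template. The sketch below uses a hypothetical helper, `build_prompt` (not from any library): it puts the directive first, states the desired output format affirmatively, optionally includes examples, and separates each part with “###” headers, which is one common separator convention rather than a requirement.

```python
def build_prompt(instruction, context, examples=(), output_format=None):
    """Assemble a prompt with a clear directive, optional examples, and ### separators."""
    sections = [f"### Instruction ###\n{instruction}"]
    if output_format:
        sections.append(f"### Output format ###\n{output_format}")
    for i, (example_input, example_output) in enumerate(examples, start=1):
        sections.append(f"### Example {i} ###\nInput: {example_input}\nOutput: {example_output}")
    # The context goes last, clearly separated from the instructions above it.
    sections.append(f"### Context ###\n{context}")
    return "\n\n".join(sections)

prompt = build_prompt(
    instruction="Summarize the customer review below in one sentence.",
    context="The headphones sound great, but the battery barely lasts a day.",
    output_format="A single sentence of at most 20 words.",
)
print(prompt)
```

When iterating, you can change one argument at a time (the instruction wording, the format, the number of examples) and compare the results.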

Suggested Prompt Techniques

Zero-shot Prompting

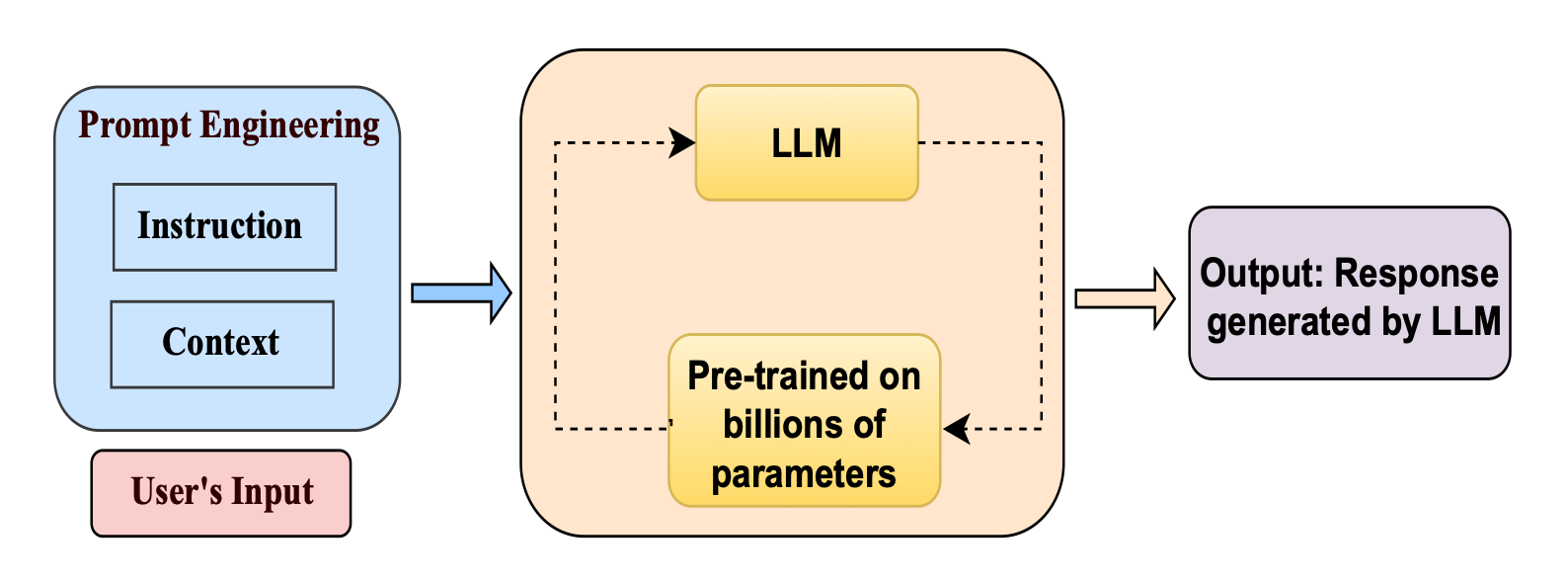

Zero-shot prompting is an interaction technique with large language models (LLMs) that leverages their extensive training on diverse datasets to perform tasks without the need for additional examples or demonstrations. It’s a capability where the model is given a direct instruction to execute a task, and it relies on its pre-existing knowledge to carry out the task effectively.

For instance, consider a scenario where the task is text classification, specifically sentiment analysis. A zero-shot prompt might simply ask the model to classify the sentiment of a given text. The prompt could be straightforward, such as:

Prompt: “Classify the sentiment of this statement: ‘I love Mondays.’”
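Many LLM APIs accept prompts as a list of chat messages with “role” and “content” fields (the OpenAI-style format that many providers support). A minimal sketch, assuming that format, of the zero-shot prompt above plus a small parser for the reply; the label set and helper names are hypothetical, and the actual model call is left out.

```python
import re

LABELS = ("positive", "negative", "neutral")

def zero_shot_messages(statement):
    """Chat messages for zero-shot sentiment classification: a direct instruction, no examples."""
    return [
        {"role": "system",
         "content": "Reply with exactly one word: positive, negative, or neutral."},
        {"role": "user",
         "content": f"Classify the sentiment of this statement: '{statement}'"},
    ]

def parse_label(reply):
    """Map a free-text model reply to the first known label it mentions, or None."""
    for word in re.findall(r"[a-z]+", reply.lower()):
        if word in LABELS:
            return word
    return None

messages = zero_shot_messages("I love Mondays.")
print(messages)
```

Asking for a single word in a fixed label set makes the reply easy to parse, even when the model adds punctuation or a short preamble.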

Few-shot Prompting

Few-shot prompting improves the performance of large language models on more complex tasks by including a small set of demonstrations (input-output examples) in the prompt. The model does not update its weights; it infers the pattern from the examples in context and applies it to the new input, which steers it toward better performance.

For instance, in Brown et al. 2020, one task was to use a newly defined word correctly in a sentence. With just one example (1-shot), the model was able to understand and perform the task. For more challenging tasks, increasing the number of examples can help, for example to 3-shot, 5-shot, or even 10-shot prompting.
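A minimal sketch of how a k-shot prompt is assembled: k labeled demonstrations followed by the new input with its label left blank for the model to complete. The sentiment demonstrations and the helper name are hypothetical illustrations, not the examples used by Brown et al.

```python
def few_shot_prompt(examples, query,
                    instruction="Classify the sentiment of each text as positive or negative."):
    """Build a k-shot prompt: k labeled demonstrations, then the new input with its label blank."""
    blocks = [instruction]
    for text, label in examples:
        blocks.append(f"Text: {text}\nSentiment: {label}")
    blocks.append(f"Text: {query}\nSentiment:")
    return "\n\n".join(blocks)

demos = [
    ("This movie was a delight from start to finish.", "positive"),
    ("The service was slow and the food arrived cold.", "negative"),
    ("I would happily buy this again.", "positive"),
]
print(few_shot_prompt(demos, "The update broke every feature I relied on."))  # a 3-shot prompt
```

Passing more or fewer demonstrations changes k; passing none turns it back into a zero-shot prompt.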

Chain-of-Thought Prompting

Chain-of-Thought (CoT) prompting is an advanced technique that enhances a language model’s ability to perform complex reasoning tasks by eliciting the intermediate reasoning steps explicitly, typically by including examples whose answers spell out those steps before giving the final answer.

A popular variation of CoT prompting is Zero-shot CoT. This approach adds a simple instruction such as “Let’s think step by step” to the original prompt, which encourages the model to reason through the problem even without worked examples. For instance:

Prompt: “What is the result of 15 + 7? Let’s think step by step.”

The model might respond by breaking down the addition into more manageable steps:

Output:

- Start with the first number: 15.

- Add the second number, which is 7.

- Split 7 into 5 and 2: 15 + 5 = 20, and 20 + 2 = 22.

- The sum of 15 and 7 is 22.
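In the paper that introduced Zero-shot CoT (Kojima et al. 2022), the trigger phrase is used in two stages: the first prompt elicits the reasoning, and a second prompt feeds that reasoning back and asks for the final answer. The sketch below only builds the two prompts; the hard-coded `reasoning` string stands in for whatever the model returns from the first call, and the exact extraction wording is an assumption you can adjust.

```python
COT_TRIGGER = "Let's think step by step."

def reasoning_prompt(question):
    """Stage 1: ask the question and append the zero-shot CoT trigger."""
    return f"Q: {question}\nA: {COT_TRIGGER}"

def answer_extraction_prompt(question, model_reasoning):
    """Stage 2: feed the model's reasoning back and ask only for the final answer."""
    return (f"{reasoning_prompt(question)} {model_reasoning}\n"
            "Therefore, the answer (in arabic numerals) is")

# Hypothetical stage-1 output from a model, used only to show the second prompt.
reasoning = "Start with 15. Split 7 into 5 and 2: 15 + 5 = 20, and 20 + 2 = 22."
print(answer_extraction_prompt("What is the result of 15 + 7?", reasoning))
```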

Self-Consistency

Instead of relying on a single, possibly flawed, reasoning path, self-consistency leverages the power of sampling multiple diverse reasoning paths. By doing so, it selects the most consistent answer from these paths, which can significantly improve the model’s performance on tasks that require arithmetic and commonsense reasoning.

Here’s a simple example to illustrate the concept of self-consistency:

Prompt: “A farmer has 12 chickens and some cows. Each chicken lays 2 eggs per day. How many eggs does the farmer get each day?”

The model might provide different outputs:

- Output 1: “The farmer gets 24 eggs each day because there are 12 chickens and each gives 2 eggs.”

- Output 2: “The total number of eggs is 24, as calculated by multiplying 12 chickens by 2 eggs each.”

- Output 3: “The calculation for the eggs is 12 times 2, which equals 24.”

Here all three outputs arrive at 24 eggs, so 24 is selected as the final answer. When sampled reasoning paths disagree, the answer reached by the majority of paths is chosen, which is more reliable than trusting any single path.
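A minimal sketch of the voting step in self-consistency. In practice the reasoning paths come from repeated calls to the model with sampling enabled (temperature above 0); here they are hard-coded, hypothetical strings, one of them deliberately wrong, and the “The answer is N.” ending is an assumed output format.

```python
import re
from collections import Counter

def final_answer(path):
    """Pull the final answer from a path ending in 'The answer is N.' (assumed format)."""
    match = re.search(r"answer is\s*(-?\d+)", path, flags=re.IGNORECASE)
    return match.group(1) if match else None

def self_consistency(paths):
    """Majority vote over the final answers of several sampled reasoning paths."""
    answers = [a for a in map(final_answer, paths) if a is not None]
    if not answers:
        return None, 0.0
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / len(answers)

# Hypothetical sampled reasoning paths for the farmer question.
paths = [
    "Each of the 12 chickens lays 2 eggs, so 12 * 2 = 24. The answer is 24.",
    "12 chickens times 2 eggs per chicken gives 24 eggs. The answer is 24.",
    "Adding 12 chickens and 2 eggs gives 14. The answer is 14.",
]
print(self_consistency(paths))  # ('24', 0.6666666666666666)
```

The vote share that comes back alongside the answer gives a rough sense of how much the sampled paths agree.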

Tree of Thoughts

Tree of Thoughts (ToT) is a prompting framework designed to enhance the reasoning capabilities of large language models. Rather than following a single chain of reasoning, ToT has the model generate several candidate intermediate steps (“thoughts”) at each stage, evaluate how promising each one is, and explore the resulting tree with a search strategy such as breadth-first or depth-first search, backtracking when a branch looks unpromising. It is particularly useful for complex tasks that require exploration or lookahead, where an early wrong step is hard to recover from.
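A minimal sketch of the search side of Tree of Thoughts, assuming a breadth-first variant that keeps only the most promising partial solutions at each depth. To keep it runnable, the two places where an LLM would be called are replaced by hypothetical stand-ins on a toy puzzle (reach a target number from a start value using “+3” and “×2” steps): `propose` plays the role of the model generating candidate next thoughts, and `evaluate` plays the role of the model judging how promising a partial solution is.

```python
def propose(path):
    """Stand-in for the LLM thought generator: candidate next steps from the current state."""
    current = path[-1]
    return [path + [current + 3], path + [current * 2]]

def evaluate(path, target):
    """Stand-in for the LLM state evaluator: higher means more promising."""
    return -abs(target - path[-1])

def tree_of_thoughts_bfs(start, target, breadth=2, max_depth=6):
    """Expand every kept path, stop at a solution, otherwise keep the best `breadth` paths."""
    frontier = [[start]]
    for _ in range(max_depth):
        candidates = [child for path in frontier for child in propose(path)]
        for path in candidates:
            if path[-1] == target:
                return path
        # Prune the tree: keep only the most promising partial solutions.
        candidates.sort(key=lambda p: evaluate(p, target), reverse=True)
        frontier = candidates[:breadth]
    return None

print(tree_of_thoughts_bfs(1, 11))  # [1, 4, 8, 11]
```

With a real model, `propose` would prompt the LLM for several possible next steps and `evaluate` would ask it to rate each partial solution; raising `breadth` explores more branches at higher cost.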

For more prompt techniques, see the Prompt Engineering Guide website.

Top LLM APIs for AI Question Answering

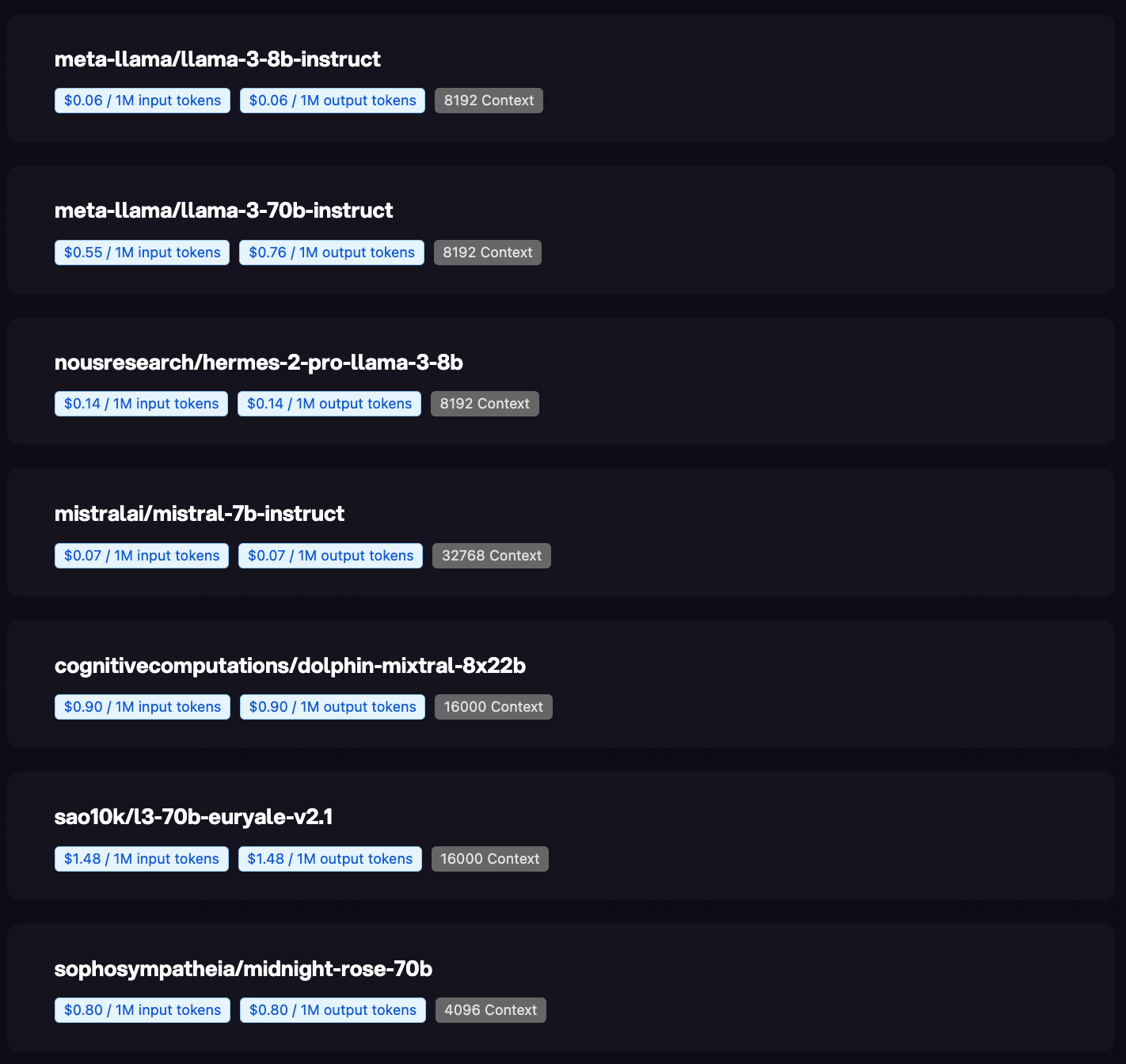

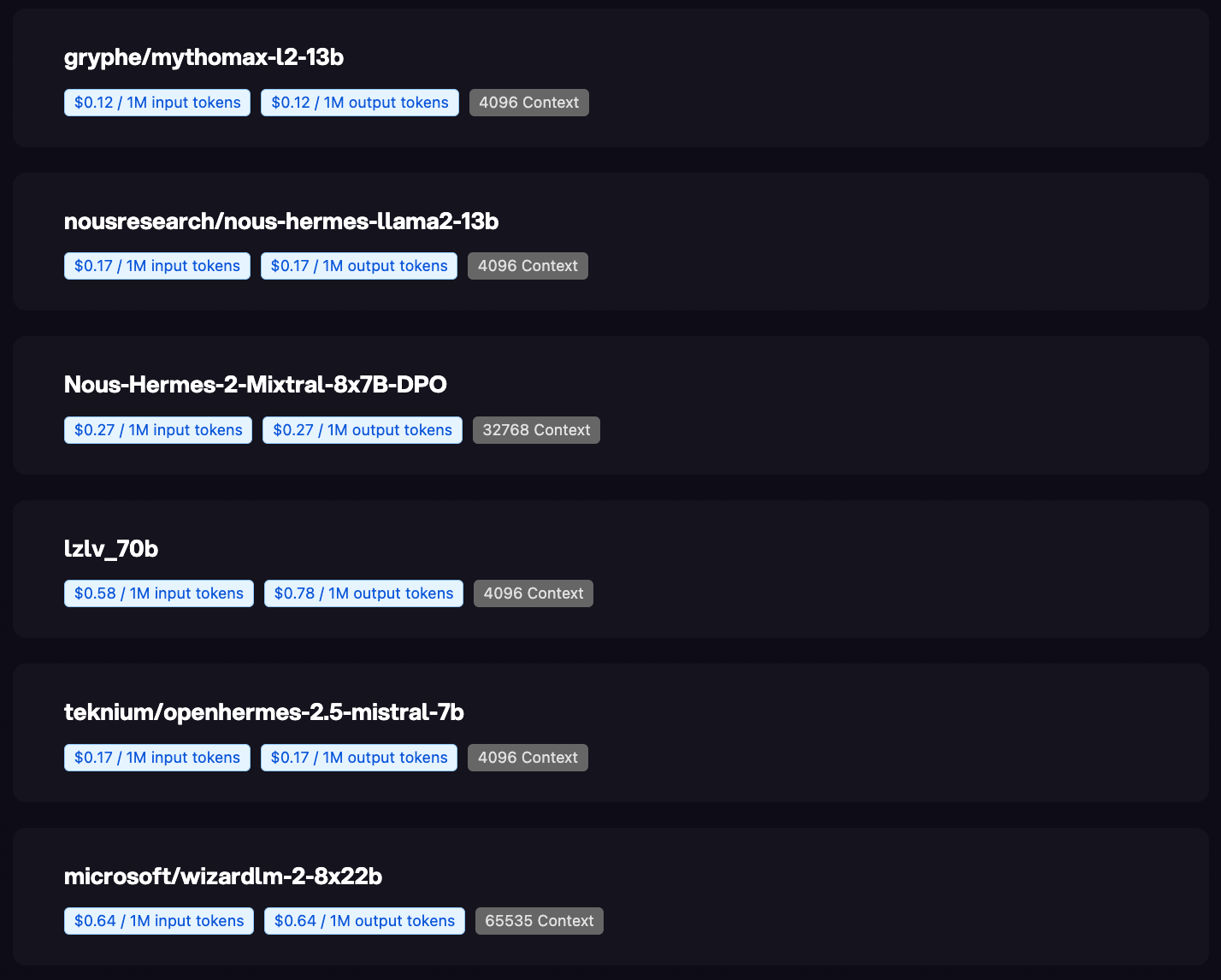

Novita AI provides developers with cost-effective LLM APIs with strong performance. Here are some popular LLM APIs on the Novita AI platform:

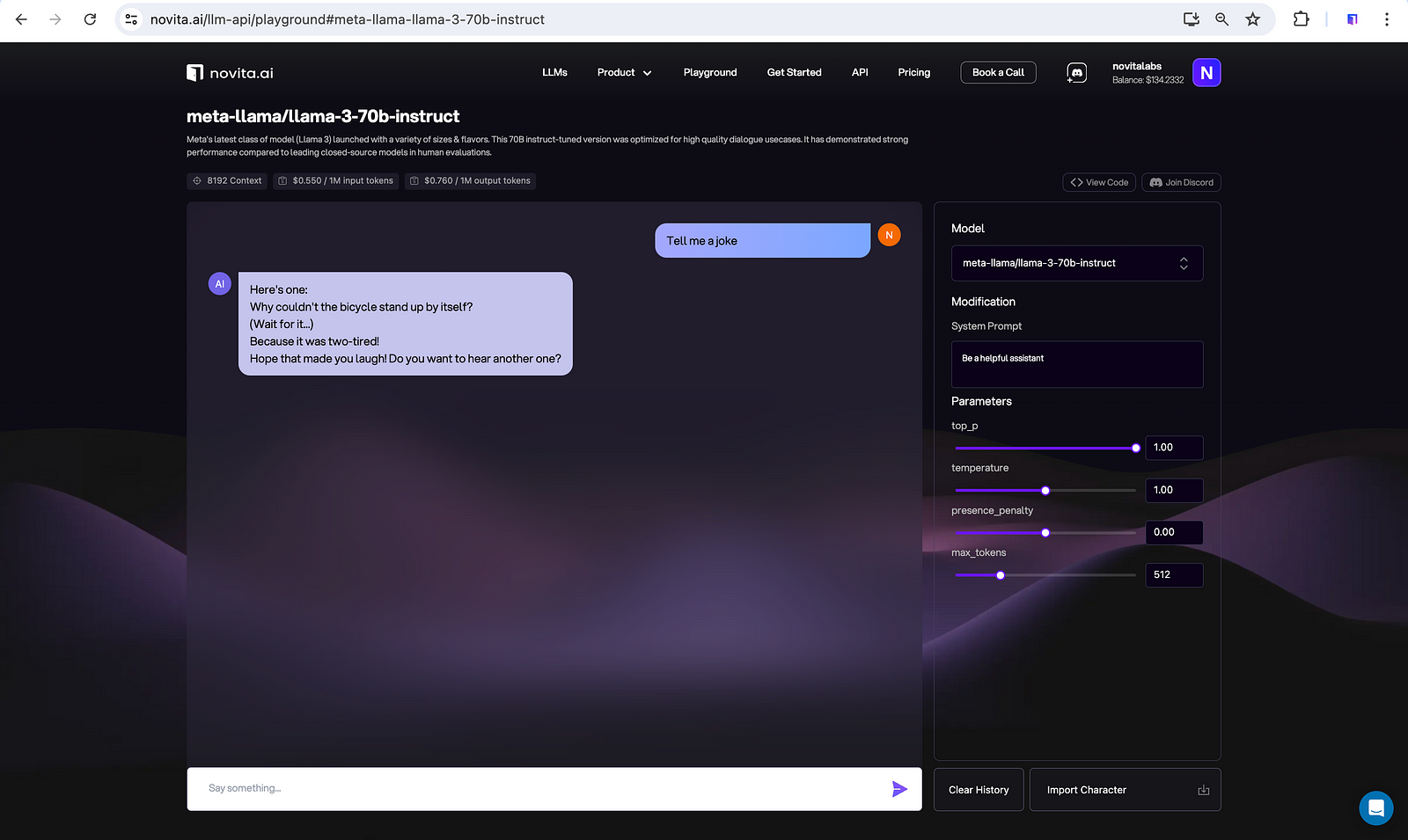

Llama-3-8b-instruct & Llama-3-70b-instruct on Novita AI

Llama 3 is Meta’s latest family of models, released in a variety of sizes and flavors. Llama-3-8b-instruct and Llama-3-70b-instruct are optimized for high-quality dialogue use cases and demonstrated strong performance against leading closed-source models in human evaluations.

Hermes-2-pro-llama-3-8b on Novita AI

Hermes-2-pro-llama-3-8b is an upgraded, retrained version of Nous Hermes 2, trained on an updated and cleaned version of the OpenHermes 2.5 dataset as well as a newly introduced Function Calling and JSON Mode dataset developed in-house by Nous Research.

Mistral-7b-instruct on Novita AI

Mistral-7b-instruct is a high-performing, industry-standard 7.3B parameter model, with optimizations for speed and context length.

Mythomax-l2-13b on Novita AI

Mythomax-l2-13b is a merge of MythoLogic-L2 and Huginn. The idea behind the merge is that each layer is composed of several tensors, which are in turn responsible for specific functions. Using MythoLogic-L2’s robust understanding on the input side and Huginn’s extensive writing capability on the output side appears to have produced a model that excels at both.

Openhermes-2.5-mistral-7b on Novita AI

Openhermes-2.5-mistral-7b is a state-of-the-art Mistral fine-tune and a continuation of the OpenHermes 2 model, trained on additional code datasets.

Check the Novita AI website for more information about pricing and other available models.

Additionally, you can try our LLMs for free on Novita AI Playground.
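If you want to call one of these models from your own code, here is a minimal sketch using only the Python standard library. It assumes Novita AI exposes an OpenAI-compatible chat-completions endpoint; the base URL, the model identifier, and the `NOVITA_API_KEY` environment variable name are assumptions to check against Novita AI’s current API documentation before use.

```python
import json
import os
import urllib.request

# Assumptions: verify both values against Novita AI's current API documentation.
BASE_URL = "https://api.novita.ai/v3/openai"  # assumed OpenAI-compatible base URL
MODEL = "meta-llama/llama-3-8b-instruct"      # assumed model identifier

def build_request(question, api_key, model=MODEL, max_tokens=256):
    """Build an OpenAI-style chat-completions POST request for a single question."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant. Answer concisely."},
            {"role": "user", "content": question},
        ],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

def ask(question):
    """Send the request and return the first choice's text (OpenAI-style response assumed)."""
    api_key = os.environ["NOVITA_API_KEY"]  # hypothetical environment variable name
    with urllib.request.urlopen(build_request(question, api_key)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a valid key in the environment, `ask("What is the capital of France?")` would return the model’s answer as a string. If the endpoint is OpenAI-compatible as assumed, the official `openai` client pointed at the same base URL should work as well.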

Implementing AI Answering Questions in Your Projects

As language models continue to advance, developers can leverage powerful AI question-answering capabilities to enhance a wide range of applications. Here are some scenarios where you can utilize large language model (LLM) APIs to enable AI-powered question answering:

Customer Support Chatbots

Integrate AI question-answering into your customer service chatbots to provide quick and accurate responses to user queries. This can lead to faster issue resolution, improved customer satisfaction, and reduced load on human support agents.

Knowledge Management Systems

Develop knowledge management solutions that allow users to ask questions and retrieve information from your organization’s internal knowledge base or other data sources using AI-powered question answering.
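A common way to build this is retrieval-augmented generation: find the passages most relevant to the question, then ask the LLM to answer using only those passages. The sketch below is a toy version under stated assumptions: the knowledge-base entries and helper names are hypothetical, and simple word overlap stands in for the embedding-based search a production system would typically use. The returned prompt would then be sent to an LLM API.

```python
import re

def tokenize(text):
    """Lowercase word set; a deliberately simple stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Rank documents by word overlap with the question (toy substitute for embedding search)."""
    query_words = tokenize(question)
    ranked = sorted(documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:top_k]

def grounded_prompt(question, documents, top_k=1):
    """Build a prompt that asks the model to answer only from the retrieved passages."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )

# Hypothetical internal knowledge-base entries.
knowledge_base = [
    "Employees accrue 1.5 vacation days per month of service.",
    "The VPN requires the company authenticator app.",
    "Expense reports are due by the 5th of each month.",
]
print(grounded_prompt("How many vacation days do employees accrue?", knowledge_base))
```

Telling the model to admit when the context lacks the answer helps keep its responses grounded in your organization’s own data.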

Educational Applications

Integrate AI question-answering into e-learning platforms, tutoring systems, and virtual classrooms to give students personalized support, answer their questions, and provide explanations on course materials.

Research and Analysis Tools

Empower researchers, analysts, and subject matter experts with AI question-answering features that can quickly synthesize information from large volumes of data, documents, and research papers to aid in their work.

In-app User Assistance

Embed AI question-answering capabilities directly into your application’s user interface, allowing users to get immediate answers to their questions without having to navigate complex help documentation or search through community forums.

AI Companion Chat

Develop AI-powered chatbots that can engage in open-ended conversations, provide companionship, and answer a wide range of questions on various topics, creating a more personalized and enriching user experience.

Conclusion

In conclusion, AI’s ability to answer questions is driven by advanced NLP techniques, including neural networks and transformer architectures. We’ve seen how AI systems have evolved from simple chatbots to sophisticated models capable of nuanced conversations. Evaluating these systems involves various benchmarks, and effective interaction requires thoughtful prompt engineering. As AI continues to advance, its applications in customer support, education, research, and more will become increasingly impactful. By understanding these key points, you can better appreciate the remarkable capabilities and future potential of AI in answering questions.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. With seamlessly integrated APIs, serverless computing, and GPU acceleration, we provide the cost-effective tools you need to rapidly build and scale your AI-driven business. Eliminate infrastructure headaches and get started for free — Novita AI makes your AI dreams a reality.