Ultimate LoRA Training Guide

Discover the ultimate LoRA training guide for comprehensive insights and expert tips. Elevate your knowledge with our latest blog.

Welcome to the ultimate guide for LoRA training! LoRA, short for Low-Rank Adaptation, is a fine-tuning technique that trains a small set of additional weights on top of a frozen base model instead of retraining the whole model. It was first proposed for large language models and has since become one of the most popular ways to customize Stable Diffusion image generation. In this comprehensive guide, we will cover what LoRAs are, their different types, the training process, troubleshooting common problems, and some advanced concepts. Whether you’re a beginner starting your journey with LoRAs or an experienced practitioner looking to enhance your skills, this guide aims to give you the information and guidance you need.

Understanding LoRA

To get the most out of LoRAs, it’s important to understand the key concepts involved. A LoRA is not a standalone model: it is a small file of extra weights that is applied on top of a base model such as Stable Diffusion. The settings you will meet most often are the network rank (the size of the low-rank matrices), the network alpha (a scaling factor for the LoRA update), and the choice of which layers to adapt, typically the attention layers of the model. With these fundamentals in place, you’ll be better equipped to make good decisions in your training process.

What are LoRAs?

LoRAs are small add-on weight files that teach a base model a new concept, such as a character, an art style, or an object, without changing the base model itself. During training, the base weights stay frozen and only a pair of small low-rank matrices per adapted layer is learned, which is why LoRA files are typically much smaller than full checkpoints. LoRAs are often mentioned alongside textual inversion, but the two are different techniques: textual inversion learns a new text embedding for a keyword, while a LoRA modifies the behavior of the model’s layers.

In image generation, LoRAs are trained on top of a base model such as Stable Diffusion. The base model serves as the starting point, and through iterative training the LoRA learns the adjustments needed to produce a specific subject or style. Stable Diffusion works in a compressed latent space rather than directly on pixels, which is covered in the VAE section later in this guide.
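To make the size difference concrete, here is a minimal sketch that compares the number of weights in one dense layer with the number of trainable weights in a LoRA pair for that layer. The layer size (768 x 768) and the rank (8) are illustrative assumptions, not values taken from this guide or from any particular trainer.

```python
def full_param_count(d_out: int, d_in: int) -> int:
    """Number of weights in a dense layer of shape (d_out, d_in)."""
    return d_out * d_in


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Number of trainable weights in a LoRA pair for that layer.

    The pair consists of B with shape (d_out, rank) and A with shape (rank, d_in).
    """
    return rank * (d_out + d_in)


# Illustrative sizes only (assumed for this example).
d_out, d_in, rank = 768, 768, 8
full = full_param_count(d_out, d_in)
lora = lora_param_count(d_out, d_in, rank)
print(full, lora, full // lora)  # prints: 589824 12288 48
```

Under these assumptions the LoRA pair holds about 1/48 of the weights of the layer it adapts, which is the main reason LoRA files stay small.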

The Significance of Stable Diffusion in LoRAs

Stable Diffusion is the base model that most image LoRAs are trained for. It is a text-to-image diffusion model: it generates an image by starting from noise and removing that noise step by step, guided by the text prompt. A LoRA is tied to the base model family it was trained on, so choosing the base model is one of the first decisions in LoRA training. As with any fine-tuning, the learning rate has to be chosen carefully so that the model learns the dataset without becoming unstable.

Because the base model has already learned a broad range of visual patterns and the connections between text and images, a LoRA only needs to learn what is new. This is why a relatively small dataset can be enough to adapt the model to a specific art style, level of image quality, or aesthetic.

The number of images used in training also matters. A dataset that is large and varied enough lets the LoRA capture the range of patterns in your subject or style, while a dataset that is too small or too uniform tends to produce a LoRA that simply reproduces its training images.

Different Types of LoRAs

LoRAs can be grouped by what they are trained to do. Understanding the different types will help you choose or train the most suitable one for your needs. In this section, we will explore three main types: general purpose LoRAs, style/aesthetic LoRAs, and character/person LoRAs. Each type is trained to produce a particular kind of output, so you can tailor image generation to your requirements.

General Purpose LoRAs

General purpose LoRAs cover broad concepts rather than one narrow subject or style. Training typically adapts the attention layers of the Stable Diffusion model and, optionally, the text encoder that turns prompts into embeddings. The training data for a general purpose LoRA should be diverse, covering many examples of the concept in different contexts.

To obtain better results with general purpose LoRAs, regularization images can be added to the dataset. These are images of the general class the concept belongs to, and they help the model generalize rather than overwrite what it already knows. The training script is a vital component as well, since it exposes the settings that control the training process. Two of the most important are the network rank, which sets the capacity of the LoRA, and the network alpha, which scales the strength of the learned update relative to the rank.
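The relationship between network rank and network alpha can be sketched in a few lines. A common convention in LoRA implementations is to scale the low-rank update by alpha / rank before adding it to the frozen weight; the sketch below assumes that convention, and the function names and tiny matrices are hypothetical, chosen only so the arithmetic is easy to follow.

```python
def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def apply_lora(weight, lora_b, lora_a, alpha, rank):
    """Return weight + (alpha / rank) * (lora_b @ lora_a).

    Assumes the alpha / rank scaling convention; `weight` itself is not modified.
    """
    scale = alpha / rank
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# Toy example: a 2x2 frozen weight with a rank-1 LoRA pair.
weight = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]      # shape (2, 1)
lora_a = [[3.0, 4.0]]        # shape (1, 2)
print(apply_lora(weight, lora_b, lora_a, alpha=0.5, rank=1))
# prints: [[2.5, 2.0], [3.0, 5.0]]
```

With this convention, halving alpha at a fixed rank halves the strength of the update, which is why alpha and learning rate are often tuned together.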

Style/Aesthetic LoRAs

Style/aesthetic LoRAs focus on generating images with a specific art style or aesthetic quality. By fine-tuning on top of the base model, they steer image generation toward the desired look, whether that is a particular art style, a specific visual treatment, or an overall level of image quality.

In style/aesthetic LoRAs, the folder structure plays a crucial role in organizing the training images. The batch size, which determines the number of images processed in each training iteration, is also an important parameter to consider. Adjusting the training settings, such as learning rate and activation tags, further optimizes the style/aesthetic LoRA training process, ensuring the model captures the desired image characteristics accurately.

Character/Person LoRAs

Character/person LoRAs specialize in generating images of different poses, facial expressions, and styles, simulating the appearance of different individuals. These models excel at capturing the unique features and characteristics of specific individuals, enabling the generation of diverse and realistic images.

In character/person LoRAs, the default settings are a reasonable starting point, but fine-tuning a few parameters makes a clear difference: the quality and number of training images, the trigger words used for specific poses, and the learning rate. By carefully adjusting these settings, character/person LoRAs can generate images that accurately represent the targeted individual or character.

Getting Started with LoRAs

Now that we have explored the different types of LoRAs, it’s time to dive into the process of getting started with LoRAs. This section will guide you through the essential steps and considerations to begin your journey in LoRA training. Whether you’re downloading pre-trained LoRAs or starting from scratch, these guidelines will provide a solid foundation for your training process.

Where to Download LoRAs?

Before downloading a LoRA, check which base model it was trained for, because a LoRA only works properly with a compatible base model. It also helps to look at the listed trigger words and recommended settings. If you cannot find a LoRA for what you need, you can train your own and add it to your library. The most common places to find LoRAs are:

- Civitai.com: Most popular and recommended

- HuggingFace.co: Less popular. The problem is HuggingFace puts LoRAs in the same category as checkpoint models, so there’s no easy way to find them.

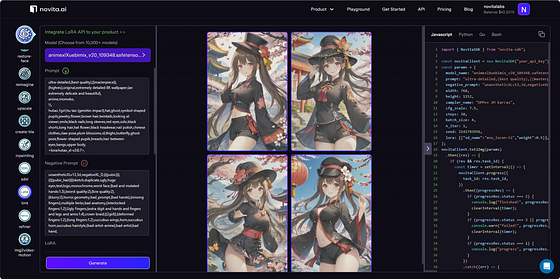

- novita.ai: Offers a wide range of models and LoRA resources, along with APIs for AI image generation and editing and for customizing Stable Diffusion models.

Essential LoRA Setup Guidelines

To keep training stable, follow the setup guidelines for your trainer carefully. Setup parameters such as the number of training iterations and the max resolution strongly influence how training progresses, and the right values can differ depending on the art style you are training. Paying attention to these settings up front is the easiest way to get consistent, high-quality results from LoRA training.

Preparing for LoRA Training

Preparation comes down to two things: building a diverse, relevant, well-captioned dataset, and getting familiar with the training script or UI so you can configure and supervise the training process effectively.

Dataset & Captioning Essentials

When preparing for LoRA training, start by gathering a set of images for the training data. Focus on high-quality images and ensure diversity by including different poses, facial expressions, and settings. Each image needs a caption describing it, and the caption is where you place the trigger word that will later activate the LoRA. Decide how many images you need to train the LoRA effectively, and consider the batch size and the number of epochs together, since they determine how many training steps the dataset will produce. It’s crucial to keep the dataset well organized, with clear folder names, to streamline captioning and training.
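A quick sanity check before training is to confirm that every image has a caption. The sketch below assumes one common layout, in which each image sits next to a `.txt` file with the same base name; your trainer may expect a different convention, and the function name and the list of image extensions are assumptions made for this example.

```python
from pathlib import Path

# Image extensions to look for (an assumed list, adjust to your dataset).
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def find_uncaptioned(dataset_dir):
    """Return sorted file names of images that lack a same-named .txt caption."""
    missing = []
    for path in Path(dataset_dir).rglob("*"):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            # Caption is assumed to live next to the image, e.g. cat.png -> cat.txt
            if not path.with_suffix(".txt").exists():
                missing.append(path.name)
    return sorted(missing)


# Usage (hypothetical folder name):
#   for name in find_uncaptioned("my_dataset"):
#       print("missing caption:", name)
```

Running a check like this after captioning catches files that were skipped, which is easier than discovering the gap halfway through a training run.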

Understanding the Training Script/UI

Familiarize yourself with the training script or UI before you start training. Most of the settings can be left at their defaults at first, but you should at least set the training resolution to match the images you want to generate and know where the learning rate and other key parameters are configured. During training, monitor the progress output, such as the loss and any sample images, to confirm that the model is actually learning. This makes it much easier to adapt the setup to different styles and subjects later.

Training Parameters for LoRAs

For effective training, a handful of parameters matter most. Choose the Stable Diffusion base model and set the number of repeats, which controls how many times each image is seen per epoch. Specify the model output name, the network rank, and the network alpha. Decide on the activation tags (trigger words) that will call up the LoRA in prompts. In UI-based trainers these options are usually grouped in a dedicated LoRA tab. Together with the size of your dataset, these settings determine how long training runs and how strongly the LoRA affects the output.
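The number of images, repeats, epochs, and the batch size together determine how many training steps a run will take. The following is a minimal sketch of that arithmetic, assuming one optimizer step per batch and no gradient accumulation; the function name and the example numbers are illustrative, and a specific trainer may count steps differently.

```python
import math


def total_training_steps(num_images, repeats, epochs, batch_size):
    """Estimate optimizer steps for a run.

    Assumes one step per batch and no gradient accumulation.
    """
    steps_per_epoch = math.ceil(num_images * repeats / batch_size)
    return steps_per_epoch * epochs


# 20 images, each repeated 10 times per epoch, 10 epochs, batch size 2.
print(total_training_steps(20, 10, 10, 2))  # prints: 1000

# A last partial batch still counts as a step: 250 samples / 4 -> 63 steps per epoch.
print(total_training_steps(25, 10, 5, 4))   # prints: 315
```

Estimating the step count up front makes it easier to compare runs: doubling the batch size roughly halves the number of steps for the same dataset.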

Understanding Learning Rate in LoRA Training

The learning rate controls how large each update to the LoRA weights is, and it has a major impact on training. A rate that is too high makes training unstable, while a rate that is too low makes it slow or leaves the LoRA undertrained. Experiment with different learning rates and adjust based on the results. A learning rate scheduler, which changes the rate over the course of training, is also useful for efficient LoRA training. Keeping each experiment in a clearly named output folder that records the learning rate used makes runs easier to compare.
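As an illustration of what a learning rate scheduler does, here is a minimal sketch of one widely used shape: a linear warmup followed by cosine decay to zero. This is one option among several, not necessarily the schedule your trainer uses by default, and the function name, step counts, and base rate below are assumptions for the example.

```python
import math


def lr_at_step(step, total_steps, base_lr, warmup_steps=0):
    """Learning rate at a given step: linear warmup, then cosine decay to zero."""
    if warmup_steps > 0 and step < warmup_steps:
        # Ramp up linearly so the first updates are small.
        return base_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(1.0, progress)
    return base_lr * 0.5 * (1.0 + math.cos(math.pi * progress))


# Print the rate at a few points of an assumed 1000-step run.
for step in (0, 100, 550, 1000):
    print(step, lr_at_step(step, total_steps=1000, base_lr=1e-4, warmup_steps=100))
```

In this sketch the rate starts near zero, reaches the base rate once warmup ends, is about half the base rate at the midpoint of the decay, and falls to zero at the final step.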

How to Avoid Overfitting in LoRA Training?

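To avoid overfitting in LoRA training, use regularization techniques and keep the dataset large and varied enough for the concept. Caption images consistently, with trigger words and style tags, so the LoRA attaches what it learns to those words instead of to everything in the image. Watch the total amount of training as well: the number of times the model sees each image, set by repeats and epochs, is a common cause of overfitting when it is too high. Typical signs of an overfitted LoRA are outputs that copy training images closely or ignore the rest of the prompt.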

Choosing the “Best” Epoch for Training

To identify the best epoch, analyze how training progressed rather than simply taking the last checkpoint. Check whether the loss has converged and stayed stable, and use evaluation metrics and validation data to compare checkpoints. Visualization tools help here, since they let you compare different epochs side by side. Evaluating performance on both the training and validation sets is essential: the “best” epoch is usually the one where validation results are strongest, before further training starts to overfit.
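If you record a validation loss for each saved epoch, picking a candidate "best" epoch can be as simple as finding the minimum. The sketch below assumes such a list exists; the function name and the loss values are made up for illustration, and the result is best treated as a starting point to confirm by looking at sample images from that checkpoint.

```python
def best_epoch(val_losses):
    """Return the 1-based epoch with the lowest validation loss.

    On ties, the earliest epoch wins.
    """
    if not val_losses:
        raise ValueError("val_losses must not be empty")
    best_index = min(range(len(val_losses)), key=lambda i: val_losses[i])
    return best_index + 1


# Made-up losses: improvement until epoch 3, then the loss rises again.
losses = [0.20, 0.15, 0.12, 0.13, 0.16]
print(best_epoch(losses))  # prints: 3
```

A validation loss that rises after the minimum while the training loss keeps falling is the usual sign that later epochs are overfitting.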

Troubleshooting Common Problems in LoRA Training

Problems during LoRA training usually trace back to the training parameters or to the dataset. When something goes wrong, check the settings first, read any error messages from the training script, and confirm that the run is configured for the LoRA output you actually want. Two common quality problems are overfitting, where the LoRA reproduces its training images too rigidly, and underfitting, where it has barely learned the concept. Resolving these issues early keeps the training process on track toward the desired outcome.

Advanced Concepts in LoRA Training

Multi-concepts and Balancing Datasets: When training LoRAs on more than one concept, it’s crucial to manage the concepts and balance the dataset effectively. This involves organizing the data into separate categories or folders so the dataset stays diverse and well distributed, which is essential for producing high-quality LoRAs. Folder names play a key role in categorizing and managing the data so it can be processed efficiently during training.

Multi-concepts and Balancing Datasets

When a single LoRA has to learn several concepts, experiment with the training parameters until each concept generates well. Regularization images help keep training stable. Balance matters too: a concept with many images will dominate one with few, so adjust the number of repeats per concept or even out the number of images for different poses. Make sure each folder name reflects the concept it contains, which keeps the dataset easy to organize and manage.
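One way to balance concepts with different image counts is to give each concept a repeat count that brings it to roughly the same number of samples per epoch. The sketch below shows that calculation. The `<repeats>_<concept>` folder naming is an assumption modeled on a convention used by some training scripts, and the function names, concept names, and counts are hypothetical.

```python
def balanced_repeats(image_counts, target_per_epoch):
    """Pick per-concept repeats so each concept contributes about target_per_epoch samples.

    image_counts maps concept name -> number of images (each must be positive).
    """
    return {
        concept: max(1, round(target_per_epoch / count))
        for concept, count in image_counts.items()
    }


def folder_name(repeats, concept):
    """Format a folder name as '<repeats>_<concept>' (assumed convention)."""
    return f"{repeats}_{concept}"


# Hypothetical dataset: three poses with very different image counts.
counts = {"standing": 40, "sitting": 10, "closeup": 25}
repeats = balanced_repeats(counts, target_per_epoch=200)
print(repeats)  # prints: {'standing': 5, 'sitting': 20, 'closeup': 8}
print([folder_name(r, c) for c, r in repeats.items()])
# prints: ['5_standing', '20_sitting', '8_closeup']
```

With these repeats each pose contributes 200 samples per epoch, so the pose with only 10 images is no longer drowned out by the one with 40.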

What is the Importance of the VAE in LoRA Training?

The VAE plays a crucial role in LoRA training because Stable Diffusion does not work on pixels directly. The VAE converts training images into the compact latent space where the model operates, and converts generated latents back into images. The explanation below shows why this compression matters.

Imagine you have an image, say 512x512 pixels. To represent this image, you need to consider 4 color channels (RGBA) for each of the 512x512 pixels. That’s a total of 4 x 512 x 512 = 1,048,576 individual values. Handling such a massive amount of data for every image would be highly inefficient and computationally expensive. To tackle this, data scientists developed methods to reduce the data size of these images, and one effective solution is the latent space.

Imagine a vast library where every possible image you can think of is stored in a compact form. Latent space is like this library for Stable Diffusion. It’s a mathematical space where complex data (like images) are transformed into a simpler, compressed form. This allows the model to efficiently work with and manipulate images.

The tool used to make this compression possible is the Variational Autoencoder (VAE). The VAE learns to compress images down into this latent space and reconstruct them back to their original form.
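The size of the saving can be worked out directly. The sketch below compares the number of values in the 512x512, 4-channel image from the example above with the number of values in its latent. The latent layout used here (4 channels, each side reduced by a factor of 8) is an assumption that matches the original Stable Diffusion 1.x VAE; other model families may use different shapes, and the function names are hypothetical.

```python
def value_count(channels, height, width):
    """Number of individual values in a channels x height x width array."""
    return channels * height * width


def latent_shape(height, width, latent_channels=4, downscale=8):
    """Latent shape under the assumed layout: 4 channels, 8x smaller per side."""
    return (latent_channels, height // downscale, width // downscale)


pixel_values = value_count(4, 512, 512)
latent_values = value_count(*latent_shape(512, 512))
print(pixel_values, latent_values, pixel_values // latent_values)
# prints: 1048576 16384 64
```

Under these assumptions the model works with 64 times fewer values per image than it would in pixel space, which is what makes training and generation practical on consumer hardware.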

Conclusion

In conclusion, LoRAs are a powerful tool for many applications, whether in art, design, or character creation. Understanding the Stable Diffusion base model they build on is crucial for accurate and consistent results. There are different types of LoRAs, ranging from general purpose to specific styles and characters. To get started, you can download existing LoRAs and follow the essential setup guidelines. Preparing for LoRA training involves gathering the right dataset and understanding the training script or UI. It is important to set proper training parameters and avoid overfitting, and choosing the best epoch and troubleshooting common problems are part of the process. Advanced topics such as multi-concept training and the role of the VAE round out the picture. Overall, LoRAs offer a flexible and lightweight way to customize image generation for a wide range of creative work.

